BestBlogs.dev Highlights Issue #13

Dear friends,

👋 Welcome to this week's curated article recommendations from BestBlogs.dev!

🚀 This issue focuses on the latest breakthroughs, innovative applications, and industry dynamics in the AI field, presenting you with essential insights on model advancements, emerging developer roles, product innovations, and market strategies. Let's explore the cutting edge of AI development together!

🔬 AI Models: Competition intensifies among large-scale models, with open-source and proprietary solutions each showcasing their strengths

- Meta releases Llama-3.1: Boasting up to 405B parameters, it outperforms GPT-4 and Claude 3.5, with a context length of 128k.

- Mistral AI launches Large 2 123B: Matching Llama 3.1 405B in performance, while supporting single-node deployment.

- Alibaba Cloud open-sources Qwen2-Audio: Processes various audio inputs, performing audio analysis and text responses to voice commands.

🛠️ AI Development: Emerging professions gain traction, with diverse skill requirements

- Prompt engineering optimization: LangChain blog details few-shot prompting techniques to enhance tool-calling performance.

- AI Agent development: Explores various AI Agent mechanisms and architectures, including ReAct, Reflexion, and Plan and Execute.

- AI Engineer job analysis: ShowMeAI Research Center provides an in-depth analysis of AI Engineer roles, skill requirements, and career trends.

💼 AI Products: Innovative applications proliferate, with product strategy proving crucial

- Conch AI Floating Button: Breaks application boundaries, making the AI assistant available across all apps and offering context-aware services.

- Qunar's AI-generated hotel videos: Optimizes hotel video production through AI technology, boosting user conversion rates.

- Andrew Ng's AI startup advice: Emphasizes the importance of clear product vision and rapid iteration, while assessing technical and commercial feasibility.

📈 AI News: Rapidly evolving industry dynamics, with new products and perspectives constantly emerging

- OpenAI launches SearchGPT: Leverages large language models for precise web information retrieval, supporting multimedia content display and conversational interaction.

- Scale AI valued at $13.8 billion: Focuses on solving AI data challenges, providing high-quality data for AI models.

- a16z founders discuss AI startups: Emphasize that AI companies provide services rather than products, and that hardware startups, though challenging, can more easily establish competitive advantages.

Table of Contents

- Llama-3.1 Released: A Comprehensive Look at the 405B Model

- How Open-Source Models Surpass GPT-4o: Meta Details Llama 3.1 405B in This Paper

- Mistral's New Flagship Challenges Llama 3.1! The Multilingual Programming Powerhouse: Large 2 123B

- Meta Llama 3.1 now available on Workers AI

- Achieves New State-of-the-Art! Alibaba's Newly Open-Sourced Speech Model Qwen2-Audio Outperforms Gemini-1.5-pro in Practice, Netizens: Just a Step Away from GPT-4o

- NVIDIA and Mistral AI Unleash the Small Model King! The 12 Billion Parameter Mighty Entry, Outperforming Llama 3, Runs on a Single NVIDIA L40S.

- Ma Yi: Large Models Lack Theoretical Guidance, Like Blind Men Touching Elephants; Experts Gather to Discuss AI's Next Steps

- ByteDance's Large Model Achieves Human-Level Simultaneous Interpretation

- From AI Beginner to Expert: 7 Key Details to Empower You to Excel

- Planning for Agents

- AI Agent: What Industry Leaders are Focusing On (Part 2) - Analyzing AI Agent with the 5W1H Framework

- Few-shot prompting to improve tool-calling performance

- Introducing LlamaExtract Beta: structured data extraction in just a few clicks

- AI Engineer | New Position Gains Traction Overseas, What About Domestic? Successful Transition: How Long Does It Take? Comprehensive Question Bank & Interview Experience | ShowMeAI Daily

- Issue 3321: Directly Calling Large Language Model APIs in Chrome

- Lessons Learned from Building Large Language Models in the Past Year

- Exploring and Implementing Vertical Domain Large Models in ToB

- Behind the Competition for the 'World's Longest Context Window': Does Longer Context Mean the End of RAG?

- Is RAG Technology Really 'Overhyped'?

- Mem0: Open-sourced Just One Day Ago, It Gained Nearly 10,000 Stars, Surpassing RAG and Giving Large Models Super Strong Memory Power!

- Mastering Prompt Writing Techniques: The Secret to Crafting Effective Prompts

- Redis Improves Performance of Vector Semantic Search with Multi-Threaded Query Engine

- A Letter from Andrew Ng: Working on Concrete Ideas

- Notion Surpasses 100 Million Users: Early Funding Pitch Deck Reveals AI Software as the New Hardware

- From App to OS: The Global Revolution of Conch AI Floating Button

- AI-Generated Video Practices for Qunar International Hotels

- A Comprehensive Look at Kimi: Features, Versions, Pricing, Advantages, and Applications

- Midjourney Business Model Canvas Analysis

- Is This the PMF for AI Hardware? European Company Launches AI-Guided Glasses, 1/10th the Price of a Guide Dog

- The Only Thing That Matters for a Startup is PMF | Z Talk

- Entrepreneur's Post-mortem: Mistakes I Made in 'Localization' During Overseas Expansion

- Amazon Fully Launches AI Shopping Gateway, Significantly Alters Traffic Landscape and Operational Logic

- Scale AI: A $13.8 Billion Company Solving the Right Problems, Doing the "Lowest-Tech" Business

- Deep Dive: AI's Seven Pioneers (OpenAI, Figure, Cognition, Scale, etc.): Innovation and Challenges in the AI Era?

- a16z Founders Discuss AI Entrepreneurship: AI Provides Services, Not Products; Hardware Entrepreneurship is More Challenging but Offers Greater Competitive Advantage

- A16z In-Depth Discussion: Challenges and Opportunities for AI in Healthcare

- DialogueWall AI CTO Zeng Guoyang: Large Models Are Expensive, How to Compete and Win?

- Challenges in Integrating Technology and Business, Uncertain ROI: Can Financial Large Models Overcome These Hurdles?

- In-Depth Analysis: 10 Most Noteworthy AI Products in the First Half of 2024 (Overseas Edition)

Llama-3.1 Released: A Comprehensive Look at the 405B Model

On July 23, 2024, Meta released Llama-3.1, a series of open-source AI models encompassing 8B, 70B, and 405B sizes. The maximum context length has been extended to 128k. The 405B model outperforms both GPT-4 and Claude 3.5, solidifying its position as one of the most powerful models available. Llama-3.1 achieves a comprehensive performance upgrade through increased context length and enhanced inference capabilities. This article delves into the evolving landscape of open-source AI, highlighting the advantages of open-source models, including their openness, modifiability, and cost efficiency. It predicts that open-source AI will become the industry standard in the future. The article also explores the security implications of open-source AI, arguing that it offers greater security compared to other options. Government support for open-source is seen as crucial for promoting global prosperity and security.

How Open-Source Models Surpass GPT-4o: Meta Details Llama 3.1 405B in This Paper

Meta's newly released Llama 3.1 405B model raises the bar for large language models by extending the context length to 128K; the family comes in three sizes: 8B, 70B, and 405B. The release matters to the AI community because it sets a new benchmark for open-source foundation models, and Meta states that its performance rivals the best closed-source models. The paper 'The Llama 3 Herd of Models' details the research behind the Llama 3 series, including data processing for pre-training and post-training, managing model scale and complexity, quantization for large-scale production inference, and multimodal extensions. Meta has also updated its license to allow developers to use Llama model output to improve other models, and has built an ecosystem of more than 25 partners to support applications of the new models. Despite these performance gains, Llama 3.1 may still face challenges in practical use, such as training cost, inference speed, and potential bias, which call for further research.

Mistral's New Flagship Challenges Llama 3.1! The Multilingual Programming Powerhouse: Large 2 123B

Mistral AI's latest release, Mistral Large 2, has set new performance benchmarks in multiple technical fields. With only 123B parameters, it has demonstrated abilities comparable to Llama 3.1 405B in code generation, mathematical reasoning, and multilingual processing. Moreover, Mistral Large 2 supports single-node deployment, significantly lowering the usage threshold, providing individuals and small teams with the opportunity to explore high-performance large language models. Compared to other models, Mistral Large 2 excels in generating concise and accurate responses, while possessing strong multilingual support capabilities, covering dozens of natural languages and over 80 programming languages. Its open-source nature and flexible deployment options make Mistral Large 2 a potential driving force for AI application development.

Meta Llama 3.1 now available on Workers AI

Cloudflare, a strong supporter of open-source, has partnered with Meta to introduce the Llama 3.1 8B model on its Workers AI platform. This model, available from day one, boasts multilingual support across eight languages and native function calling capabilities, allowing developers to generate structured JSON outputs compatible with various APIs. Additionally, Cloudflare's embedded function calling feature enhances efficiency by reducing manual requests and utilizing the ai-utils package for orchestration. Developers are encouraged to explore the new capabilities while adhering to Meta's Acceptable Use Policy and License.

Achieves New State-of-the-Art! Alibaba's Newly Open-Sourced Speech Model Qwen2-Audio Outperforms Gemini-1.5-pro in Practice, Netizens: Just a Step Away from GPT-4o

Alibaba Cloud's open-sourced speech model Qwen2-Audio is a large-scale audio-language model that handles various audio inputs, either analyzing the audio or responding in text to voice commands. The model supports two interaction modes, audio analysis and voice chat, and can decide on its own which mode to use and switch between them. Qwen2-Audio can analyze the sentiment of audio and can extract information effectively in noisy environments. Although the model excels in several areas, it does not currently include speech synthesis.

NVIDIA and Mistral AI Unleash the Small Model King! The 12 Billion Parameter Mighty Entry, Outperforming Llama 3, Runs on a Single NVIDIA L40S.

Mistral NeMo is a small model co-developed by NVIDIA and Mistral AI, with 12 billion parameters and a 128K context window. It outperforms Gemma 2 9B and Llama 3 8B in various benchmark tests, excelling in multi-turn dialogue, mathematics, common sense reasoning, world knowledge, and coding tasks. Designed specifically for enterprise users, Mistral NeMo supports applications such as chatbots, multilingual tasks, coding, and summarization. It uses the FP8 data format, which reduces memory requirements and speeds up deployment. Furthermore, Mistral NeMo is open-source and easy to deploy, capable of running on a single NVIDIA GPU, providing businesses with an efficient, flexible, and cost-effective AI solution.

Ma Yi: Large Models Lack Theoretical Guidance, Like Blind Men Touching Elephants; Experts Gather to Discuss AI's Next Steps

This article reports on the views of several AI experts at the 'Basic Science and AI Forum' of the 2024 International Basic Science Conference, focusing on the speech by Professor Ma Yi, Dean of the Data Science Academy at the University of Hong Kong. Professor Ma argued that current large model development lacks theoretical guidance, comparing it to 'blind men touching an elephant', and called for a return to theoretical foundations to explore the essence of intelligence, while stressing the importance of computation and execution.

Additionally, the article gathers the insights of other experts, including:

- Academician Guo Yike, who discussed mixture-of-experts models and knowledge embedding as responses to limited computing power, and argued that future large models need to move beyond the search paradigm by incorporating subjective values and emotional knowledge.

- Professor He Xiaodong, who believes that large model development has reached a plateau and needs super applications that demonstrate its value, and who sees multimodal learning as the way to address hallucination, broaden model generalization, and improve interactivity.

- Dr. Rui Yong, who emphasized developing 'intelligent agents' and 'hybrid frameworks', in particular enabling agents to understand the limits of their own capabilities, and combining cloud-based large models with privately deployed, knowledge-driven, personalized models.

ByteDance's Large Model Achieves Human-Level Simultaneous Interpretation

This article introduces CLASI, a novel simultaneous interpretation AI developed by ByteDance's research team. The system utilizes an end-to-end architecture design, effectively mitigating error propagation issues inherent in traditional cascaded models. CLASI leverages the robust language understanding capabilities of the Doubao base model and the ability to acquire knowledge from external sources, achieving near-professional level simultaneous interpretation results. CLASI has demonstrated impressive performance in various complex language scenarios, including tongue twisters, classical Chinese, and improvisational dialogue. Compared to professional human interpreters, CLASI has achieved significant leads in terms of Valid Information Proportion (VIP) and has even reached or surpassed human-level performance in certain test sets. The article also provides a detailed introduction to CLASI's system architecture, operating process, and its performance advantages in experiments, as well as discussing the challenges and development directions it may face in future practical applications.

From AI Beginner to Expert: 7 Key Details to Empower You to Excel

This article explains the concepts of artificial intelligence (AI), from the basics to practical applications, in clear and concise language. It first introduces basic terms such as machine learning, generative AI, hallucination, and bias, giving readers a preliminary understanding of AI. It then covers how AI models are trained and introduces key technologies such as natural language processing, the Transformer architecture, and RAG, as well as hardware foundations like NVIDIA H100 chips and neural processing units (NPUs). The article also analyzes the strategies and contributions of overseas tech giants such as OpenAI, Microsoft, Google, and Meta, along with the progress of Chinese companies like Baidu, Alibaba, and Tencent in AI models and applications. Finally, it explores the challenges facing AI development, such as hallucination and bias in AI models, and emphasizes the importance of resolving them.

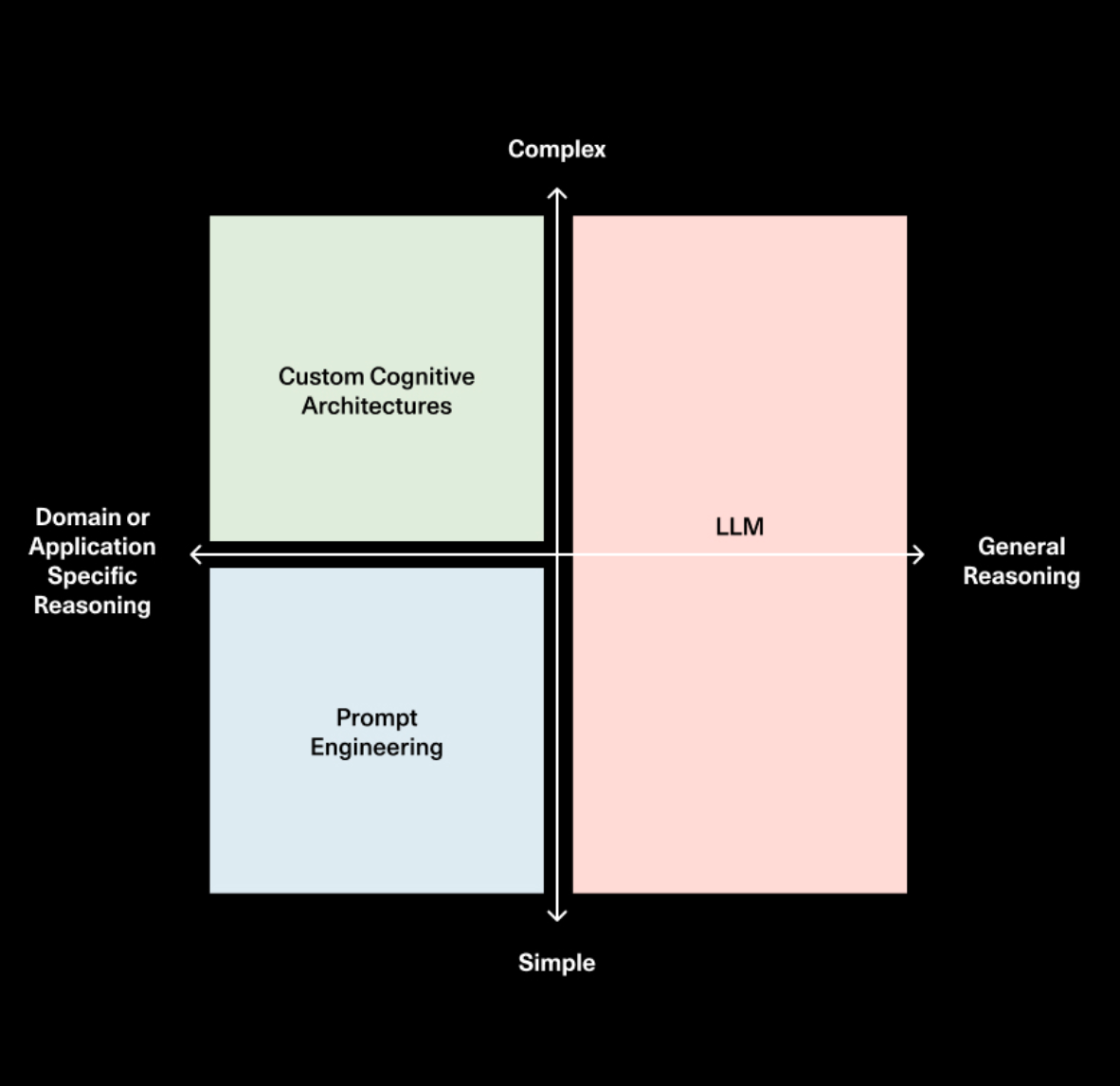

Planning for Agents

This article examines the limitations of LLMs in agent planning and reasoning, particularly in long-term scenarios. While function calling allows for immediate action selection, complex tasks demand a series of steps. The article proposes enhancing agent planning by providing comprehensive information to the LLM, modifying cognitive architectures, and employing domain-specific approaches. Notably, it highlights the effectiveness of domain-specific cognitive architectures in guiding agents by hardcoding specific steps, reducing the LLM's planning burden. The article introduces LangGraph, a tool designed to simplify building custom cognitive architectures, and predicts that while LLMs will become more intelligent, prompting and custom architectures will remain essential for controlling agent behavior, especially in complex tasks.
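To make the idea of a domain-specific cognitive architecture concrete, here is a minimal Python sketch in which the developer, not the model, fixes the order of steps. It does not use LangGraph's API; `fake_llm`, `STEPS`, and `run_support_flow` are hypothetical names invented for illustration, and the customer-support steps are an assumed example domain.

```python
from typing import Callable, Dict, List


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; it just echoes the task it was given."""
    return f"[model output for: {prompt}]"


# The order of steps is hardcoded by the developer, so the model never has
# to plan the overall sequence; it only handles one narrow step at a time.
STEPS: List[str] = ["classify_request", "look_up_order", "draft_reply"]


def run_support_flow(request: str, llm: Callable[[str], str] = fake_llm) -> Dict[str, str]:
    """Run each hardcoded step in order, passing earlier results forward."""
    state: Dict[str, str] = {"request": request}
    for step in STEPS:
        # Give the model everything gathered so far as context for this step.
        context = "; ".join(f"{key}={value}" for key, value in state.items())
        state[step] = llm(f"{step} given {context}")
    return state


if __name__ == "__main__":
    for key, value in run_support_flow("Where is my parcel?").items():
        print(key, "->", value)
```

The trade-off in this kind of design is flexibility for reliability: the flow cannot handle requests outside its fixed steps, but the model's planning burden shrinks to a single step at a time.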

AI Agent: What Industry Leaders are Focusing On (Part 2) - Analyzing AI Agent with the 5W1H Framework

This article walks through how AI Agents are built, covering the perception, brain, and action stages, and analyzes key technologies such as vector databases, RAG (Retrieval-Augmented Generation), and reranking. It explores various AI Agent mechanisms and architectures, including ReAct, Basic Reflection, Reflexion, ReWOO, Plan and Execute, LLMCompiler, LATS, and Self-Discover, as well as Function Calling and API-Bank in the action stage. The article also envisions the future integration of AI Agents with physical entities, such as robots for rescue missions. Finally, it emphasizes the controllability and security of AI Agents and offers practical learning suggestions: get hands-on experience, understand the core principles, and build real implementations.
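As a rough illustration of the ReAct pattern named above, the sketch below alternates model "thoughts" and tool "actions" with tool "observations" until the model emits a final answer. It is a toy under stated assumptions: `scripted_model` is a hypothetical stand-in for a real LLM, the `Action: tool[input]` text format is one common convention rather than a fixed standard, and `calculator` is an invented tool.

```python
import re


def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM: requests one calculation, then gives the answer."""
    if "Observation:" not in transcript:
        return "Thought: I need to compute this.\nAction: calculator[6 * 7]"
    last_observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {last_observation}"


def calculator(expression: str) -> str:
    """Tiny tool: multiplies two integers written as 'a * b'."""
    left, right = expression.split("*")
    return str(int(left) * int(right))


TOOLS = {"calculator": calculator}


def react(question: str, model=scripted_model, max_steps: int = 5):
    """Loop: the model reasons and picks an action, the tool result is fed back."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = model(transcript)
        transcript += "\n" + reply
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = re.search(r"Action: (\w+)\[(.*?)\]", reply)
        if match is None:
            break  # The model produced neither an action nor an answer.
        tool_name, tool_input = match.groups()
        transcript += f"\nObservation: {TOOLS[tool_name](tool_input)}"
    return None


if __name__ == "__main__":
    print(react("What is 6 times 7?"))
```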

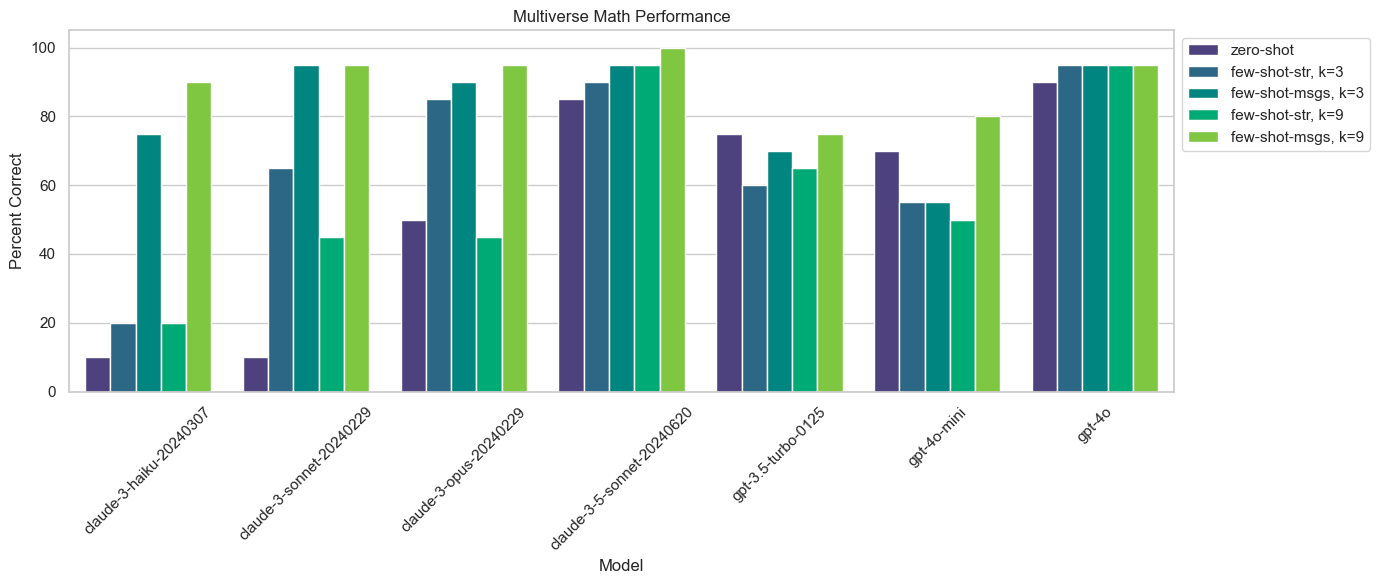

Few-shot prompting to improve tool-calling performance

This LangChain blog post delves into the application of few-shot prompting to boost the tool-calling capabilities of Large Language Models (LLMs). The authors emphasize the importance of tools in LLM applications and discuss LangChain's efforts in refining tool interfaces. The post elucidates the concept of few-shot prompting, where example inputs and desired outputs are incorporated into the model prompt to enhance performance. Through experiments on two datasets, 'Query Analysis' and 'Multiverse Math', the authors demonstrate the effectiveness of various few-shot prompting techniques. Notably, using semantically similar examples as messages significantly improves performance, especially for Claude models. The post concludes by highlighting future research avenues, including the use of negative examples and optimal methods for semantic search retrieval of few-shot examples.
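The technique of inserting semantically similar examples as messages can be sketched as follows. This is not LangChain's implementation: the example pool, the message dictionaries, and the function names are hypothetical, and a simple word-overlap score stands in for the embedding-based similarity a real system would likely use.

```python
import re

# Hypothetical example pool: (user question, expected tool call) pairs.
EXAMPLES = [
    ("What is 2 plus 3?", {"name": "add", "args": {"a": 2, "b": 3}}),
    ("Multiply 4 by 5", {"name": "multiply", "args": {"a": 4, "b": 5}}),
    ("What is 7 minus 1?", {"name": "subtract", "args": {"a": 7, "b": 1}}),
]


def tokens(text):
    """Lowercased word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a, b):
    """Jaccard overlap between the word sets of two strings."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def build_messages(question, k=2):
    """Build a chat message list with the k most similar examples as prior turns."""
    ranked = sorted(EXAMPLES, key=lambda ex: similarity(question, ex[0]), reverse=True)
    messages = [{"role": "system", "content": "Use the math tools to answer."}]
    for user_text, tool_call in ranked[:k]:
        # Each example becomes a user turn followed by the assistant's tool call.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": "", "tool_calls": [tool_call]})
    messages.append({"role": "user", "content": question})
    return messages


if __name__ == "__main__":
    for message in build_messages("What is 10 plus 4?", k=1):
        print(message)
```

Selecting examples per query, rather than using one static set, keeps the prompt short while showing the model the tool calls most relevant to the question at hand.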

Introducing LlamaExtract Beta: structured data extraction in just a few clicks

This article announces the beta release of LlamaExtract, a managed service designed for structured data extraction from unstructured documents. This service is particularly beneficial for LLM applications, simplifying data processing for retrieval and RAG use cases. LlamaExtract allows users to infer a schema from a set of documents or manually define one. It then extracts values based on this schema, supporting various use cases like resume analysis, invoice processing, and product categorization. Accessible through a user-friendly UI and an API, LlamaExtract caters to both prototyping and integration needs. While currently in beta with limitations on file and page processing, LlamaExtract is under active development, with planned improvements including multimodal extraction and more robust schema inference. The service is available to all users, encouraging community feedback for future enhancements.

AI Engineer | New Position Gains Traction Overseas, What About Domestic? Successful Transition: How Long Does It Take? Comprehensive Question Bank & Interview Experience | ShowMeAI Daily

This article from the ShowMeAI research center analyzes the global development of the AI Engineer role, particularly in the context of the large language model (LLM) wave.

The article first reviews how the AI Engineer concept emerged and how a consensus around it formed overseas, noting that China lags behind in this area. It then examines the AI Engineer skill spectrum, including the shift from data and research constraints to product and user constraints, and the distinction between AI Engineers and traditional ML Engineers: AI Engineers focus more on applying AI models to products, while ML Engineers focus more on building and optimizing the models themselves. The article also shares the experiences of several successful AI Engineers, including their learning paths, day-to-day work, and essential skills. Finally, it looks at the skill mix and interview focus areas for AI Engineers from the recruiter's perspective, a useful reference for readers who aspire to the role.

Issue 3321: Directly Calling Large Language Model APIs in Chrome

Google is developing a series of Web platform APIs and browser functionalities aimed at enabling Large Language Models, including Gemini Nano, to run directly within the browser. Compared to self-deployed device-side AI, browser-integrated AI offers advantages such as ease of deployment, use of hardware acceleration, and offline usage. After enabling Gemini Nano in Chrome Canary, developers can use the Prompt API for text processing tasks such as translation, summarization, and question-answering. This technology promises to simplify the deployment and use of AI features, opening up new possibilities for frontend development.

Lessons Learned from Building Large Language Models in the Past Year

This article shares the experiences of six experts who have spent the past year building applications with large language models (LLMs). It first emphasizes the importance of prompt engineering, covering methods such as few-shot prompting, in-context learning, chain-of-thought prompting, and structured input and output, and then gives practical advice on optimizing prompt structure and context selection. The article goes on to introduce RAG and explains how to improve retrieval quality by combining keyword search with embedding-based retrieval. It also compares RAG with fine-tuning for handling new information and discusses the impact of long-context models on RAG. On model evaluation and optimization, the article highlights the limitations of the LLM-as-Judge method and recommends more reliable strategies, such as assertion-based unit tests, binary classification, and pairwise comparison. Finally, it points out that content moderation and factual inconsistency are significant challenges in practical applications and proposes some solutions.
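Assertion-based unit tests, one of the evaluation strategies mentioned above, can be as simple as a set of deterministic checks run against each model response. The sketch below is a hypothetical example for a summarization task: the JSON output shape, the word limit, and the rule that every number in the summary must appear in the source are all assumptions chosen for illustration.

```python
import json
import re


def check_summary_output(raw: str, source: str, max_words: int = 50) -> list:
    """Return a list of failed checks for one model response (empty means pass)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]
    if not isinstance(data, dict):
        return ["output is not a JSON object"]

    failures = []
    summary = data.get("summary", "")
    if not summary:
        failures.append("missing summary")
    if len(summary.split()) > max_words:
        failures.append("summary too long")
    # Every number quoted in the summary must also appear in the source text.
    for number in re.findall(r"\d+(?:\.\d+)?", summary):
        if number not in source:
            failures.append(f"number {number} not found in source")
    return failures


if __name__ == "__main__":
    source_text = "Revenue grew 12% to 340 million."
    response = json.dumps({"summary": "Revenue grew 15%."})
    print(check_summary_output(response, source_text))
```

Checks like these are cheap to run on every prompt change, which makes regressions visible without relying on another model's judgment.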

Exploring and Implementing Vertical Domain Large Models in ToB

Authored by Alibaba Cloud's logistics technology team, this article shares their practical experience in developing and deploying the 'Logistics AI' large model over the past year, focusing on the 'logistics experience' vertical domain.

The article begins by outlining the characteristics of ToB vertical domain large models. These models offer strong domain professionalism, high output quality, and effective performance on specific tasks. However, they also face challenges such as strict accuracy demands, complex knowledge base maintenance, and limited adaptability.

To address these challenges, the team adopted a Black-Box Prompt Optimization (BPO) approach to refine how questions are posed to the model. They combined this with the Reflexion framework, RAG, and SFT + DPO (Supervised Fine-Tuning and Direct Preference Optimization) to tackle alignment enhancement, Text2API, and knowledge base maintenance, ultimately improving model performance and adaptability.

Finally, the article showcases the application of 'Logistics AI' in real-world scenarios such as the 'Logistics Assistant', DingTalk group logistics service robots, and Qiniu Cloud's logistics merchant backend. It also introduces the 'Logistics AI Platform' product, which empowers users to quickly create and deploy customized logistics assistants, providing efficient and convenient solutions for the logistics industry.

Behind the Competition for the 'World's Longest Context Window': Does Longer Context Mean the End of RAG?

This article examines the development of long-context technology in large models and its potential impact on retrieval-augmented generation (RAG). Drawing on insights from several experts in the field, it analyzes the advantages of long context in handling complex tasks, such as multimodal questions and code generation, while also pointing out its cost and technical trade-offs. The article explores how long-context technology can be optimized at the hardware, machine learning engineering, and model architecture levels, and compares its strengths and weaknesses with those of RAG in practical application scenarios. It also covers multi-tenant challenges, approaches to model hallucination, the impact of price competition on technological progress, and trends in vectorization, multimodal large models, and other technologies, giving developers and tech enthusiasts a comprehensive technical interpretation and outlook.

Is RAG Technology Really 'Overhyped'?

This article provides a comprehensive analysis of how RAG is applied with large language models, including its benefits in precise question answering, recommendation systems, and information extraction. It also addresses the challenges RAG faces, such as data inconsistencies and semantic gaps. The article emphasizes that RAG complements both long-context models and Agents, and points out that combining RAG with Agents essentially means dividing complex problems into manageable parts. It also explores the potential of RAG in recommendation systems and its future development directions, including multimodal applications and data security.

Mem0: Open-sourced Just One Day Ago, It Gained Nearly 10,000 Stars, Surpassing RAG and Giving Large Models Super Strong Memory Power!

This article introduces Mem0, an open-source AI memory technology that has garnered widespread attention since its release. Mem0 aims to provide intelligent, self-improving memory layers for large language models, enabling personalized AI experiences across applications. Its core features include multi-layered memory, adaptive personalization, developer-friendly APIs, and cross-platform consistency. Compared to traditional RAG (Retrieval-Augmented Generation) technology, Mem0 offers significant advantages in entity relationship understanding, contextual continuity, adaptive learning, and dynamic information updating. Mem0 is easy to install and use, providing an easy-to-operate API, suitable for various AI application scenarios such as virtual companions, productivity tools, and AI agent customer support. The article also delves into Mem0's technical details, application cases, and differences with RAG, along with some simple code examples.

Mastering Prompt Writing Techniques: The Secret to Crafting Effective Prompts

This article delves into the art of crafting effective prompts to fully utilize the potential of large language models (LLMs), generating high-quality output content. The article first introduces the definition, function, and mechanism of prompts, which are structured input sequences used to guide pre-trained language models to generate expected output. It then elaborates on the key elements of excellent prompts, including clear goals and tasks, specific language descriptions, sufficient context information, detailed evaluation criteria, and assessment dimensions. These elements help reduce ambiguity and ensure models understand user intent. Furthermore, the article highlights eight optimization techniques for prompt design, including using samples and examples, maintaining simplicity and directness, avoiding ambiguity, providing step-by-step and hierarchical guidance, considering multiple possibilities and boundary conditions, setting up error correction mechanisms, maintaining language and cultural sensitivity, and ensuring data privacy and security. By applying these techniques, the quality of prompts can be significantly improved, guiding models to generate more accurate, relevant, and reliable output.

Redis Improves Performance of Vector Semantic Search with Multi-Threaded Query Engine

Redis, the popular in-memory data store, has released an enhanced Redis Query Engine leveraging multi-threading to boost query throughput while preserving low latency, which is critical for the growing use of vector databases in retrieval-augmented generation (RAG) for GenAI applications. This enhancement enables Redis to scale vertically, allowing concurrent index access for faster query processing even with large datasets. Redis claims sub-millisecond response times and average query latency under 10 milliseconds. The article highlights the limitations of traditional single-threaded architectures and how Redis's multi-threaded approach overcomes these limitations by enabling concurrent query processing without affecting the performance of standard Redis operations. Benchmark results demonstrate the superior performance of Redis's Query Engine compared to other vector database providers, general-purpose databases, and fully managed in-memory Redis cloud service providers. While focusing on technical capabilities, the article also provides insights into the vector database market, emphasizing the need for comprehensive solutions that address the broader challenges in AI-driven data retrieval. The article concludes by highlighting the importance of real-time RAG in GenAI applications like chatbots and emphasizes the need for data architectures that support real-time interactions.
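The general idea of serving several vector queries concurrently against a shared, read-only index can be illustrated with a small Python sketch. This is not how the Redis Query Engine is implemented: the in-memory `INDEX`, the brute-force cosine ranking, and all function names are assumptions made for illustration, and CPython threads will not give true CPU parallelism for this pure-Python math.

```python
import math
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy index: document id -> embedding vector.
INDEX = {
    "doc_a": [1.0, 0.0, 0.0],
    "doc_b": [0.0, 1.0, 0.0],
    "doc_c": [0.7, 0.7, 0.0],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def top_k(query, k=1):
    """Brute-force search: rank every document by similarity to the query."""
    ranked = sorted(INDEX, key=lambda doc_id: cosine(query, INDEX[doc_id]), reverse=True)
    return ranked[:k]


def search_many(queries, workers=4, k=1):
    """Answer several queries concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda q: top_k(q, k), queries))


if __name__ == "__main__":
    print(search_many([[1, 0, 0], [0, 1, 0], [1, 1, 0]]))
```

Because the index is only read during queries, the workers need no locking; a real engine also has to coordinate index updates with in-flight searches.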

A Letter from Andrew Ng: Working on Concrete Ideas

Andrew Ng emphasizes the importance of defining a concrete product vision in AI entrepreneurship and advancing projects through rapid iteration. A concrete product vision can accelerate team execution and help them quickly identify and solve problems. Rapid iteration enables the team to identify product flaws more quickly, learn from them, and pivot to more effective concrete ideas. Before implementing concrete ideas, it's necessary to assess their technical and commercial feasibility, ensuring the product's actual value and market demand.

Notion Surpasses 100 Million Users: Early Funding Pitch Deck Reveals AI Software as the New Hardware

- Notion has surpassed 100 million users, reflecting the success of its product and market strategy.

- Notion's mission is to 'Democratize Software', providing computing power to non-programmers, a mission that has been clear since 2013.

- As an AI-driven software product, Notion is viewed as a super AI RAG system, capable of understanding user input and providing intelligent feedback.

- Notion's success is partly attributed to its community and user-driven development model, allowing users to create and sell tools on the platform.

- Notion plans to continue integrating new features and launching new products to enhance user experience and expand market influence.

From App to OS: The Global Revolution of Conch AI Floating Button

The floating button launched by Conch AI breaks through application boundaries and makes the AI assistant available system-wide: users can get AI support at any time in any application, using the button for quick on-screen recognition, voice conversations, and more. It also tailors its service to the scenario, for example adjusting its response style in social apps, which reflects a deep understanding of user needs.

AI-Generated Video Practices for Qunar International Hotels

- Challenges and Opportunities: International hotels have low video coverage, but videos significantly improve booking rates, highlighting the immense market potential for video generation in this sector.

- AI-Powered Process: We have streamlined the professional film and television production process into four key steps: creative planning, storyboard creation, on-site shooting, and post-production editing, optimizing each step with AI technology.

- Quality Standards: Videos need to be valuable and engaging, with clear image quality and strong themes, in order to attract users and enhance their experience.

- Demonstration of Results: We showcased the actual effects of AI-generated videos and shared lessons learned in practice, such as the selection of video resolution and the use of narration and background music.

- Future Prospects: We plan to achieve customized video generation for high-end hotels and provide rapid market response capabilities to assist the operations team in collaborating with hotels.

A Comprehensive Look at Kimi: Features, Versions, Pricing, Advantages, and Applications

The Kimi Chat AI assistant supports extended context input of up to 2 million characters, providing customized services in areas such as academic research, the internet industry, programming, independent media creation, and the legal profession. Its key features include web search, efficient reading, professional document interpretation, data organization, creative assistance, and coding support, helping users improve work efficiency and learning outcomes.

Midjourney Business Model Canvas Analysis

Midjourney, founded in 2021, is an AI image generation tool that empowers users, even those without professional technical backgrounds, to create high-quality images from simple text descriptions within the Discord community. According to the article, its underlying technology leverages Stable Diffusion, and it generates revenue through a subscription model, with prices ranging from $10/month to $120/month. Midjourney's success can be attributed to its low-cost training model, the social attributes of the Discord platform that foster community co-creation, and the distinctive artistic style of its generated images. Despite its small team size, Midjourney prioritizes independence and agility, relying on external consultants for strategic guidance and focusing on profitability and technology research and development.

Is This the PMF for AI Hardware? European Company Launches AI-Guided Glasses, 1/10th the Price of a Guide Dog

Romanian medical company .lumen has developed AI-guided glasses that utilize pedestrian-oriented AI technology, providing an alternative to guide dogs for visually impaired individuals. These glasses guide direction through a haptic feedback system, offering a more accessible and affordable solution. The device has been tested in multiple countries and is set for a limited release in the fourth quarter of 2024, with the US market targeted for the fourth quarter of 2025. The article also highlights other assistive devices for the visually impaired, such as smart guide dogs, walking aids, and guide shoes, showcasing the wide range of AI applications in improving the quality of life for visually impaired individuals.

The Only Thing That Matters for a Startup is PMF | Z Talk

This article emphasizes that Product-Market Fit (PMF), the alignment of a product with a market need, is the most critical factor determining the success or failure of a startup. Through reviewing the concept of PMF, analyzing cases, and exploring market impacts, the article illustrates the importance of the market. Even with an excellent team and product, a poor market can still lead to failure. The article recommends that startups achieve PMF at all costs, as this is the sole key to startup success.

Entrepreneur's Post-mortem: Mistakes I Made in 'Localization' During Overseas Expansion

This article reflects on the failures in localizing an overseas SaaS product. It emphasizes that localization goes beyond language and pricing adjustments, encompassing detailed work in areas like payment methods, market understanding, and respect for users. The author analyzes why the regional pricing and language localization efforts failed, acknowledging a lack of respect for the local market and a focus on short-term gains. The author also outlines the more meticulous approach to planning and executing localization that they would take if starting over.

Amazon Fully Launches AI Shopping Gateway, Significantly Alters Traffic Landscape and Operational Logic

Amazon's AI shopping assistant, Rufus, leverages the COSMO algorithm to provide personalized product recommendations to users through Q&A interaction. Rufus offers a range of functions, including product detail inquiries, scenario-based recommendations, product comparisons, order inquiries, and answers to open-ended questions. To maintain platform ecosystem balance, Rufus avoids price comparisons. Sellers should tailor their content to Rufus, optimizing Q&A, reviews, and on-site and off-site information to gain more traffic and orders.

Scale AI: A $13.8 Billion Company Solving the Right Problems, Doing the "Lowest-Tech" Business

Scale AI founder, Alexandr Wang, believes that data is a cornerstone of AI development, and high-quality data is crucial for AI model performance. Scale AI establishes an efficient data preparation system, providing high-quality data for AI models and tackling data scarcity issues through innovative methods like human-AI collaboration and synthetic data generation. Scale AI's goal is to become the data platform for the AI industry, supporting the AI ecosystem and driving the realization of AGI.

Deep Dive: AI's Seven Pioneers (OpenAI, Figure, Cognition, Scale, etc.): Innovation and Challenges in the AI Era?

This article brings together the insights of leading figures in the AI industry, exploring the application prospects, challenges, and development trends of AI in fintech, design, product development, artistic creation, and other fields. In the fintech sector, experts emphasize the importance of deeply understanding merchant identities, and AI technology plays a crucial role in credit risk assessment and compliance with payment network regulations. In the design field, AI is seen as an assistant to designers rather than a replacement, particularly in capturing cultural atmospheres and emotional states. In the product development process, iterative design methodologies and rapid prototyping are critical, helping to identify issues and optimize designs. In the realm of artistic creation, generative models have opened up new paradigms, with AI's application in video generation attracting significant attention. Additionally, the article discusses the evaluation of AI models and issues of social trust, highlighting the necessity of transparency and expert review.

a16z Founders Discuss AI Entrepreneurship: AI Provides Services, Not Products; Hardware Entrepreneurship is More Challenging but Offers Greater Competitive Advantage

This article is the second part of a conversation between Andreessen Horowitz founders Marc Andreessen and Ben Horowitz, focusing on AI entrepreneurship. They first discuss the role and selection of CEOs, pointing out that CEOs should possess domain-specific knowledge rather than just management skills. They believe that hardware entrepreneurship, although it involves long cycles, high risks, and high capital requirements, can establish stronger competitive advantages once it succeeds. The article also delves into the AI service model, highlighting that AI companies sell services rather than products, and uses Tesla as an example to emphasize the importance of data-driven AI development. Finally, they look ahead to the integration of AI and robotics, which requires overcoming technical and commercial barriers and relies on massive amounts of data to build a data flywheel.

a16z In-Depth Discussion: Challenges and Opportunities for AI in Healthcare

This article, presented as a discussion, delves into the application of AI in healthcare, covering areas such as data automation, digital contracting, clinical trial optimization, and scheduling mechanism improvement. It emphasizes AI's potential to address staff shortages and its crucial role in enhancing efficiency and reducing costs. The article also examines the need to address data and infrastructure digitization issues and the potential impact of AI on healthcare decision-making and regulatory frameworks.

ModelBest CTO Zeng Guoyang: Large Models Are Expensive, How to Compete and Win?

This article summarizes Tencent Technology's interview with Zeng Guoyang, CTO of ModelBest, discussing the competitive landscape of large models, the advantages of edge models, future development trends, and the importance of data and open-source communities.

Zeng Guoyang pointed out that while large models keep growing in parameter count, their high resource consumption and overfitting issues cannot be ignored. In contrast, edge models can significantly reduce resource consumption and costs while maintaining high performance, making them more suitable for startups and practical applications. He predicts that edge models will surpass cloud models in user interaction and real-time feedback, forming a new edge-cloud collaboration model.

Furthermore, Zeng Guoyang emphasized the key role of data in improving model performance and noted the recognition that domestic innovations in the large model field have received. He believes that open-source communities are crucial for the popularization and application of large model technologies, and that ModelBest will continue to promote the development of edge models and explore multimodal models and model constraints.

Challenges in Integrating Technology and Business, Uncertain ROI: Can Financial Large Models Overcome These Hurdles?

The financial industry is a prime candidate for large model applications, but implementation faces obstacles. Deep integration of technology and business is crucial, demanding careful consideration of cost-benefit analysis and ROI. Building a strong talent system and employing agile iteration are essential. The convergence of large models with traditional AI technologies will revolutionize human-computer collaboration, propelling the financial industry towards a new era of intelligence.

In-Depth Analysis: 10 Most Noteworthy AI Products in the First Half of 2024 (Overseas Edition)

During the first half of 2024, tech giants such as OpenAI, Apple, Google, Microsoft, Meta, and NVIDIA released a wave of new AI products, spanning across multiple domains including multimodal AI, high-performance computing, and open-source models. These releases showcase the dynamic growth and immense potential of AI technology.

OpenAI's GPT-4o achieved breakthroughs in multimodal support, response speed, and multilingual processing. Apple unveiled Apple Intelligence, leveraging high-performance generative models to deliver a system-level personal assistant. Google's Project Astra aims to develop universal AI agents. Microsoft introduced Copilot Plus PC and the new Surface Pro, significantly enhancing AI performance. Meta open-sourced the Llama 3 model, enabling multi-platform applications. NVIDIA released the Blackwell chip, offering higher performance and lower costs for large language models.

Furthermore, Mistral's Codestral-22B code model, Anthropic's Claude 3.5 Sonnet multimodal model, Adobe's GenStudio marketing platform, and Salesforce's Einstein Copilot enterprise-level chatbot all demonstrated innovative applications of AI technology across various fields. The introduction of these AI products will drive AI technology adoption across a wider range of scenarios, bringing transformative changes to various industries.