BestBlogs.dev Highlights Issue #15

Dear friends,

👋 Welcome to this edition of curated articles from BestBlogs.dev!

🚀 This issue spotlights the latest breakthroughs, innovative applications, and industry dynamics in the AI field. We bring you essential insights on model advancements, development tools, product innovations, and market strategies. Join us as we explore the cutting edge of AI development!

🧠 AI Models and Development Technology: Performance Leaps, Streamlined Development

- Alibaba's Tongyi team open-sources Qwen2-Math model, outperforming mainstream models in mathematical evaluations; MiniCPM-V 2.6 achieves state-of-the-art performance in multimodal tasks, featuring real-time video understanding.

- OpenAI introduces a ChatGPT API with JSON structured output, significantly enhancing output accuracy; DeepSeek API implements hard disk caching, substantially reducing service costs.

- PaddleNLP 3.0 and GitHub Model Platform launch, offering developers comprehensive solutions for large language model development and access to industry-leading AI models.

💡 AI Product Innovation: Real-World Applications, Enhanced User Experiences

- Figure AI unveils Figure 02, a humanoid robot integrating cutting-edge technologies, showcasing AI's potential in physical robotics.

- Perplexity AI revolutionizes user search experience by merging LLM technology with search engines; Lepton AI introduces real-time voice interaction, elevating AI assistant user experiences.

- Several companies debut AI video generation models, facing technological and commercialization challenges; AI transcription company Notta achieves rapid growth through strategic targeting of the Japanese market.

🌐 AI Industry Dynamics: Navigating Opportunities and Challenges

- The departure of key OpenAI executives raises questions about the company's future direction, highlighting challenges in AI ethics and corporate governance.

- Scientists oppose California's AI restriction bill, underscoring the delicate balance between regulation and innovation in AI.

- Spatial computing technology merges with AI, opening new frontiers; AI-enabled devices (like AI phones and PCs) emerge as development hotspots, while setbacks with standalone hardware like AI Pin reveal AI's current limitations in certain applications.

🔗 Intrigued to learn more? Click through to read the full articles for deeper insights!

Table of Contents

- Qwen2-Math Open Source! Initial Exploration of Mathematical Synthetic Data Generation!

- MiniCPM-V 2.6: A Mobile-Ready "GPT-4V" with Multi-Image and Video Understanding on the Edge

- Zero-Error Precision: OpenAI Announces API Support for Structured Output, 100% JSON Accuracy

- DeepSeek API Innovatively Adopts Disk Caching, Reducing Prices by an Order of Magnitude

- A Comprehensive Overview of LLM Alignment Techniques: RLHF, RLAIF, PPO, DPO, and More

- Eight Questions to Debunk the Transformer's Secrets

- A RoCE network for distributed AI training at scale

- Introducing GitHub Models: A new generation of AI engineers building on GitHub

- Spring AI Embraces OpenAI's Structured Outputs: Enhancing JSON Response Reliability

- PaddleNLP 3.0 Major Release: A Ready-to-Use Industrial-Grade Large Language Model Development Toolkit

- Andrew Ng's Letter: Programming Has Never Been Easier

- Introducing TextImage Augmentation for Document Images

- Dynamic few-shot examples with LangSmith datasets

- Industrial Quality Inspection in the Era of Large Language Models: Technological Innovation and Practical Exploration

- UX for Agents, Part 2: Ambient

- Improve AI assistant response accuracy using Knowledge Bases for Amazon Bedrock and a reranking model | Amazon Web Services

- LlamaIndex Newsletter 2024-08-06

- Recording the Installation and Deployment Process of the QWen2-72B-Instruct Model

- Andrew Ng Teaches, LLM as 'Teaching Assistant', Python Programming Course for Beginners Launches

- Figure AI Unveils Advanced Humanoid Robot: Figure 02

- The next big AI-UX trend—it’s not Conversational UI

- Z Product | Achieved $2 Million ARR in One Year, This AI Podcast Transcription Is Dominating the New Frontier at a16z AI Scribe

- Perplexity AI: User Understanding and Product Excellence Outweigh Model Power

- Dissecting SearchGPT: Unveiling the Barriers, Breakthroughs, and Future of AI-Powered Search | Jiazi, a Technology News Platform

- AI capabilities and controls to power up your workday with Chrome Enterprise

- Embodied Intelligence Companies Navigating Uncharted Territory Seek 'Certainty'

- Ten Questions on Gamma: How the Breakthrough AI Presentation Tool King Was Trained?

- AI Products Facing Monetization Challenges in Overseas Markets

- Practical Evaluation of 4 Leading Chinese AI Video Generation Models: Falling Short of Expectations, with Significant Differences

- Practical Sharing | Calculating LTV for AI Subscription Users

- What is Good Design in the Age of AI? How Will AI Influence Experience Design?

- Achieving AI Transcription Success in Japan, Reaching Nearly $10 Million ARR

- Tencent AI Reader: Deep Reading for Long Texts, Enhancing Professional Reading Efficiency

- Fu Sheng's Innovation Formula: How Can Chinese Robotics Overtake on a Curve?

- Character.AI Founders Depart, Industry Enters New Phase

- OpenAI's Leadership Shakeup: Is a 'Tyrant' at the Helm Leading the Company Towards a Dangerous Future?

- Five Insights from Jobs' 55-Minute Speech 41 Years Ago, Still Relevant in the AI Era

- Elon Musk's August Latest 20,000-Word Interview: xAI, Neuralink, and the Future of Humanity (with Edited Video)

- Interview with 'Spatial Computing' Author: If Jobs Were Alive, Vision Pro Would Be Cheaper | The Epoch

- AI Phones, AIPC: A False Proposition?

- Andrew Ng's Letter: How to Brainstorm AI Startup Ideas

- Bengio's Unveiled Plans: World Model + Mathematical Proof to Ensure AI System Functionality

- This AI Standalone Hardware: Returns Outpace Sales

- AI in Action | AI Creates a Solo Living Girl's Life Vlog, Gaining Tens of Thousands of Likes in 3 Days

Qwen2-Math Open Source! Initial Exploration of Mathematical Synthetic Data Generation!

The Alibaba Tongyi team has open-sourced Qwen2-Math, its next-generation mathematical model, in three parameter sizes. The base models are pre-trained on a mathematical corpus, and the instruction-tuned versions are further optimized with a reward model and rejection sampling. According to the article, Qwen2-Math surpasses mainstream models in mathematical evaluations, making it the most advanced specialized mathematical model. The article discusses the advantages of synthetic data generation, such as addressing privacy concerns and producing better-structured data, provides model download and inference guidelines, and showcases methods for generating mathematical data for education. The model offers high-quality data support for mathematical modeling, educational technology, and model fine-tuning.

MiniCPM-V 2.6: A Mobile-Ready "GPT-4V" with Multi-Image and Video Understanding on the Edge

MiniCPM-V 2.6, with its compact 8B parameter design, sets a new standard for multimodal tasks like single-image, multi-image, and video understanding, outperforming GPT-4V. This model pioneers the integration of real-time video understanding, multi-image joint understanding, and multi-image OCR into an edge-side model, significantly enhancing the application potential and user experience of edge AI. MiniCPM-V 2.6 excels in energy efficiency, memory usage, and inference speed, requiring only 6GB of memory and achieving an inference speed of 18 tokens/s. It supports multiple languages and platforms, demonstrating its broad applicability and high efficiency in practical applications. The model's open-source release will further drive the adoption and development of multimodal AI in mobile devices, AR/VR, and other fields. However, challenges remain in areas like model compression, data security, and privacy protection, requiring further exploration of more efficient and secure solutions.

Zero-Error Precision: OpenAI Announces API Support for Structured Output, 100% JSON Accuracy

OpenAI's latest ChatGPT API update introduces support for structured output in JSON format, empowering developers to define JSON schemas for standardizing model output. This ensures data consistency and ease of use, addressing the long-standing challenge of LLM output being difficult to parse and utilize.

OpenAI uses constrained decoding to guarantee that model output adheres to the defined schema, achieving a 100% schema matching rate. This ensures the reliability and accuracy of the generated data.

Furthermore, the new gpt-4o-2024-08-06 model boasts optimized cost efficiency, with a 50% reduction in input costs and a 33% reduction in output costs. This makes the API even more appealing to developers, offering tangible economic benefits.

The structured output feature unlocks a wide range of applications, including data extraction, UI generation based on user intent, and building multi-step intelligent workflows. This empowers AI developers to create more robust and user-friendly applications.

While the structured output feature offers significant advantages, practical implementation may face challenges. These include designing appropriate schemas to accommodate diverse data structures and requirements, and addressing potential errors or biases in model output. Developers need to adapt and optimize their approaches based on specific application scenarios.
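To make the schema idea concrete, here is a minimal sketch of what a structured-output request body might look like. The `response_format` shape follows OpenAI's published `json_schema` format; the event schema, its field names, and the helper functions are illustrative assumptions. The sketch only builds and checks data locally and does not call the API.

```python
import json

# Hypothetical schema for illustration: a calendar event extracted from text.
EVENT_SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "date": {"type": "string"},
        "attendees": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["title", "date", "attendees"],
    "additionalProperties": False,
}


def build_request(user_text: str) -> dict:
    """Build a chat-completions request body that asks for schema-conforming JSON."""
    return {
        "model": "gpt-4o-2024-08-06",
        "messages": [
            {"role": "system", "content": "Extract the event details."},
            {"role": "user", "content": user_text},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "event", "strict": True, "schema": EVENT_SCHEMA},
        },
    }


def missing_fields(reply_json: str, schema: dict) -> list:
    """Return required top-level keys absent from a reply.

    This is a shallow sanity check for illustration, not full schema validation.
    """
    data = json.loads(reply_json)
    return [key for key in schema["required"] if key not in data]
```

Even with strict schema matching, a client-side check like `missing_fields` can still be useful for replies that come from older models or from refusals.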

DeepSeek API Innovatively Adopts Disk Caching, Reducing Prices by an Order of Magnitude

DeepSeek API addresses the challenge of high repetition rates in user inputs through disk caching technology, reducing service latency and costs. The cost for cached data is only 0.1 yuan per million tokens, leading to a substantial price drop for large models. Additionally, this service does not require users to modify their code or switch interfaces; the system automatically bills based on actual cache hits.
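The effect of cache hits on a bill can be worked through with a small calculation. In this sketch the 0.1 yuan hit price comes from the summary above, while the 1.0 yuan price for uncached input is an assumption chosen to match the "order of magnitude" in the title; the function name is hypothetical.

```python
def input_cost_yuan(total_tokens: int, cached_tokens: int,
                    hit_price: float = 0.1, miss_price: float = 1.0) -> float:
    """Input cost in yuan; both prices are per million tokens.

    miss_price is an assumed figure for illustration, not taken from the article.
    """
    if not 0 <= cached_tokens <= total_tokens:
        raise ValueError("cached_tokens must be between 0 and total_tokens")
    uncached_tokens = total_tokens - cached_tokens
    return (cached_tokens * hit_price + uncached_tokens * miss_price) / 1_000_000
```

Under these assumed prices, a one-million-token workload in which 80% of the input hits the cache would cost about 0.28 yuan instead of 1 yuan.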

A Comprehensive Overview of LLM Alignment Techniques: RLHF, RLAIF, PPO, DPO, and More

This article provides a detailed overview of techniques used to align large language models (LLMs) with human values, including Reinforcement Learning from Human Feedback (RLHF), Reinforcement Learning from AI Feedback (RLAIF), Proximal Policy Optimization (PPO), and Direct Preference Optimization (DPO). The article emphasizes the importance of alignment, explaining how these techniques help models better reflect human values and intentions. It then examines the applications and challenges of these techniques in enhancing model performance and reducing alignment costs, noting that InstructGPT models outperform GPT-3 on helpfulness and toxicity despite having significantly fewer parameters. The article also discusses current challenges in alignment, such as catastrophic forgetting and the inefficiency of running supervised fine-tuning (SFT) and alignment one after the other, and it weighs the advantages and disadvantages of PAFT, a parallel fine-tuning approach, and Odds Ratio Preference Optimization (ORPO).
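As one concrete example from this family, the Direct Preference Optimization (DPO) loss for a single preference pair can be sketched in a few lines. The function and parameter names are assumptions; the inputs are the summed log-probabilities that the trained policy and a frozen reference model assign to the preferred ("chosen") and dispreferred ("rejected") responses.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair: -log sigmoid(beta * margin difference)."""
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) equals log(1 + exp(-x)); branch to stay stable for large |x|.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))
```

The loss falls below `log(2)` once the policy favors the chosen response more strongly than the reference model does, which is why no separate reward model or reinforcement learning loop is needed.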

Eight Questions to Debunk the Transformer's Secrets

Sakana AI dives into the Transformer's secrets through a series of experiments, particularly focusing on the information flow in pre-trained Transformers. By drawing parallels between Transformer layers and a painter's workflow, the study proposes and verifies several hypotheses, including whether each layer uses the same representation space, whether all layers are necessary, whether intermediate layers have the same function, whether layer order is important, and whether layers can run in parallel. The experimental results show that intermediate layers share a representation space but perform different functions. Layer order has a certain impact on model performance, but parallel execution and iterative processes can also effectively improve performance. The study also finds that randomizing layer order and parallel execution have the least impact on model performance, while repeating a single layer has the most severe impact.

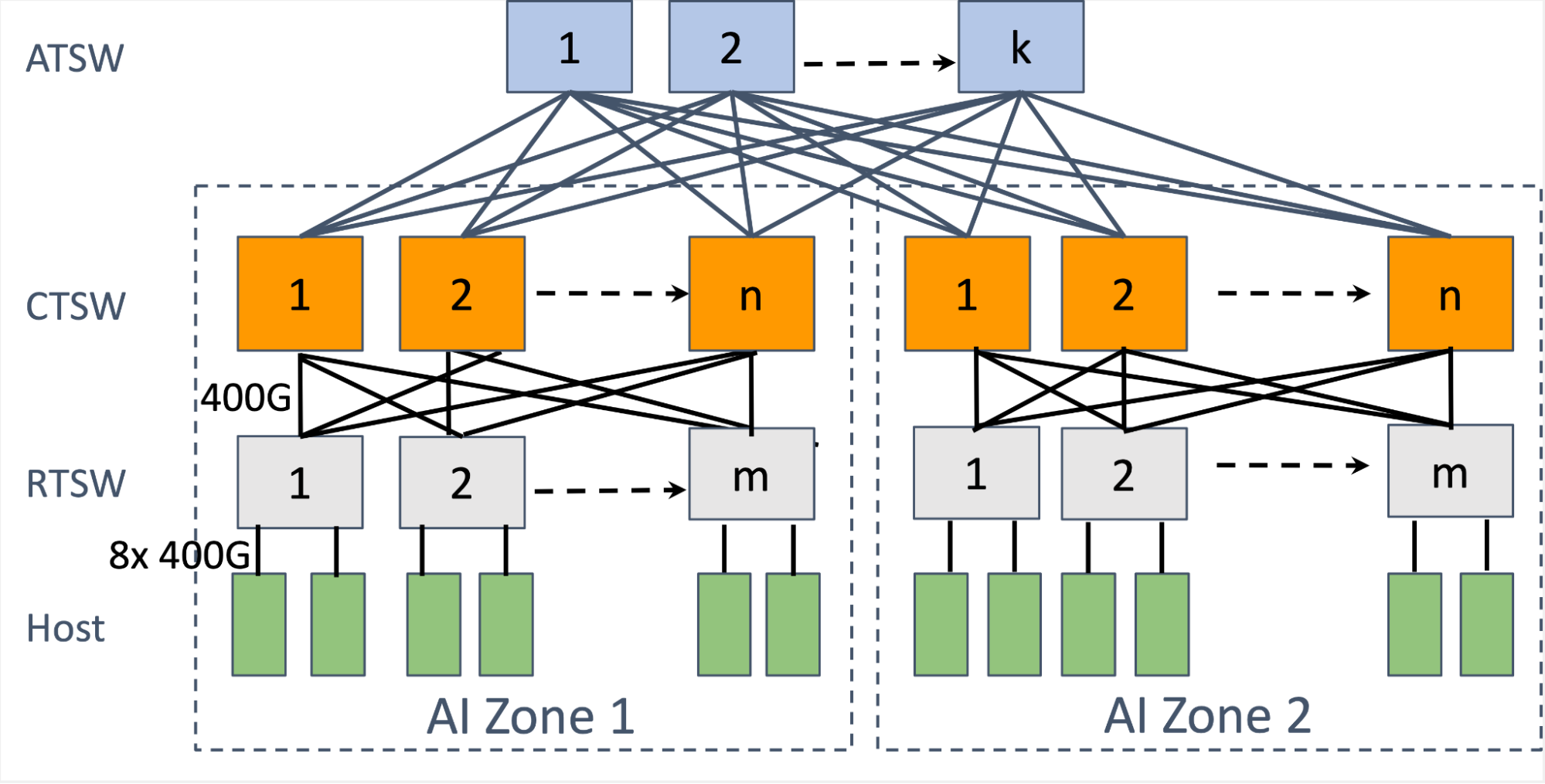
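The layer interventions described in the summary (skipping, reordering, and running middle layers in parallel) can be illustrated with a toy model. This is a sketch under strong assumptions: each "layer" is a simple residual function on a list of floats rather than a real Transformer block, and all function names are hypothetical.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]


def make_layer(scale: float) -> Layer:
    """Toy residual layer x + scale * x**2, standing in for a Transformer block."""
    return lambda x: [v + scale * v * v for v in x]


def run_sequential(layers: Sequence[Layer], x: Vector) -> Vector:
    """Baseline: apply every layer in order."""
    for layer in layers:
        x = layer(x)
    return x


def run_skip(layers: Sequence[Layer], x: Vector, start: int, end: int) -> Vector:
    """Drop layers[start:end] entirely."""
    return run_sequential(list(layers[:start]) + list(layers[end:]), x)


def run_reversed(layers: Sequence[Layer], x: Vector, start: int, end: int) -> Vector:
    """Apply layers[start:end] in reverse order."""
    middle = list(layers[start:end])[::-1]
    return run_sequential(list(layers[:start]) + middle + list(layers[end:]), x)


def run_parallel(layers: Sequence[Layer], x: Vector, start: int, end: int) -> Vector:
    """Feed the same input to layers[start:end] and average their outputs."""
    x = run_sequential(layers[:start], x)
    outputs = [layer(x) for layer in layers[start:end]]
    x = [sum(values) / len(outputs) for values in zip(*outputs)]
    return run_sequential(layers[end:], x)
```

Comparing each variant's output with `run_sequential` on the same input mirrors the shape of the experiment: the smaller the deviation, the less that intervention disturbs the model.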

A RoCE network for distributed AI training at scale

Meta has built a robust RoCE (RDMA over Converged Ethernet) network to support large-scale distributed AI training, addressing the increasing demands for computational density and scale. The network, detailed in a paper presented at ACM SIGCOMM 2024, separates frontend (FE) and backend (BE) networks to optimize data ingestion and training processes. The BE network, based on RoCEv2, connects thousands of GPUs in a non-blocking architecture, providing high bandwidth and low latency. Meta has evolved its network from a simple star topology to a two-stage Clos topology, known as an AI Zone, to enhance scalability and availability. The network design includes strategies for routing, such as path pinning and queue pair scaling, and congestion control mechanisms like receiver-driven traffic admission. These innovations aim to balance network traffic and optimize performance for AI workloads, particularly for large language models like Llama 3.1.

Introducing GitHub Models: A new generation of AI engineers building on GitHub

GitHub has launched GitHub Models, a platform designed to empower over 100 million developers to become AI engineers by providing easy access to a variety of industry-leading AI models. This initiative aims to democratize AI development, making it more accessible to a broader audience. The platform includes a built-in playground where developers can experiment with models like Llama 3.1, GPT-4o, and Mistral Large 2, adjusting prompts and parameters for free. Additionally, GitHub Models integrates seamlessly with Codespaces and VS Code, allowing developers to transition from experimentation to production with minimal friction. Azure AI supports the production deployment, offering enterprise-grade security and global availability. The platform also emphasizes privacy, ensuring that no data is shared with model providers for training purposes. GitHub Models is set to expand, with plans to include more models in the future, and it aligns with GitHub's broader vision of fostering a creator network for the age of AI, contributing to the advancement towards artificial general intelligence (AGI). The platform also aims to support AI education, as seen with its integration into Harvard's CS50 course.

Spring AI Embraces OpenAI's Structured Outputs: Enhancing JSON Response Reliability

This article announces the integration of OpenAI's Structured Outputs feature into Spring AI, a popular Java development framework. This integration allows developers to define the expected structure of AI-generated responses using JSON schemas, ensuring type safety, handling refusals programmatically, and simplifying prompt engineering. Spring AI offers flexible configuration options, allowing developers to set response formats programmatically or via application properties. Additionally, the BeanOutputConverter utility simplifies the integration by automatically generating JSON schemas from Java domain objects and converting responses into corresponding instances. This enhancement significantly improves the reliability and maintainability of AI-driven applications built with Spring AI, allowing developers to focus on building innovative features rather than handling unpredictable AI outputs. The article also hints at future plans to integrate OpenAI's Structured Outputs with Spring AI's model-agnostic utilities for broader applicability.

PaddleNLP 3.0 Major Release: A Ready-to-Use Industrial-Grade Large Language Model Development Toolkit

Baidu AI has developed PaddleNLP 3.0, a Large Language Model (LLM) development toolkit based on the PaddlePaddle framework 3.0. It provides a comprehensive solution covering network development, pre-training, fine-tuning and alignment, model compression, and inference deployment. Automatic Parallelism simplifies network development, reducing the amount of distributed core code and supporting full-process solutions for various mainstream models. PaddleNLP 3.0 also offers high-performance fine-tuning and alignment solutions, optimizing training efficiency with FlashMask (a high-performance variable-length attention masking technique) and Zero Padding (a data flow optimization technique that minimizes wasted computation due to inefficient padding). It supports long-text training and Multi-hardware Adaptation, including NVIDIA GPU, Kunlun XPU, Ascend NPU, and more. The toolkit features a Unified Checkpoint storage scheme, enabling rapid saving and recovery of model parameters. The release of PaddleNLP 3.0 marks a significant advancement in LLM development tools, providing strong technical support for industrial-grade applications.

Andrew Ng's Letter: Programming Has Never Been Easier

Professor Andrew Ng announces 'AI Python for Beginners', a free series of short courses that teaches beginners in any field to program with the help of generative AI. The courses teach how to use large language models and other AI APIs to build powerful programs, and how to use AI-powered programming assistants to accelerate learning. The content tracks two major ways AI is changing programming: AI helping programs and AI helping programmers. Learners write code that uses AI to carry out tasks, and use AI tools to explain, write, and debug code. The aim is to encourage more people to learn programming and to boost productivity and creativity, whether in marketing, finance, journalism, or other fields.

Introducing TextImage Augmentation for Document Images

The article presents a tutorial on a novel data augmentation technique for document images, developed in collaboration with Albumentations AI. The motivation behind this innovation is the need for effective data augmentation techniques that preserve the integrity of text while enhancing the dataset, particularly for fine-tuning Vision Language Models (VLMs) with limited datasets. Traditional image transformations often negatively impact text extraction accuracy. The new pipeline introduced handles both images and text within them, providing a comprehensive solution for document images. This multimodal approach modifies both the image content and the text annotations simultaneously. The augmentation process involves randomly selecting lines within the document, applying text augmentation methods like Random Insertion, Deletion, Swap, and Stopword Replacement, and then blacking out parts of the image where the text is inserted and inpainting them. The article also provides installation instructions, visualization examples, and details on how to combine this technique with other transformations. The conclusion highlights the potential of this technique to generate diverse training samples and enhance document image processing workflows.

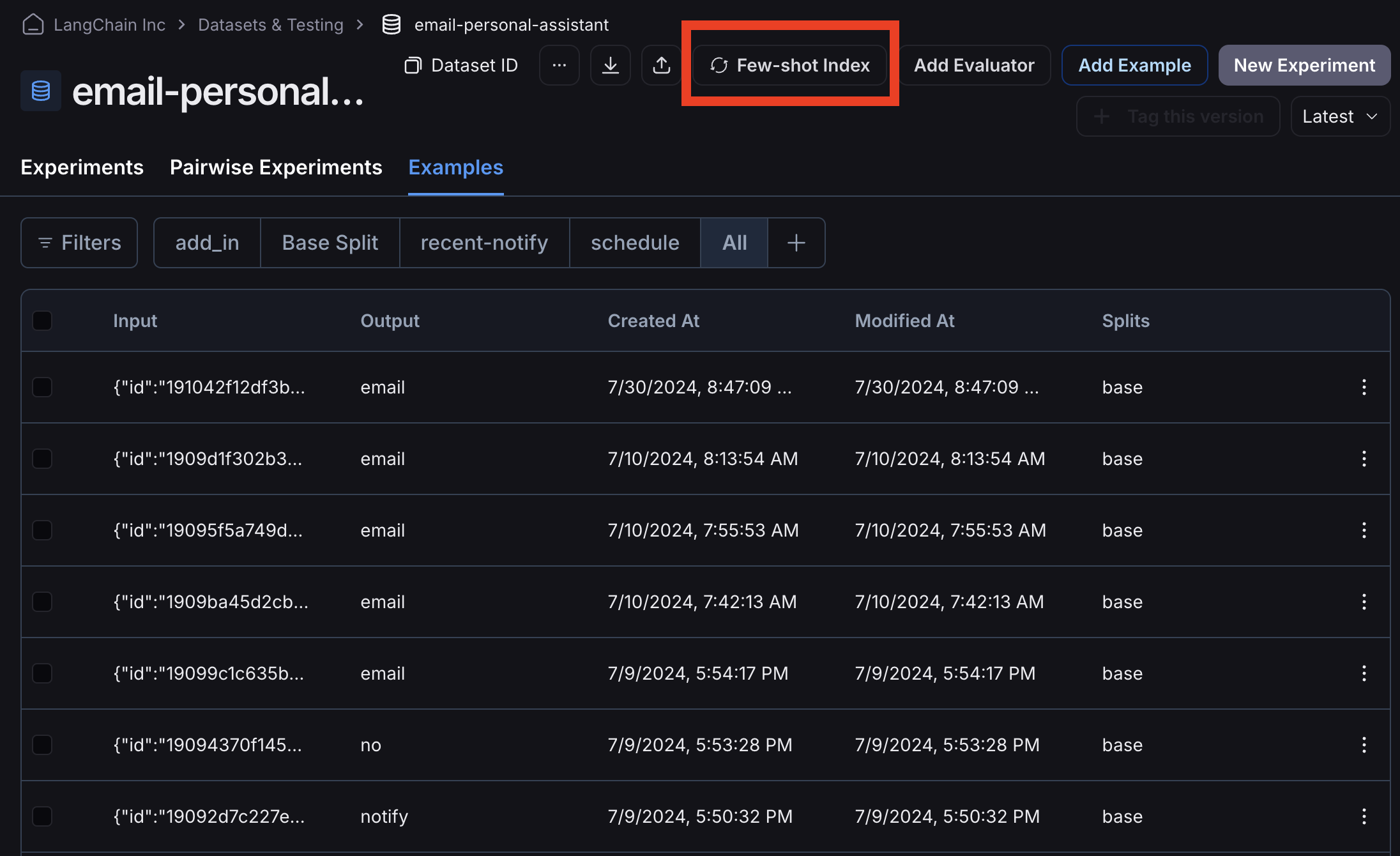
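The text half of the pipeline can be sketched with two of the operations named above, random swap and random deletion. This is a stand-alone illustration with hypothetical function names, not the Albumentations API, and it leaves out the image half (blacking out the region and inpainting the new text).

```python
import random
from typing import List


def random_swap(words: List[str], rng: random.Random) -> List[str]:
    """Swap two randomly chosen positions and return a new list."""
    if len(words) < 2:
        return list(words)
    i, j = rng.sample(range(len(words)), 2)
    out = list(words)
    out[i], out[j] = out[j], out[i]
    return out


def random_deletion(words: List[str], rng: random.Random, p: float = 0.2) -> List[str]:
    """Drop each word with probability p, keeping at least one word if any exist."""
    if not words:
        return []
    kept = [w for w in words if rng.random() >= p]
    return kept if kept else [rng.choice(words)]


def augment_line(line: str, seed: int = 0) -> str:
    """Apply one randomly chosen text augmentation to a line of document text."""
    rng = random.Random(seed)
    operation = rng.choice([random_swap, random_deletion])
    return " ".join(operation(line.split(), rng))
```

Seeding the generator keeps augmentations reproducible, which helps when the augmented text has to stay in sync with the matching image edit.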

Dynamic few-shot examples with LangSmith datasets

This article introduces LangSmith's new dynamic few-shot example selectors, a feature designed to optimize LLM application performance. The article highlights the limitations of static few-shot prompting as applications become more complex, and while fine-tuning offers an alternative, it presents challenges related to complexity, updating, infrastructure, and personalization. Dynamic few-shot prompting, as presented in this article, allows for the selection of a small set of relevant examples tailored to user input, leading to improved performance compared to static datasets. LangSmith streamlines the implementation of this technique, enabling users to index datasets and retrieve relevant examples dynamically with ease. The article positions dynamic few-shot prompting as a more practical and adaptable solution compared to fine-tuning, especially for applications requiring personalization and rapid iteration.
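The core idea, picking the few examples most similar to the current input, can be sketched without any service. The example below uses bag-of-words cosine similarity as a stand-in for the indexed search LangSmith provides; the function names and the prompt layout are assumptions, not LangSmith's API.

```python
import math
from collections import Counter
from typing import List, Tuple

Example = Tuple[str, str]  # (input, output)


def _bag_of_words(text: str) -> Counter:
    return Counter(text.lower().split())


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_examples(query: str, dataset: List[Example], k: int = 2) -> List[Example]:
    """Return the k examples whose inputs are most similar to the query."""
    query_bag = _bag_of_words(query)
    ranked = sorted(dataset, key=lambda ex: _cosine(query_bag, _bag_of_words(ex[0])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, dataset: List[Example], k: int = 2) -> str:
    """Assemble a few-shot prompt from the selected examples plus the new input."""
    blocks = [f"Input: {inp}\nOutput: {out}" for inp, out in select_examples(query, dataset, k)]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)
```

Because the examples are chosen per request, adding a new example to the dataset changes behavior immediately, with no retraining step.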

Industrial Quality Inspection in the Era of Large Language Models: Technological Innovation and Practical Exploration

This article delves into the exploration and implementation of industrial AI quality inspection by Tencent Cloud and YouTu Lab. Tencent, drawing upon its deep learning expertise, has successfully addressed long-standing industry challenges, such as complex defect detection in mobile phone components, by integrating automated optical inspection (AOI) equipment. The article highlights the paramount importance of data standard alignment and unified defect definitions, and analyzes the core challenges of industrial AI quality inspection, including defect location, pixel, type, and quantification. Furthermore, the article explores the new paradigm of industrial AI quality inspection in the age of large language models, which enables rapid application without training or with zero samples through technologies like Visual Prompt and multimodal models. It envisions the significant role this paradigm will play in future manufacturing transformation.

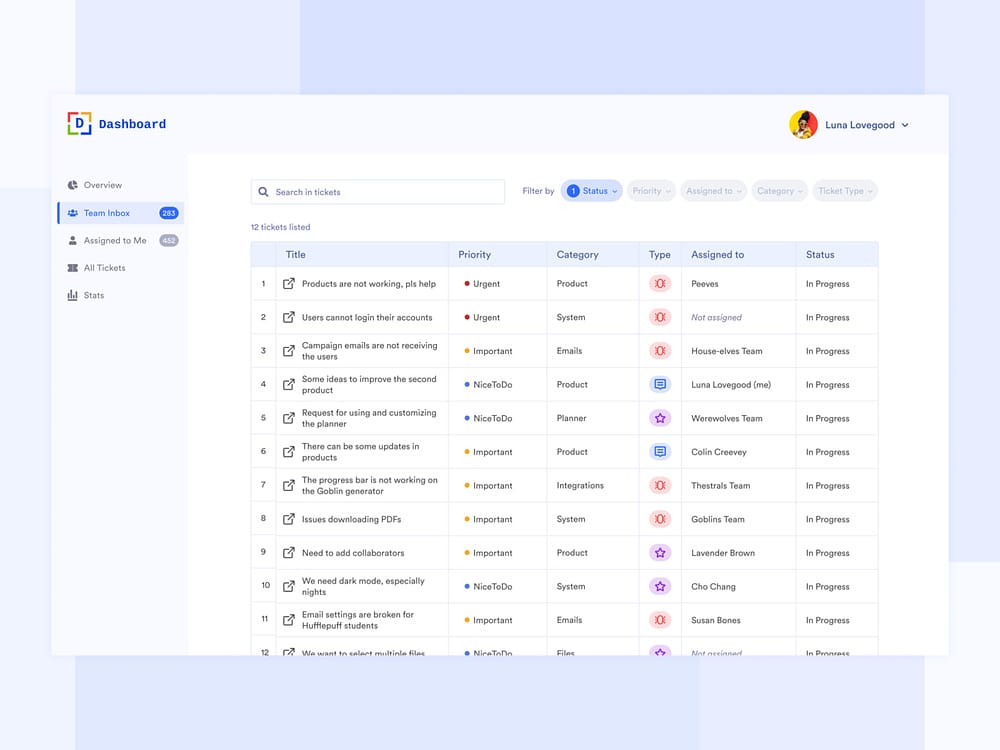

UX for Agents, Part 2: Ambient

The article, published on the LangChain Blog, delves into the concept of ambient agents, which operate in the background to handle tasks autonomously, enhancing user experience and scaling human capabilities. The author argues that for agentic systems to reach their potential, a shift towards background operation is necessary, allowing users to be more tolerant of longer completion times and enabling multiple agents to handle tasks simultaneously. The article emphasizes the importance of building trust with users by showing them the steps the agent is taking and allowing them to correct the agent if necessary. This approach moves the human from being 'in-the-loop' to 'on-the-loop,' allowing for greater observability and control. The article also discusses how agents can ask for human help when needed, using examples like an email assistant that integrates with Slack. The conclusion highlights the author's optimism about ambient agents and their potential to scale human capabilities, with the LangGraph project serving as a practical implementation of these concepts.

Improve AI assistant response accuracy using Knowledge Bases for Amazon Bedrock and a reranking model | Amazon Web Services

AI chatbots and virtual assistants face challenges in generating high-quality and accurate responses. This article explores how to address these challenges and improve response accuracy using Retrieval Augmented Generation (RAG) and reranking models. RAG combines knowledge base retrieval with generative models, providing more relevant and coherent output by retrieving pertinent information from a database before generating a response. The article offers a detailed solution overview, explaining how RAG uses vector search for speed and scalability, and how a reranking model can further improve relevance by re-scoring the retrieved candidates so that only the best matches are passed to the generative model.
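A two-stage retrieve-then-rerank pipeline can be sketched as follows. Both scoring functions are deliberately simple stand-ins: token overlap replaces vector search, and `toy_score` is a hypothetical placeholder for a real reranking model. None of the names come from the Amazon Bedrock API.

```python
from typing import Callable, List


def retrieve(query: str, corpus: List[str], n: int) -> List[str]:
    """First stage: rank passages by shared tokens (a stand-in for vector search)."""
    query_tokens = set(query.lower().split())
    return sorted(
        corpus,
        key=lambda doc: len(query_tokens & set(doc.lower().split())),
        reverse=True,
    )[:n]


def rerank(query: str, candidates: List[str],
           score: Callable[[str, str], float], k: int) -> List[str]:
    """Second stage: re-score each (query, passage) pair and keep the top k."""
    return sorted(candidates, key=lambda doc: score(query, doc), reverse=True)[:k]


def toy_score(query: str, doc: str) -> float:
    """Hypothetical reranker: share of the passage's tokens that appear in the query."""
    query_tokens = set(query.lower().split())
    tokens = doc.lower().split()
    return sum(token in query_tokens for token in tokens) / len(tokens) if tokens else 0.0
```

The first stage is cheap and broad; the second stage is more selective, so it only runs on the short candidate list.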

LlamaIndex Newsletter 2024-08-06

LlamaIndex's latest update brings exciting new features, including LlamaIndex Workflows and dynamic retrieval in LlamaCloud. Workflows is an event-driven architecture for building multi-agent applications, supporting batching, async operations, and streaming. The dynamic retrieval feature enhances QA assistants, enabling both chunk-level and file-level document retrieval based on query similarity. Additionally, LongRAG is now available as a LlamaPack in LlamaIndex, utilizing larger document chunks and long-context LLMs for more effective synthesis. To support these new features, LlamaIndex is offering tutorials and upcoming webinars to help users make the most of these developments.

Recording the Installation and Deployment Process of the QWen2-72B-Instruct Model

- Environment Configuration: Install Conda and the necessary environment dependencies, including Python, PyTorch, Transformers, vLLM, and CUDA.

- Model Download: Download the QWen2-72B-Instruct model from Hugging Face or ModelScope.

- Dependency Installation: Install PyTorch, vLLM, and other dependency packages, and configure environment variables.

- Model Validation: Use the scripts provided by vLLM to validate model availability.

- Service Startup: Use vLLM to wrap the model service into an OpenAI-style HTTP service and run it via the tmux command.

- Summary: Emphasize the advantages of the vLLM framework in simplifying the deployment process of large models.
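Once the service is running, any OpenAI-style client can talk to it. The sketch below builds a chat request with the Python standard library only. The base URL (port 8000 on localhost) and the model identifier are assumptions that depend on how the server was started; the function names are hypothetical.

```python
import json
import urllib.request


def build_chat_request(prompt: str,
                       base_url: str = "http://localhost:8000/v1",
                       model: str = "Qwen/Qwen2-72B-Instruct") -> urllib.request.Request:
    """Build a POST request for an OpenAI-style /chat/completions endpoint."""
    body = {
        "model": model,  # assumed name; must match the model the server is serving
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first reply (requires a running server)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Calling `send(build_chat_request("Hello"))` against a live server is a quick smoke test that the deployment is answering requests.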

Andrew Ng Teaches, LLM as 'Teaching Assistant', Python Programming Course for Beginners Launches

Professor Andrew Ng, an AI scholar from Stanford University, has launched a new course for beginners – AI Python for Beginners, aiming to teach Python programming from scratch. The course is divided into four parts, covering Python basics, automation tasks, data and document processing, and extending Python functionality with packages and APIs. Students will learn fundamental programming concepts such as variables, functions, loops, and data structures, and apply this knowledge by building practical projects like custom recipe generators and smart to-do lists. The course features an AI chatbot as a teaching assistant, providing instant feedback and personalized guidance to enhance learning efficiency. This AI chatbot is powered by a Large Language Model (LLM). By the end of the course, students will be able to write Python scripts that interact with large language models, analyze data, and even create simple AI applications.

Figure AI Unveils Advanced Humanoid Robot: Figure 02

Silicon Valley startup Figure has unveiled its latest humanoid robot, Figure 02, integrating multiple advanced technologies, including real-time voice dialogue, visual language models (VLM), high-performance batteries, and advanced mechanical hand design. Figure 02 has achieved a major technological breakthrough and has been tested in BMW's factory, demonstrating its enormous potential in the industrial field. However, humanoid robot technology still faces challenges such as safety and cost control. In the future, Figure will continue to collaborate with OpenAI to develop more advanced AI models, promoting the application of humanoid robots in more fields.

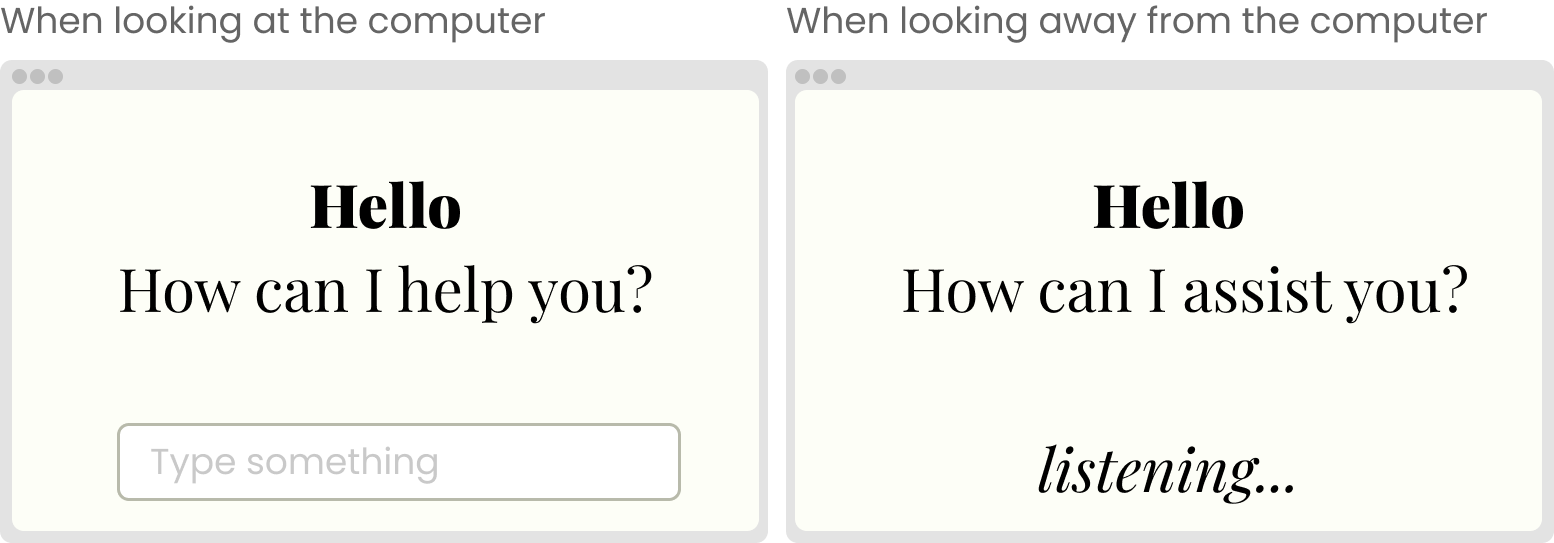

The next big AI-UX trend—it’s not Conversational UI

Current mainstream conversational user interfaces suffer from user confusion and poor tracking of application state, and they are a weak fit for tasks such as storing and editing drafts, travel planning, and research management. The article introduces the concept of aiOS, an AI-driven operating system with four core values:

- Personalized services: Bringing all services to the user.

- App interoperability: Seamless communication between apps, such as dragging a podcast into a notes app.

- Context-based services: Providing services based on user environment and behavior.

- Multimodal input-output: AI understanding and processing various input and output methods.

The article also predicts four major AI innovation trends in UX by 2030: dynamic interfaces, ephemeral interfaces, aiOS, and screen-less UX, emphasizing the importance of aiOS as a future direction.

Z Product | Achieved $2 Million ARR in One Year, This AI Podcast Transcription Is Dominating the New Frontier at a16z AI Scribe

Castmagic, founded in 2023, is an AI transcription and recording tool platform headquartered in Nevada, USA. The company has revolutionized podcast transcription and management using AI technology, providing AI content tools for various scenarios including podcast production, video platform content transcription, and meeting records. Castmagic's core competitiveness lies in its deep audio content processing capabilities, accurately and quickly extracting valuable content and offering innovative solutions to accelerate content distribution. Despite showing significant potential, the company has not yet received external financing.

Perplexity AI: User Understanding and Product Excellence Outweigh Model Power

This article explores Perplexity AI's search technology and its differentiation from Google, emphasizing the importance of user understanding and delivering high-quality answers. Perplexity combines Large Language Model (LLM) technology with search engines to provide answers with verifiable sources, aiming to revolutionize how users find information online. The article also discusses Perplexity's challenges and strategies in real-time information integration, user interface design, and advertising, as well as how to integrate ads without compromising user experience.

Dissecting SearchGPT: Unveiling the Barriers, Breakthroughs, and Future of AI-Powered Search | Jiazi, a Technology News Platform

OpenAI's SearchGPT is an AI-powered search product that has established a data advantage by integrating traditional search engine APIs and collaborating with media to obtain high-quality data. To enhance user experience, SearchGPT employs low latency, multi-turn dialogue, and multimodal presentation, resulting in increased interactivity.

In terms of the business model, SearchGPT faces the challenge of covering costs with advertising revenue and needs to explore new profit models. Additionally, SearchGPT utilizes multimodal technology to provide richer and more understandable search results. Furthermore, SearchGPT implements automated workflows through AI Agents, offering services beyond traditional search engines.

Overall, SearchGPT has innovated in technology, user experience, and business model, positioning itself to potentially dominate the AI-powered search market.

AI capabilities and controls to power up your workday with Chrome Enterprise

The article from the Google Cloud Blog discusses the integration of AI capabilities into Chrome Enterprise to improve workplace efficiency and provide IT teams with necessary controls. It highlights several new AI features such as Google Lens for instant searches, Gemini for quick chat assistance, AI-powered web history retrieval, Tab Compare for comparing across tabs, and writing assistance with 'Help me write.' Additionally, it emphasizes the importance of AI controls and governance, announcing upcoming cloud policies and granular controls for administrators to manage AI features securely. The article aims to showcase how Chrome Enterprise leverages AI to create a more intuitive and efficient browsing experience, ensuring data safety and compliance with corporate policies.

Embodied Intelligence Companies Navigating Uncharted Territory Seek 'Certainty'

This article delves into Embodied Intelligence as a new frontier in AI, drawing parallels to autonomous driving technology. Driven by the advancement of Large Models, the maturity of Embodied Intelligence is expected to accelerate. The article analyzes the divergent definitions, varying technical approaches, and similarities and challenges compared to autonomous driving. It also highlights the multiple obstacles to commercialization, yet emphasizes the vast market potential and accelerated development fueled by Large Models and autonomous driving technology.

Ten Questions on Gamma: How the Breakthrough AI Presentation Tool King Was Trained?

Gamma is an AI-driven content presentation tool. It turns slide creation into a writing-like experience, simplifying content creation, reducing formatting and design work, and making presentations easier to share and consume asynchronously. Gamma combines modular components and block-based editing with AI to offer a clean, visually polished creation experience that supports rapid iteration. Its success stems from a user-oriented product strategy: deeply understanding user needs, continuously optimizing the product experience, and maintaining fast development and release cycles.

AI Products Facing Monetization Challenges in Overseas Markets

AI products are encountering payment hurdles in overseas markets. Social and image AI products in particular are frequently rejected by payment service providers because of stringent risk-control policies and high compliance requirements. AI products face challenges in validating PMF (Product-Market Fit), while AI role-playing chat products demonstrate strong traffic and cash flow. Payment service providers decide whether to cut off payments based on three red lines: borderline content (content that is close to, but not yet, violating regulations), political sensitivity, and protection of minors. Compliance is therefore crucial to whether an AI product's commercialization succeeds or fails.

Practical Evaluation of 4 Leading Chinese AI Video Generation Models: Falling Short of Expectations, with Significant Differences

This article evaluates four leading Chinese AI video generation models: Keling, Jidian, PixVerse, and Qingying. It first reviews the development of AI video generation technology, particularly the influence of OpenAI's Sora model on the industry. It then compares the models on four aspects: video quality and clarity; content accuracy, consistency, and richness; usage cost; and generation speed and interface. Using specific test scenarios and prompts, the article shows how each model performs in applications such as derivative creation, human eating scenes, animals acting like humans, and futuristic cities. It also analyzes the strengths and weaknesses of each model, highlighting Keling's superior video quality and clarity and the content richness of Jidian and PixVerse. Finally, the article discusses the commercialization challenges facing AI video generation, including high development costs and limited user willingness to pay, and stresses that Chinese AI video generation models need sustainable commercialization strategies to grow.

Practical Sharing | Calculating LTV for AI Subscription Users

This guide details the three steps to calculate the lifetime value (LTV) of AI product subscription users: determining the average revenue per user (ARPU), estimating the average customer lifespan (ACL), and calculating LTV. Through a case study, it demonstrates how to calculate LTV based on different renewal rates for monthly and annual subscriptions, and discusses the LTV recovery period and setting customer acquisition costs. The article emphasizes the importance of regularly validating and adjusting the LTV model to ensure the ongoing effectiveness of business strategies.
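
The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the article's own model: it assumes a constant renewal rate per billing period, so the average customer lifespan is 1 / (1 - renewal rate) periods. The prices, renewal rates, and the 3:1 LTV-to-CAC ratio are hypothetical values chosen only for the example.

```python
def average_customer_lifespan(renewal_rate: float) -> float:
    """Expected number of billing periods a subscriber stays.

    Assumption: the renewal rate is constant every period, so the
    lifespan follows a geometric series: 1 + r + r^2 + ... = 1 / (1 - r).
    """
    if not 0.0 <= renewal_rate < 1.0:
        raise ValueError("renewal_rate must be in [0, 1)")
    return 1.0 / (1.0 - renewal_rate)


def lifetime_value(arpu_per_period: float, renewal_rate: float) -> float:
    """LTV = ARPU per billing period x average customer lifespan (in periods)."""
    return arpu_per_period * average_customer_lifespan(renewal_rate)


def max_acquisition_cost(ltv: float, ltv_to_cac_ratio: float = 3.0) -> float:
    """Upper bound on customer acquisition cost for a target LTV:CAC ratio.

    The default ratio of 3 is a common rule of thumb, assumed here.
    """
    return ltv / ltv_to_cac_ratio


# Hypothetical plans: $10/month with 80% monthly renewal,
# and $96/year with 50% annual renewal.
monthly_ltv = lifetime_value(arpu_per_period=10.0, renewal_rate=0.80)
annual_ltv = lifetime_value(arpu_per_period=96.0, renewal_rate=0.50)

print(f"Monthly plan LTV: {monthly_ltv:.2f}")
print(f"Annual plan LTV:  {annual_ltv:.2f}")
print(f"Max CAC (monthly plan): {max_acquisition_cost(monthly_ltv):.2f}")
```

Because renewal rates drift over time, the inputs should be re-estimated from actual cohort data on a regular basis, which is the validation step the guide emphasizes.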

What is Good Design in the Age of AI? How Will AI Influence Experience Design?

- AI-assisted design tools need to establish a solid design foundation, guided by refined design guidelines to generate high-quality UI designs.

- AI tools will blur the boundaries between design and development, promoting the integration of designers' and engineers' skills to form full-stack product developers.

- AI technology should be utilized pragmatically and efficiently, such as AI-powered search functionality, to enhance design efficiency rather than over-pursuing an all-purpose AI assistant.

- AI tools should evolve toward 'collaborative creation': deeply understanding users' work context and providing intelligent prediction and flexible, adaptive support.

Achieving AI Transcription Success in Japan, Reaching Nearly $10 Million ARR

Notta is an AI transcription startup headquartered in Singapore with its R&D center in Shenzhen, founded by Chinese entrepreneur Zhang Yan. The company focuses on voice transcription services for meeting scenarios, achieving rapid growth in the competitive meeting transcription sector through precise targeting of the Japanese market and deep localization strategies. Notta's product has garnered over 4 million monthly visits in the Japanese market and has achieved nearly $10 million in Annual Recurring Revenue (ARR). Its success lies not only in its technological advantages but also in its profound understanding of the Japanese market, localization execution, and the strategic shift from ToC (To Consumer) to ToB (To Business).

Tencent AI Reader: Deep Reading for Long Texts, Enhancing Professional Reading Efficiency

Tencent AI Reader's Deep Reading for Long Texts feature is based on the Tencent Hunyuan Large Model, supporting the processing of up to 500,000 words of professional content. The deep reading mode provides an overview of core content, modular analysis, and summary charts, helping users quickly grasp key information. For foreign language literature, the feature can extract the innovation points and shortcomings of the paper, assisting in judging the quality of the paper. In terms of financial data processing, Tencent AI Reader organizes data from multiple dimensions, generating professional charts to make financial status more visual, and integrates a calculator function to ensure accurate numerical calculations. Additionally, the feature supports multimodal interaction and offline review, providing a one-stop service for professional reading.

Fu Sheng's Innovation Formula: How Can Chinese Robotics Overtake on a Curve?

Fu Sheng shared his entrepreneurial journey and insights on the development of the Chinese robotics industry. He believes that robotics hardware development doesn't follow Moore's Law, so intelligence is needed to compensate for the lack of precision. To overtake on a curve, Chinese robotics companies must focus on AI applications, especially in service robots. Fu Sheng also introduced his 'Innovation Formula', which centers on fundamental human needs and holds that technological innovation requires continuous trial and error in quick, small steps. Additionally, he discussed the global layout of the Chinese robotics industry and how to gain competitive advantages in cost, reliability, and scenario-specific applications.

Character.AI Founders Depart, Industry Enters New Phase

A portion of the Character.AI founding team has joined Google, marking a strategic adjustment for the company. Character.AI will transition towards utilizing more third-party LLMs and open-source models to optimize resource allocation and product experience. This adjustment reflects the AI field's evolving thinking about technological transformation and resource optimization.

Character.AI will enter into an agreement with Google to provide non-exclusive licenses for LLM technology, while receiving financial support from Google. The remaining team will continue to focus on product development at Character.AI.

Industry observers believe that Character.AI has pioneered the 'emotional connection' track for large model products, demonstrating the value of these models. However, the C.AI product itself has not yet achieved product-market fit. The departure of the founding team and the strategic adjustment reflect the mismatch between the technical and R&D background of the founding team and the company's developmental stage needs, as well as the industry's trend towards a new phase.

OpenAI's Leadership Shakeup: Is a 'Tyrant' at the Helm Leading the Company Towards a Dangerous Future?

OpenAI has recently experienced a significant exodus of key executives: President Greg Brockman is taking a long-term leave of absence, and co-founder John Schulman and product head Peter Deng have departed. Only a few founders, including Sam Altman, remain. This wave of departures has raised concerns about OpenAI's stability and future direction. The article analyzes the reasons behind OpenAI's talent drain, including internal conflicts over AI safety philosophies and Sam Altman's assertive management style. The shakeup also reflects the challenges OpenAI faces in its pursuit of AGI breakthroughs, including balancing development speed, ethical considerations, and corporate governance.

Five Insights from Jobs' 55-Minute Speech 41 Years Ago, Still Relevant in the AI Era

In his 1983 speech, Steve Jobs shared profound insights on AI and technological development. He envisioned the possibility of chatting with Aristotle, emphasized the information gap brought by new technologies, proposed future-oriented product planning concepts, explored the potential of AI information assistants, and shared strategies for entrepreneurship in an era of technological revolution. These views were forward-looking at the time and remain relevant in today's AI era, offering useful guidance for AI practitioners and entrepreneurs.

Elon Musk's Latest 20,000-Word Interview (August): xAI, Neuralink, and the Future of Humanity (with Edited Video)

This article covers an in-depth interview between Lex Fridman and Elon Musk spanning several cutting-edge technology fields, including the latest advancements in Neuralink, the integration of AI with humans, and the challenges facing the future of humanity. Musk detailed Neuralink's implantation technology and its potential impact on neurobiology, predicting a significant increase in future communication speeds. The interview also explored applications of AI in enhancing human experiences, memory recovery, and the exploration of extraterrestrial life, as well as the potential and engineering challenges of the humanoid robot Optimus. Musk emphasized the safety and ethical issues of AI systems and the critical role of technological innovation in the rise and fall of civilizations.

Interview with 'Spatial Computing' Author: If Jobs Were Alive, Vision Pro Would Be Cheaper | The Epoch

Spatial computing is a technology that encompasses software and hardware, enabling unique experiences and movements in 3D space for both humans and robots. It liberates people from screens, making every surface a potential interactive interface. Apple's Vision Pro is a representative product in the field of spatial computing, but due to its high price and short battery life, it has struggled to gain traction in the market. Apple plans to release a cheaper version to expand its user base. Spatial computing has broad application prospects in fields such as healthcare, content creation, and data analysis, and when combined with Generative AI, it will bring more innovation and convenience.

AI Phones, AIPC: A False Proposition?

This article delves into the impact of artificial intelligence (AI) technology on the market value of tech giants like Apple, Microsoft, and NVIDIA. It explores the development trends of AI terminal applications, emphasizing the crucial role of computing power, large models, and terminal applications in driving AI development. AI terminal applications act as a bridge between users and AI technology, offering immense potential for growth. Despite the slower-than-expected development of AI application software, the industrial logic of AI terminals is clear, driven by supply-side momentum. The article focuses on the development of AI phones and AIPC, analyzing the market competition landscape and key technical elements, such as the importance of chips and operating systems. It predicts that AI terminals may experience a significant surge in adoption by 2025.

Andrew Ng's Letter: How to Brainstorm AI Startup Ideas

- Collaborate with domain experts to quickly concretize vague ideas using their intuition.

- Generate a large number of ideas, at least 10, for comparison and selection, avoiding over-reliance on a single idea.

- Set clear evaluation criteria, such as business value and technical feasibility, to consistently judge ideas.

Bengio's Unveiled Plans: World Model + Mathematical Proof to Ensure AI System Functionality

Yoshua Bengio, a leading figure in deep learning, has joined the Safeguarded AI project, which aims to build a system capable of understanding and mitigating the risks posed by other AI agents. The project is supported by the UK's Advanced Research and Invention Agency (ARIA), which plans to invest £59 million. It is divided into three technical areas (TAs): scaffolding, machine learning, and application, each with specific goals and budgets. Bengio is focusing on TA3 and TA2, providing strategic scientific guidance. The project proposes a 'Guaranteed Safe AI' model, which quantifies AI system safety through the interaction of three core components: world models, safety specifications, and verifiers. Additionally, the project categorizes strategies for creating world models into safety levels L0-L5.

This AI Standalone Hardware: Returns Outpace Sales

Since its release, the standalone AI hardware device AI Pin has faced a wave of returns, with returns exceeding purchases. Reviews of AI Pin have been extremely poor, with users expressing disappointment in its usability. Its maker, Humane, is under financial pressure, with negligible sales revenue and executive departures. The article points out that AI in hardware still has limitations and is unlikely to replace existing terminal devices such as smartphones.

AI in Action | AI Creates a Solo Living Girl's Life Vlog, Gaining Tens of Thousands of Likes in 3 Days

This article presents a real-life case of creating a life vlog about a girl living alone, detailing how AI tools were applied to content creation. It first notes that AI technology is transforming how we live and work, then demonstrates how to generate scripts, create images, and edit videos using a combination of Kimi, Jimeng, and Jianying. The process is simple and easy to follow. The article highlights the ease of use and practicality of AI tools, encouraging content creators to explore what AI can do for content creation.