BestBlogs.dev Highlights Issue #17

Dear friends,

👋 Welcome to this edition of curated articles from BestBlogs.dev!

🚀 In this issue, we dive into the latest breakthroughs, innovative applications, and industry dynamics in the AI field. We'll explore cutting-edge developments in model advancements, development tools, product innovations, and market strategies. Join us on this exciting journey through the frontiers of AI!

🧠 AI Models & Technology: Architectural Innovation, Performance Leaps

- Jamba 1.5, based on the Mamba architecture, significantly enhances long-context processing, ushering in a new era for non-Transformer models.

- Meta's Transfusion model, fusing Transformer and Diffusion technologies, achieves breakthrough progress in multimodal AI.

- Open-source models like Gemma 2 and GLM-4-Flash promote AI accessibility, lowering entry barriers and offering comprehensive training and fine-tuning guides.

⚙️ AI Development & Tools: Efficiency Boost, Application Expansion

- In-depth analysis of RAG system optimization techniques enhances unstructured data processing, featuring innovative "late chunking" technology.

- Google Cloud showcases Imagen 3 on Vertex AI, advancing high-quality visual content generation and multimodal search system construction.

- Detailed explanations of AI Agent design patterns (like ReAct) and unified tool usage APIs facilitate the development of smarter, more efficient AI systems.

💡 AI Products & Applications: Creative Spark, Business Innovation

- Ideogram 2.0 and CapCut make significant strides in image generation and video editing, showcasing the vast potential of AI creative tools in global markets.

- AI-assisted programming tools (like Cursor AI) attract major investments, highlighting the market's strong demand for enhanced development efficiency.

- Analysis of pricing models across 40 leading AI products reveals emerging trends in AI commercialization, exploring innovative business models that blend B2C and B2B approaches.

🌐 AI Industry Dynamics: Shared Insights, Future Outlook

- Experts including Li Mu, Demis Hassabis, and Zhang Hongjiang discuss AI technology trends and challenges, focusing on large model scaling, efficiency, and multimodal AI development.

- Mark Zuckerberg and a16z analyze AI's profound impact on industries, predicting software industry restructuring and the acceleration of "software becoming labor."

- Thought leaders like Andrew Ng explore AI ethics, employment impacts, and AGI development prospects, while comparing AI development paths in China and the US, offering insights into global AI innovation and investment landscapes.

Intrigued by these exciting AI developments? Click through to read the full articles and explore more fascinating content!

Table of Contents

- Jamba 1.5 Released: Longest Context, Non-Transformer Architecture Takes the Lead

- New in Gemini: Custom Gems and improved image generation with Imagen 3

- Meta Releases Three Comprehensive LLaMA Fine-tuning Guides: A Must-Read for Beginners

- Bringing Llama 3 to life

- Unified Language and Image Model Revolution: Meta Combines Transformer and Diffusion, Ushering in a New Era of Multimodal AI

- A Small Step Towards Inclusive AI: GLM-4-Flash, Free!

- Gemma explained: What’s new in Gemma 2

- 7000 Words In-Depth Explanation! Even Kindergarteners Can Understand the Working Principle of Stable Diffusion

- Andrew Ng's Letter: The Decline in LLM Token Prices and Its Implications for AI Companies

- Deep Dive into Large Language Models: Data, Evaluation, and Systems | Stanford's Latest Lecture on Building LLMs: A Comprehensive 30,000-Word Guide (with Video)

- How We Fine-Tuned Open-Sora in 1000 GPU Hours

- A developer's guide to Imagen 3 on Vertex AI

- Optimizing RAG Performance: A Detailed Guide to High-Quality Document Parsing

- Building a serverless RAG application with LlamaIndex and Azure OpenAI

- Tool Use, Unified

- Large Language Model in Action: AI Agent Design Patterns, ReAct

- Multimodal search using NLP, BigQuery and embeddings

- Learn to Use the Gemini AI MultiModal Model

- Unlocking Creativity with Prompt Engineering

- How We Built Townie - A Full-Stack Application Generator [Translated]

- 10GB of VRAM, Fine-tuning Qwen2 with Unsloth and Inference with Ollama

- Survey: The AI wave continues to grow on software development teams

- Customized 'Black Myth: Wukong', Outperforming Midjourney, This AI Art Generator is Highly Captivating

- Z Product | a16z's Investment in 4 MIT Geniuses Pioneering AI Programming, OpenAI and Perplexity as Clients

- Ten Thoughts on AI Super Apps: AI Alone Is Not Enough, We Also Need Humans

- Benchmarking Four Leading AI-powered PPT Generators: Frequent Image Errors and Data Visualization Mishaps

- Global AI Products Top 100 Released! Only One Domestic App in the Top Ten, ByteDance Begins to Dominate the Rankings

- Evaluating the Top 6 Smart Question Answering Software in China: Which One is the Best?

- Real-world Testing of Four AI Image Generation Tools: Midjourney's Dominance Remains Unshaken

- Top 20 AI-Generated Video Products Worldwide: Categorization and Application Scenarios

- Z Product | Too Many AI APIs? This Platform Solves the Problem, Securing $8 Million in Seed Funding from US Investors

- ByteDance's Most Lucrative Overseas AI App: CapCut Surpasses 30 Million MAUs, Generating $1.25 Billion Annually

- Z Potentials | Exclusive Interview with Replika's Former AI Lead, Startup Secured A16Z Investment, Building a Multimodal Interaction Platform with Millions of Dollars in ARR

- Z Product | How a Webcam Received a Significant Investment from OpenAI and Transitioned to the Next Generation of AI-Powered Creative Tools?

- How to Commercialize AI Applications? 40 Leading AI Products Tell You

- Interview with Motiff's Zhang Haoran: Our Only Rival is Figma | Jiazi Guangnian

- Viggle: A Chinese AI Startup Disrupting Video Creation on Discord

- Li Mu Returns to Alma Mater Shanghai Jiao Tong University, Discussing LLMs and Personal Career: Full Speech Transcript

- Mark Zuckerberg: Don't Fear Big Companies, 2/3 of Opportunities They Miss

- A16Z: The Core of AI+SaaS is Accelerating the Process of 'Software Becoming Labor'

- General-purpose Humanoid Robots: A Year Away?

- AI Robot Revolution: Brett Adcock on Figure 2 and a Future World of 100 Billion Robots (2,000-word video included)

- Hassabis: Google Aims to Create a Second Transformer and Integrate AlphaGo with Gemini

- From Dial-up to a $55 Billion Start-up: An In-Depth Interview with the Transformer Author on AI Trends

- Zhang Hongjiang: My Eight Observations and Opinions on AI and Large Models | AI Light-year

- Zoom Founder Eric Yuan: How Zoom Is Using AI to Grow After a $150 Billion Market Value Drop?

- Tencent's Hunyuan Large-Scale MoE Model Lead Wang Di: Unveiling the Billion-Scale MoE System Engineering Path | Expert Interview

- Coding Alone Is Outdated: How Microsoft Engineers Are Winning the AI Breakthrough?

- Depth | Cohere Founder's Latest Thoughts: Model Development Is Getting Harder! AI's Next Big Breakthrough Will Be in Robotics, Models Need to Be More Powerful and Affordable

- Deep Interview with Andrew Ng: According to the Standard Definition of AGI, We Still Need Decades

- Rewind Founder Shares Honest Experience: How to Secure Over 1,000 Investment Intentions with a 7-Minute Video?

- After 7 Years of AI Companionship, How Does Replika's Founder See the Future of This Field?

- 10 Lessons from Zuckerberg: Building a $100 Million AI Business in 9 Months

- AIGC Weekly #86: Ideogram 2.0 with Powerful Typography and Non-Transformer Models Catching Up

- Why SaaS Flourished in the US While China Dominated Super Apps in the Past Decade?

Jamba 1.5 Released: Longest Context, Non-Transformer Architecture Takes the Lead

AI21 Labs, founded in 2017 by AI pioneers, has released the Jamba 1.5 series of models, described as the world's first production-level models based on the Mamba architecture. Developed by researchers at Carnegie Mellon University and Princeton University, the Mamba architecture addresses the limitations of traditional Transformer models in memory usage and inference speed. By combining the strengths of Transformer and Mamba, the Jamba 1.5 series achieves significant improvements in long-context processing, speed, and quality. It supports multiple languages and offers the longest effective context window on the market, reaching 256K tokens. The Jamba 1.5 series performs well across benchmarks and outperforms its peers on Arena Hard in particular. This breakthrough for non-Transformer architectures gives enterprises and developers more efficient and powerful tools.

New in Gemini: Custom Gems and improved image generation with Imagen 3

Google has announced new features for its Gemini platform, including customizable AI experts known as 'Gems' and an upgraded image generation model called 'Imagen 3'. Gems allow users to create personalized AI assistants on any topic, from coding to career advice, and are available for Gemini Advanced, Business, and Enterprise users. These Gems can be customized with specific instructions and are accessible on both desktop and mobile devices in over 150 countries and most languages. Additionally, Imagen 3, Google's latest image generation model, is being rolled out to enhance creative capabilities. It sets a new standard for image quality, generating high-quality images from simple text prompts and supporting various styles such as photorealistic landscapes and textured oil paintings. Imagen 3 includes built-in safeguards and adheres to Google's product design principles, ensuring user control over the creative process. The model also uses SynthID for watermarking AI-generated images and will soon support the generation of images of people, starting with an early access version in English for Gemini Advanced, Business, and Enterprise users.

Meta Releases Three Comprehensive LLaMA Fine-tuning Guides: A Must-Read for Beginners

Meta recently unveiled three detailed guides on fine-tuning the LLaMA model, exploring each stage from pre-training to fine-tuning, including continued pre-training, full-parameter fine-tuning, PEFT, RAG, and ICL. The articles discuss the advantages and disadvantages of these methods, their application scenarios, and highlight the importance of dataset management and human-machine collaboration in data annotation. These guides provide practical fine-tuning strategies and step-by-step instructions for resource-constrained users, helping them achieve better performance on specific tasks and adapt to real-world application needs.

Bringing Llama 3 to life

The article from Engineering at Meta outlines the release of Llama 3, Meta's most advanced open-source large language model (LLM), with a focus on the model's enhancements in version 3.1. This new iteration is designed to support workflows like synthetic data generation and model distillation, with capabilities that rival top closed-source models. At the AI Infra @ Scale 2024 conference, Meta engineers shared their insights on Llama 3's development process. They discussed everything from data preparation and diversity to model training infrastructure, and the complex challenges of scaling and optimizing inference across Meta’s cloud and hardware systems.

Unified Language and Image Model Revolution: Meta Combines Transformer and Diffusion, Ushering in a New Era of Multimodal AI

Meta's latest Transfusion model, a groundbreaking combination of Transformer and Diffusion technologies, successfully integrates language and image generation into a single model. This model trains a single Transformer on multimodal sequences, significantly enhancing the efficiency and quality of multimodal processing through innovative methods. Transfusion demonstrates exceptional performance in both single-modal and multimodal benchmark tests, generating images comparable to diffusion models while retaining powerful text generation capabilities. This model showcases the immense potential of multimodal AI and predicts the widespread adoption of multimodal technology in the future.

A Small Step Towards Inclusive AI: GLM-4-Flash, Free!

Amid continuing advances in large model technology, Zhipu has announced that the GLM-4-Flash model is now free for everyone. Users can register on the bigmodel.cn platform to access the model at no cost and build their own models and applications. GLM-4-Flash is fast and cost-effective, making it suitable for simple, low-cost tasks that need quick responses. It supports multi-turn dialogue, web browsing, function calling, and long-text reasoning across 26 languages, and has proven easy to use in areas such as scientific research data preprocessing, information extraction, multilingual translation, and multi-turn dialogue. On the inference side, GLM-4-Flash has been optimized with adaptive weight quantization, various parallelization methods, batch processing strategies, and speculative sampling, which improves runtime efficiency and reduces inference costs. On the pre-training side, large language models were used for data filtering and FP8 was used for efficient pre-training, improving training efficiency and computational throughput. By offering strong logical reasoning, fast generation, web retrieval, and function calling for free, Zhipu aims to promote inclusive AI and enable more people to use AI technology to solve practical problems.

Gemma explained: What’s new in Gemma 2

The article presents Gemma 2, a groundbreaking open model series that includes 2B, 9B, and 27B parameter sizes, designed to set new benchmarks in performance and accessibility. Gemma 2 has demonstrated exceptional performance in real-world conversational tasks, particularly in the LMSYS Chatbot Arena, where it has outperformed larger models. Key architectural innovations include Alternating Local and Global Attention, Logit Soft-Capping, RMSNorm for pre and post-normalization, and Grouped-Query Attention (GQA). These advancements enhance the model's efficiency, stability, and overall accuracy. Additionally, Gemma 2 offers seamless fine-tuning and integration capabilities across various platforms and hardware configurations.
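As a rough illustration of logit soft-capping, one of the techniques listed above, the sketch below squashes each logit smoothly into a bounded range using tanh. The function name `soft_cap` and the cap value of 30.0 are assumptions chosen for illustration, not details taken from the article.

```python
import math


def soft_cap(logits, cap=30.0):
    """Smoothly bound each logit to the range [-cap, cap] using tanh."""
    return [cap * math.tanh(x / cap) for x in logits]


# Small logits pass through almost unchanged; extreme ones saturate near +/- cap.
capped = soft_cap([-1000.0, -5.0, 0.0, 5.0, 1000.0])
print(capped)
```

Unlike hard clipping, the tanh form stays differentiable everywhere, which is one plausible reason such a cap can help training stability.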

7000 Words In-Depth Explanation! Even Kindergarteners Can Understand the Working Principle of Stable Diffusion

This article delves into the working principle of Stable Diffusion and its application in AI painting technology. By comparing generative adversarial networks (GANs) and diffusion models, it highlights Stable Diffusion's advantages in terms of sample quality, diversity, and stability. The article further explains the forward and reverse diffusion processes in diffusion models, along with Stable Diffusion's conditional generation techniques, such as text-to-image and image-to-image conversion. Additionally, it explores how Stable Diffusion's open-source nature has fostered research and plugin development, and notes that while AIGC technology lowers the barrier to design, it also places new demands on designers to keep learning.

Andrew Ng's Letter: The Decline in LLM Token Prices and Its Implications for AI Companies

In this article, Andrew Ng discusses the major change in OpenAI's GPT-4 token prices, which have dropped from $36 per million tokens to $4, and its impact on the AI industry. This price decline is primarily driven by the release of open-source models like Llama 3.1 and hardware innovations, including advancements from companies like Groq, SambaNova, and Cerebras. Andrew Ng advises AI companies to focus on building practical applications rather than over-optimizing costs, and to consider the economic feasibility brought by future price declines. He also notes that it is wise to regularly evaluate and switch models to take advantage of price drops and feature enhancements as new models are introduced. Additionally, Andrew Ng highlights how advances in evaluation techniques simplify the model-switching process and predicts that token prices will continue to decline.
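To make the scale of the price change concrete, here is a small worked calculation using the two per-million-token prices quoted in the summary. The monthly workload of 50 million tokens is a hypothetical figure chosen only for illustration.

```python
OLD_PRICE = 36.0  # USD per million tokens (figure quoted in the summary)
NEW_PRICE = 4.0   # USD per million tokens (figure quoted in the summary)


def cost(tokens, price_per_million):
    """Return the USD cost of processing `tokens` at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million


# Relative price drop between the two quoted prices.
drop = (OLD_PRICE - NEW_PRICE) / OLD_PRICE

monthly_tokens = 50_000_000  # hypothetical workload, not from the article
old_cost = cost(monthly_tokens, OLD_PRICE)
new_cost = cost(monthly_tokens, NEW_PRICE)

print(f"price drop: {drop:.1%}")
print(f"monthly cost: ${old_cost:,.0f} -> ${new_cost:,.0f}")
```

Under these assumptions the same workload falls from $1,800 to $200 per month, a drop of roughly 89%.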

Deep Dive into Large Language Models: Data, Evaluation, and Systems | Stanford's Latest Lecture on Building LLMs: A Comprehensive 30,000-Word Guide (with Video)

Based on Stanford University's latest lecture on building Large Language Models (LLMs), this article comprehensively analyzes the LLM construction process, including model architecture, data processing, pre-training and post-training, evaluation metrics, and system optimization. It emphasizes the importance of data, evaluation, and systems in LLM development, detailing the workings of autoregressive language models, the role of tokenizers, evaluation methods, the complexities of data processing, the application of scaling laws, and the training costs and environmental impacts. This exploration provides readers with a deep understanding of LLM technical details and practical challenges.

How We Fine-Tuned Open-Sora in 1000 GPU Hours

This article, published by AI Frontline, explains how to fine-tune the Open-Sora model to generate high-quality stop-motion animation videos. It first introduces the application background of Text2Video models, pointing out that fine-tuning open-source models can improve their ability to generate videos that meet specific requirements. It then details the process of fine-tuning the Open-Sora 1.1 Stage3 model, covering hardware configuration, software environment, data preparation, and model training. The hardware was a 32-GPU cluster provided by Lambda, equipped with NVIDIA H100 GPUs and high-speed network connections. On the software side, Conda was used to keep the environment configuration consistent. For data, high-quality stop-motion animation videos were collected from YouTube channels and annotated using GPT-4. The article then walks through the training process and results of two fine-tuned models, showing the model's output at different stages. Finally, it discusses directions for future improvement, including better temporal consistency, less noise in unconditional generation, and higher resolution and frame rate.

A developer's guide to Imagen 3 on Vertex AI

The article from the Google Cloud Blog introduces Imagen 3, a powerful text-to-image model available on Vertex AI. It outlines feedback from early users and identifies three key themes: demand for high-quality visuals across various styles and formats, strong adherence to prompts for precise image generation, and controls for trust and safety through SynthID watermarking and advanced safety filters. The article delves into the model's capabilities, providing code examples and best practices for maximizing its potential. It showcases Imagen 3's ability to produce photorealistic images with exceptional composition and resolution, its enhanced text rendering capabilities, and its prompt comprehension. Additionally, it introduces Imagen 3 Fast, optimized for speed, suitable for creating brighter, higher contrast images with reduced latency. The article concludes with how to access Imagen 3 and additional resources for integration.

Optimizing RAG Performance: A Detailed Guide to High-Quality Document Parsing

This article, published by Alibaba Cloud developers, discusses how to convert unstructured data (such as PDF and Word documents) into structured data for use by RAG (Retrieval-Augmented Generation) systems. It first introduces the background, noting that while general-purpose large language models (LLMs) have made progress in knowledge-based question answering, they still fall short in specialized fields. RAG systems combine the user's original question with private domain data to provide more accurate information, thereby improving answer quality. The article compares the parsing of Word and PDF documents in detail, including their format structures, parsing difficulties, and processing strategies. It also introduces the overall architecture of Alibaba Cloud's document content parsing for search, demonstrating how document parsing can improve the performance of RAG systems. Finally, the article provides a way to try the service and reference links to help readers further understand and apply the relevant technologies.

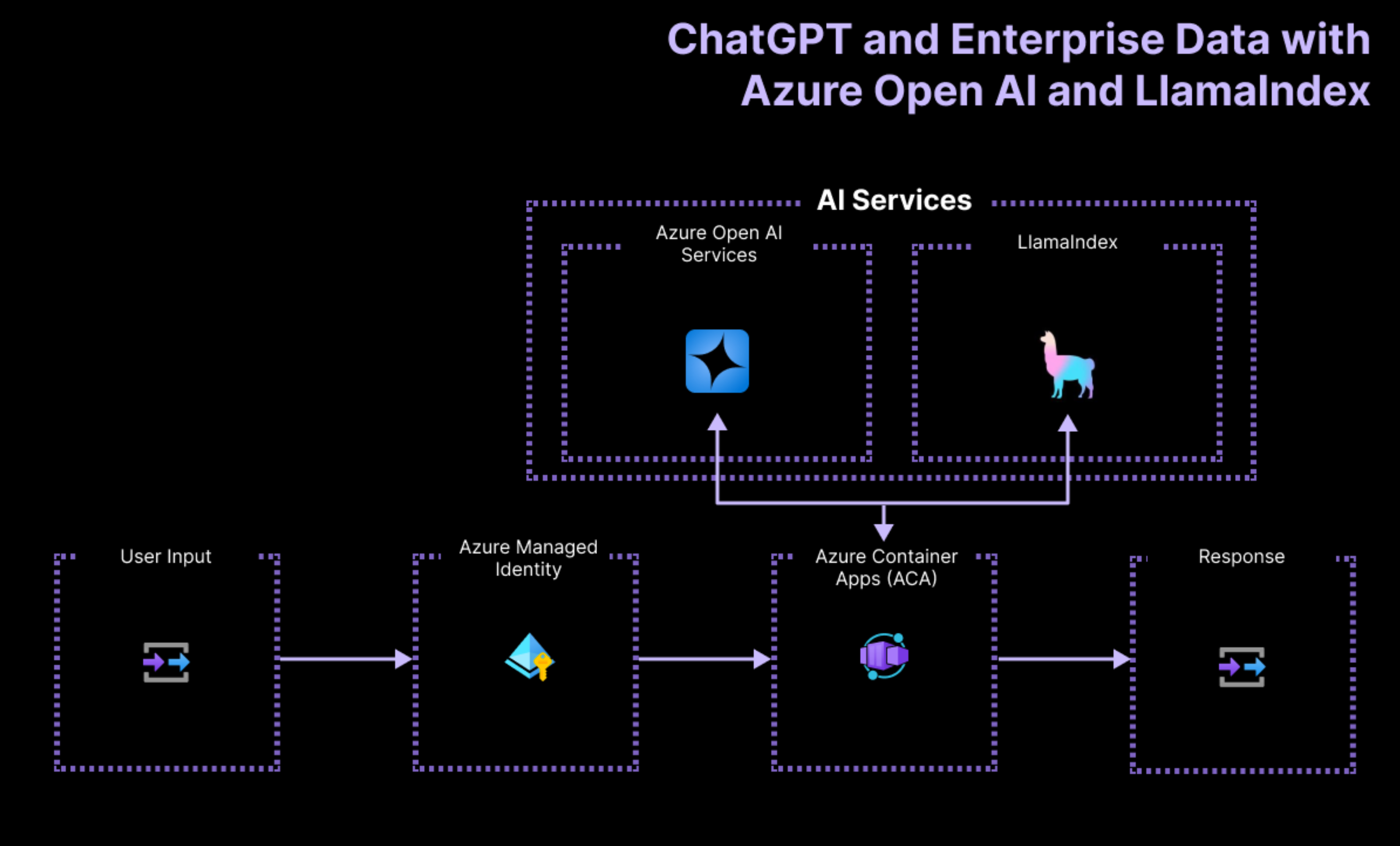

Building a serverless RAG application with LlamaIndex and Azure OpenAI

The article offers a comprehensive guide for developers to create serverless Retrieval-Augmented Generation (RAG) applications using LlamaIndex and Azure OpenAI, and to deploy them on Microsoft Azure. It introduces the RAG architecture, which enhances AI text generation by integrating external knowledge through a retriever-generator model. Detailed steps are provided for implementing RAG with LlamaIndex, including data ingestion, index creation, query engine setup, and the retrieval and generation process. Practical examples in TypeScript and Python illustrate the complete implementation using Azure OpenAI, with deployment facilitated by the Azure Developer CLI. The article highlights the benefits of integrating proprietary business data into AI applications to improve response quality and relevance, while also leveraging Azure's scalability and security.

Tool Use, Unified

Hugging Face has developed a unified tool use API that allows for seamless integration of tools across multiple model families, including Mistral, Cohere, NousResearch, and Llama. This API minimizes the need for model-specific changes, enhancing portability and ease of use. The Transformers library now includes helper functions to facilitate tool calling, along with extensive documentation and examples. The API addresses significant limitations of LLMs, such as imprecision in calculations and facts, and lack of up-to-date knowledge. The introduction of chat templates further supports tool use, allowing users to define tools using JSON schemas or Python functions, which are automatically converted. This approach simplifies the process and ensures compatibility across different programming languages.
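The idea of defining a tool as a plain Python function and converting it automatically into a JSON schema can be sketched with the standard library alone. This is an illustrative approximation and not the Transformers implementation: `function_to_tool_schema`, the `JSON_TYPES` mapping, and the `get_temperature` stub are all hypothetical names introduced here.

```python
import inspect
from typing import get_type_hints

# Hypothetical mapping from Python annotations to JSON Schema type names.
JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


def function_to_tool_schema(fn):
    """Build a JSON-schema-style tool description from a typed Python function."""
    hints = get_type_hints(fn)
    params = inspect.signature(fn).parameters
    properties = {name: {"type": JSON_TYPES[hints[name]]} for name in params}
    # Parameters without a default value are treated as required.
    required = [
        name for name, p in params.items() if p.default is inspect.Parameter.empty
    ]
    return {
        "type": "function",
        "function": {
            "name": fn.__name__,
            "description": inspect.getdoc(fn) or "",
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }


def get_temperature(city: str, unit: str = "celsius") -> float:
    """Return the current temperature for a city."""
    return 21.0  # stub value for illustration


schema = function_to_tool_schema(get_temperature)
print(schema)
```

Deriving the schema from the signature and docstring keeps the tool definition in one place, which is the portability benefit the summary describes.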

Large Language Model in Action: AI Agent Design Patterns, ReAct

The article begins by introducing eight design patterns for AI Agents, with a particular focus on the characteristics and applications of the ReAct framework. By mimicking human thinking and action processes, the ReAct framework, which combines reasoning and action within language models, provides an effective method for addressing diverse language reasoning and decision-making tasks. The article details the Think-Act-Observe (TAO) loop of the ReAct framework and illustrates the differences between the ReAct approach and the Reasoning-Only and Action-Only approaches using an example of a smart calendar management assistant. Additionally, the article provides a source code example, detailing the implementation process of the ReAct framework, including preparing a Prompt template, constructing an Agent, defining Tools, looping execution, and practical running examples. Finally, the article summarizes the advantages and shortcomings of the ReAct framework and points out its potential applications in scenarios such as AI-powered customer support and knowledge-based chatbots. The article also mentions some challenges encountered in practical applications, such as unstable content output, high costs, and uncontrollable response times.
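The Think-Act-Observe loop described above can be sketched in a few lines. To keep the example self-contained, the language model is replaced by a scripted stand-in, so `scripted_model`, `check_calendar`, and the calendar data are hypothetical and do not come from the article's source code.

```python
def check_calendar(day):
    """Hypothetical tool: return the events booked on a given day."""
    events = {"monday": ["team sync 10:00"], "tuesday": []}
    return events.get(day, [])


TOOLS = {"check_calendar": check_calendar}


def scripted_model(history):
    """Stand-in for an LLM: chooses the next step from the observations so far."""
    observations = [item for item in history if item[0] == "observe"]
    if not observations:
        return {
            "thought": "I need Tuesday's schedule.",
            "action": "check_calendar",
            "input": "tuesday",
        }
    if observations[-1][1] == []:
        return {
            "thought": "Tuesday is free.",
            "final": "Tuesday is free; book the meeting then.",
        }
    return {"thought": "Tuesday is busy.", "final": "Tuesday is busy; pick another day."}


def react(question, model, tools, max_steps=5):
    """Run a Think-Act-Observe loop until the model gives a final answer."""
    history = [("question", question)]
    for _ in range(max_steps):
        step = model(history)
        history.append(("think", step["thought"]))
        if "final" in step:
            return step["final"], history
        # Act: call the chosen tool, then record what it returned (Observe).
        observation = tools[step["action"]](step["input"])
        history.append(("act", (step["action"], step["input"])))
        history.append(("observe", observation))
    return None, history  # step budget exhausted without a final answer


answer, trace = react("When can I schedule a meeting?", scripted_model, TOOLS)
print(answer)
```

The `max_steps` bound is one simple guard against the uncontrollable response times and costs that the article lists among ReAct's practical challenges.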

Multimodal search using NLP, BigQuery and embeddings

The article from the Google Cloud Blog presents a demonstration of a multimodal search system that leverages natural language processing (NLP), BigQuery, and embeddings to perform searches across images and videos. Traditional search engines are predominantly text-based and struggle with visual content analysis. This new approach uses multimodal embeddings to enable users to search for images or videos, or information within them, using text queries. The solution involves storing media files in Google Cloud Storage, creating object tables in BigQuery to reference these files, generating semantic embeddings for images and videos, and indexing these embeddings in BigQuery for efficient similarity search. The article provides detailed steps on how to implement this solution, including uploading media files to Cloud Storage, creating object tables in BigQuery, generating multimodal embeddings, creating a vector index for these embeddings, and performing similarity searches based on user queries. This system not only enhances user experience by streamlining content discovery across different media types but also presents potential market implications by setting a new standard for content search technology.
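The final step, ranking media by embedding similarity to a text query, can be illustrated in memory with cosine similarity. This sketch does not use BigQuery or a real embedding model: the three-dimensional vectors, file names, and the `search` helper are toy assumptions, standing in for the embeddings and vector index the article builds.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


# Toy "multimodal embeddings" for media files (hypothetical values).
INDEX = {
    "beach_sunset.jpg": [0.9, 0.1, 0.0],
    "city_traffic.mp4": [0.1, 0.9, 0.2],
    "mountain_hike.jpg": [0.6, 0.0, 0.8],
}


def search(query_vec, index, top_k=2):
    """Return the names of the top_k items most similar to the query vector."""
    ranked = sorted(
        index, key=lambda name: cosine(query_vec, index[name]), reverse=True
    )
    return ranked[:top_k]


# Pretend this vector is the embedding of a text query.
results = search([1.0, 0.0, 0.1], INDEX)
print(results)
```

Because text and media share one embedding space, the same ranking function works regardless of whether an item is an image or a video.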

Learn to Use the Gemini AI MultiModal Model

The article provides an in-depth overview of a newly published course on the freeCodeCamp.org YouTube channel, which is dedicated to teaching developers how to use Google's Gemini AI MultiModal Model. Led by Ania Kubow, the course covers essential topics such as the introduction to Gemini, setting up the development environment, exploring different Gemini models, and building an application that interprets images and answers questions. Advanced features like embedding generation are also briefly introduced, offering developers a comprehensive learning experience. The course is suitable for both AI beginners and experienced developers, providing practical knowledge and hands-on experience to help integrate Gemini's capabilities into real-world projects.

Unlocking Creativity with Prompt Engineering

This article delves into the art of prompt engineering, showing how it can significantly improve the efficiency and accuracy of large language models (LLMs) and AI applications. It begins by exploring the latest prompt engineering strategies, drawing on resources from major model companies and open-source communities to offer a detailed practical guide. The article then covers techniques for improving the quality of model-generated text, including clear and direct prompts, using examples, role-playing, labeling, and prompt chaining, and highlights specific practices employed by companies like Anthropic and Google. It also presents the CO-STAR framework as a way to further improve LLM output. Additionally, the article explores how prompt optimization can improve the quality of AI-generated code, particularly in Img2code (Image-to-Code) applications. Finally, the article emphasizes the importance of prompt engineering in large model applications, particularly for boosting development efficiency and fostering creativity, and shares practical experience from Alibaba Cloud's overseas business to demonstrate the real-world impact of these techniques.

How We Built Townie - A Full-Stack Application Generator [Translated]

This article describes the author's journey in building Townie, a full-stack application generator, leveraging large language models (LLMs) and various AI tools like Claude 3.5 Sonnet, Vercel v0, and Websim. The interaction with LLMs has revolutionized code generation, enabling the creation of full-stack applications complete with backend and database functionality. The author delves into key aspects of the building process, including model selection, database persistence, differential code generation, and UI integration. The article also explores strategies for optimizing model performance and controlling costs. It addresses challenges encountered during technical implementation and outlines solutions, ultimately demonstrating how these technologies can be integrated into a streamlined tool for simplifying full-stack development. Townie holds the potential to significantly advance end-user programming in the future.

10GB of VRAM, Fine-tuning Qwen2 with Unsloth and Inference with Ollama

This article demonstrates how to fine-tune the Qwen2 model using the Unsloth framework on free GPU resources provided by the ModelScope community, and how to run local inference with Ollama. The Unsloth framework, with kernels written in Triton, significantly improves training speed and reduces VRAM use while maintaining accuracy. The article provides detailed code examples and explanations covering setup, model selection, fine-tuning parameter settings, dataset preparation, model training, and final model export and local execution. It also explains how to use Ollama for model creation and inference, and how to install and start the Ollama service on Linux.

Survey: The AI wave continues to grow on software development teams

GitHub's latest survey of 2,000 software development professionals across four countries reveals that nearly all respondents have used AI coding tools. While individual adoption is high, organizational support varies significantly by region, with the U.S. demonstrating the most support and Germany the least. This discrepancy highlights a crucial need for companies to establish clear guidelines, policies, and infrastructure to effectively integrate AI into their workflows. The survey also identifies key benefits associated with AI coding tools, including improved code quality, faster onboarding, and enhanced test case generation. These benefits translate to significant time savings, allowing developers to focus on more strategic tasks like system design and collaboration. Despite the positive individual experiences, the survey emphasizes the need for a strategic organizational approach to maximize the potential of AI in software development, fostering trust, providing clear guidelines, and measuring outcomes.

Customized 'Black Myth: Wukong', Outperforming Midjourney, This AI Art Generator is Highly Captivating

Ideogram 2.0 is an AI image generation tool specializing in text rendering, offering a variety of image styles and a user-friendly interface. Its unique 'Magic Prompt' feature automatically translates and optimizes Chinese prompts, making it accessible to a wider audience. The article compares Ideogram with Midjourney, highlighting its advantages in generating specific images and exploring its potential applications in areas like poster design. Additionally, Ideogram has launched a mobile app, enhancing its convenience.

Z Product | a16z's Investment in 4 MIT Geniuses Pioneering AI Programming, OpenAI and Perplexity as Clients

Anysphere was founded by four graduates from the Massachusetts Institute of Technology's class of 2022, majoring in Computer Science and Mathematics. The company focuses on developing the AI code editor Cursor. Cursor aims to help programmers improve their coding efficiency through AI technology. It supports rapid code generation, analysis optimization, and online query functions. Several tech companies, including OpenAI and Perplexity, have adopted Cursor. In 2023, Anysphere received an $8 million seed round led by OpenAI. In July 2024, it received a new round of funding led by a16z, with a valuation of at least $400 million. The article details the functions of Cursor, including its intelligent code generation, multimodal intelligent generation, intelligent rewriting, and intelligent query, as well as the backgrounds of the Anysphere team members and the company's funding situation. Additionally, the article explores the market competition between Anysphere and giants like Microsoft, emphasizing the huge potential of the AI programming market and Anysphere's technological development direction.

Ten Thoughts on AI Super Apps: AI Alone Is Not Enough, We Also Need Humans

This article argues that the development of AI Super Apps requires human-machine collaboration. Relying solely on AI technology cannot meet the needs of the general public. AI products have a low market penetration rate because they have not truly solved users' problems. There are differences between AI and humans in providing value and emotional value, and Agent technology is still in the realm of science fiction. Constructing a win-win platform where all participants (developers, users, platforms) can benefit is a pragmatic path to the development of AI Super Apps.

Benchmarking Four Leading AI-powered PPT Generators: Frequent Image Errors and Data Visualization Mishaps

This article conducts an in-depth evaluation of four leading AI-powered PPT generators: Kimi, Xunfei Zhiwen, Baidu Wenku, and WPS AI. It explores the market landscape and key players in the field of AI-powered PPT generation and details the evaluation methodology. The results show that these AI tools have made strides in generation speed and certain content aspects but generally struggle with image accuracy and data visualization, failing to fully meet user expectations. The article further analyzes factors such as response speed, cost, template styles, user experience, and privacy protection, highlighting the challenges facing AI-powered PPT generation and the gap between technological advancements and user expectations.

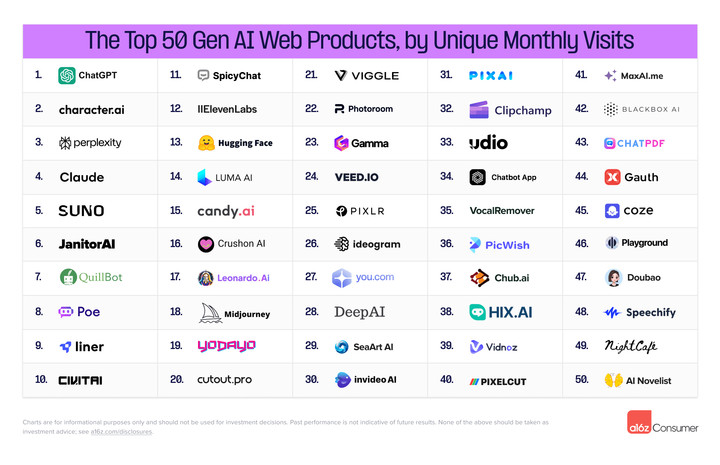

Global AI Products Top 100 Released! Only One Domestic App in the Top Ten, ByteDance Begins to Dominate the Rankings

The latest Global AI Products Top 100 list released by a16z shows that ChatGPT continues to dominate the general AI assistant field. Multiple ByteDance products are listed, demonstrating its extensive presence in the AI field. AI applications are expanding continuously in vertical fields such as image, music, and video creation, and are emerging in new categories like aesthetics and dating. The article highlights the intense competition and innovation in the AI product market, as well as the potential of AI technology to meet real needs.

Evaluating the Top 6 Smart Question Answering Software in China: Which One is the Best?

With the growing popularity of smart question answering software, various products have emerged in the market, each with its own features and advantages. This article evaluates six mainstream smart question answering products, from feature introductions to practical test cases, assessing their question answering capabilities and characteristics. It finds that Tongyi Qianwen and Tiangong stand out in feature richness and entertainment value, iFlytek Spark is strongest in agent capabilities, Kimi has the simplest and most straightforward interface, Wenxin Yiyan performs strongly in context analysis, and Doubao excels in text-to-image generation. Finally, the article summarizes the strengths and weaknesses of each product and expresses expectations for the future development of smart question answering software.

Real-world Testing of Four AI Image Generation Tools: Midjourney's Dominance Remains Unshaken

Ideogram 2.0 has seen significant improvements in text rendering, capable of generating longer and more accurate text images. Additionally, it has put considerable effort into photorealistic image generation, making realistic style images even more lifelike. Notably, Ideogram 2.0 has introduced a palette control feature, allowing users to precisely control image colors.

In the real-world testing comparison, Ideogram 2.0 performed well in photo realism, text rendering, and multi-style generation. However, considering the overall picture, Midjourney, with its strong technical capabilities and wide application, still holds the dominant position in the AI image generation field.

The founding team of Ideogram comes from Google and has a deep background in the AI field, providing a solid technical foundation for its development.

Top 20 AI-Generated Video Products Worldwide: Categorization and Application Scenarios

This article examines the top 20 AI-generated video products with the highest global traffic in June, encompassing various types from text-to-video to digital human video editing. The article delves into the commercial value and technical barriers of these products, highlighting that digital human video editing tools, short video clipping tools, and traditional video editing tools currently hold significant commercial value. Meanwhile, the article emphasizes that the potential of AI-generated video technology remains largely untapped, with most products relying on traditional AI techniques rather than the latest model-based Text-to-Video approaches. Furthermore, the article forecasts the future development trends and potential challenges of AI-generated video technology, emphasizing the importance of identifying vertical needs within technological advancements.

Z Product | Too Many AI APIs? This Platform Solves the Problem, Securing $8 Million in Seed Funding from US Investors

Substrate Labs is an AI infrastructure startup focused on optimizing AI model deployment efficiency. Their API platform enables the efficient construction and deployment of modular AI systems, optimizing infrastructure for high performance and ease of use. The platform aims to simplify the construction of complex AI workflows and save resources. The article details Substrate Labs' vision, technical advantages, market applications, and mentions their funding and founding team background.

ByteDance's Most Lucrative Overseas AI App: CapCut Surpasses 30 Million MAUs, Generating $1.25 Billion Annually

This article delves into the development and commercialization strategy of CapCut, an overseas AI video editing app developed by ByteDance. Since its launch in 2020, CapCut has rapidly garnered over 30 million MAUs in the international market, accounting for 81% of mobile video editing users, thanks to its user-friendly video editing features and diverse templates. As its user base expands, CapCut is accelerating its commercialization efforts, with its mobile app revenue reaching $1.25 billion by the end of July 2023. CapCut leverages TikTok's traffic advantage for user acquisition and builds brand awareness through SEO strategies. The integration of generative AI tools has further enhanced its functionality and commercial value, but high computing costs and market competition present challenges for CapCut. The article also explores CapCut's potential future market direction and impact.

Z Potentials | Exclusive Interview with Replika's Former AI Lead, Startup Secured A16Z Investment, Building a Multimodal Interaction Platform with Millions of Dollars in ARR

Artem Rodichev, the former AI Lead at Replika, founded Ex-Human and secured investment from Andreessen Horowitz (A16Z). Ex-Human is developing a multimodal interaction platform with APIs, combining B2C chatbot and image generation products with a B2B API business, aiming to reshape human-technology interaction. Through high-engagement products like Botify and Photify, Ex-Human has achieved significant success in the entertainment and image generation fields, and it also provides customized services for businesses. The company uses the large volume of user interaction data accumulated from its B2C products to continuously improve its technology, raising the quality and effectiveness of its B2B services. Additionally, Ex-Human builds models with strong emotional intelligence by combining open-source models with its own high-quality dialogue data, significantly increasing user engagement. Artem aims to create empathetic digital humans and AI characters that change how people interact with technology, and he anticipates that by 2030 interactions with digital humans will be more frequent than with real humans.

Z Product | How a Webcam Received a Significant Investment from OpenAI and Transitioned to the Next Generation of AI-Powered Creative Tools?

Opal Camera was founded in 2020, led by experienced founders Veeraj Chugh, Stefan Sohlstrom, and Kenneth Sweet, and specializes in developing high-quality webcams. During the COVID-19 pandemic, the company identified a pressing market demand for high-quality video and audio equipment and launched the Opal C1 and Opal Tadpole products, targeting professional users and the mobile office market, respectively. Opal Camera's technological innovations include using the Intel Myriad chip for real-time image processing and industry-first phase-detection autofocus, significantly enhancing image quality and performance in low-light conditions. In 2023 and 2024, Opal Camera completed Series A and B funding rounds of $17 million and $60 million, respectively, with the latter led by OpenAI, indicating OpenAI's interest in expanding the AI hardware sector. This investment not only provided financial support to Opal Camera but also marked its strategic transformation from a traditional webcam manufacturer to an AI-powered creative tools provider.

How to Commercialize AI Applications? 40 Leading AI Products Tell You

This article analyzes the pricing models of 40 leading AI products. The analysis explores the latest trends in AI application commercialization. The research covers aspects such as pricing models, value indicators, public announcements, free versions, and pricing transparency, indicating that most AI applications adopt subscription and user-based pricing methods. Despite this, the market demand for innovative pricing models remains strong, especially in the second wave of AI applications, where more innovative pricing structures may emerge. Moreover, the importance of free versions in promoting user adoption cannot be ignored, and pricing transparency varies significantly across different types of applications. This article provides valuable insights into AI product commercialization strategies and predicts future development directions.

Interview with Motiff's Zhang Haoran: Our Only Rival is Figma | Jiazi Guangnian

Motiff, a UI design SaaS company supported by Yuanli Technology, focuses on revolutionizing the UI design field through AI technology. The company considers Figma its only competitor and has launched several AI features, including the AI Toolbox, AI Component Organization System, and Motiff AI Lab. Motiff has also developed the Motiff Miao Duo Large Model, which has performed excellently in multiple UI interface scenario evaluations, even surpassing Google's ScreenAI Large Model. Motiff's founder, Zhang Haoran, emphasizes the importance of AI technology in design tools and explains how AI enhances design efficiency and user experience. Motiff's business strategy is to target large customers and plan for the global market, avoiding local deployment to maintain product iteration speed and AI capabilities.

Viggle: A Chinese AI Startup Disrupting Video Creation on Discord

Viggle AI, a startup focused on video generation technology led by Chinese entrepreneur Chu Hang, recently secured $19 million in early-stage funding led by Andreessen Horowitz. Starting from the Discord community, the company rapidly gained over 4 million users and launched its standalone app in March. Viggle utilizes its proprietary JST-1 model to empower users to create more realistic human movements and expressions. The article delves into Viggle's user base, technical features, market strategies, and challenges, including copyright issues and the legality of model training data. Notably, Viggle has achieved widespread traction on TikTok, showcasing strong user engagement and market potential. Looking ahead, Viggle plans to further enhance its technology, expand its features, and explore applications beyond entertainment.

Li Mu Returns to Alma Mater Shanghai Jiao Tong University, Discussing LLMs and Personal Career: Full Speech Transcript

Li Mu's speech at Shanghai Jiao Tong University explores the core components of language models, technical challenges, and personal career choices. He begins by analyzing the roles of computational resources, data, and algorithms in language models, predicting their future development. He emphasizes the memory bottleneck as a potential constraint on model size and efficiency, impacting the future of AI. He then delves into the development of multi-modal technology, particularly the progress and applications of speech, music, and image models. In terms of AI applications, Li Mu analyzes the current state and future potential of AI in white-collar, blue-collar, and technical jobs, highlighting the interplay between technology and social needs. Finally, he shares his personal career experiences, exploring the challenges and motivations associated with different paths, including working for a large company, pursuing a Ph.D., and entrepreneurship.

Mark Zuckerberg: Don't Fear Big Companies, 2/3 of Opportunities They Miss

In this interview, Mark Zuckerberg shared Meta's exploration and future plans in AR and AI, emphasizing that big companies, despite their resource advantages, often miss two-thirds of opportunities. He encouraged entrepreneurs to try multiple directions and revealed Meta's long-term vision in AR and AI, such as developing next-generation computing platforms and smart glasses. Zuckerberg also discussed the importance of open-source projects like LLaMA, sharing his entrepreneurial experiences and advice, and emphasizing the importance of staying flexible and avoiding early final decisions.

A16Z: The Core of AI+SaaS is Accelerating the Process of 'Software Becoming Labor'

Written by a16z partner Alex Rampell, this article delves into how AI is driving the software industry from traditional service models to more automated and intelligent labor models. Through historical analogies and specific cases, the article highlights the significant potential of AI in transforming software into labor. It also discusses how AI is changing the pricing model of software, moving from traditional per-seat fees to more flexible and efficient billing methods. Furthermore, the article explores AI applications in various industries such as human resources, accounting, healthcare, and sales, indicating that AI not only replaces some human tasks but also creates new job opportunities and markets.

General-purpose Humanoid Robots: A Year Away?

The 2024 World Robot Conference, held in Beijing, featured a range of humanoid robots, including Unitree Robotics' G1 and Zhiyuan Robotics' Expedition series, marking a significant step towards commercialization and mass production. As AI technology advances, the applications and technical approaches for humanoid robots are becoming increasingly diverse. Startups are leading the way by integrating hardware and software and leveraging data-driven methods to enhance robot generalization and intelligence. The article highlights the importance of 'hardware-software integration' for humanoid robots, emphasizing the need for seamless collaboration between physical capabilities and AI algorithms. It also discusses the challenges of integrating AI large models with robots, including data acquisition and generalization. Unitree Robotics' G1, a low-cost, high-performance humanoid robot, demonstrates the power of combined innovation and cost optimization. The article explores the role of simulation data and remote operation in robot training, highlighting their advantages and limitations. Finally, the article discusses the globalization strategies and hardware advantages of Chinese robot companies, suggesting a promising future for the industry.

AI Robot Revolution: Brett Adcock on Figure 2 and a Future World of 100 Billion Robots (2,000-word video included)

Brett Adcock, founder of Figure Robotics and Figure AI, demonstrates significant progress in humanoid robot technology with the release of Figure 2. Figure 2 boasts major hardware and design upgrades, including a threefold increase in CPU and GPU power, enhanced battery capacity, and the integration of six high-efficiency camera systems. Through collaborations with tech giants like OpenAI and Microsoft, Figure 2 incorporates advanced AI models, boosting the robot's intelligence and operational capabilities. Adcock predicts that by 2040, there will be 100 billion robots worldwide, fundamentally transforming the labor market and freeing humans from dangerous and monotonous tasks. He emphasizes that robot technology is not only a technological breakthrough but also a key driver for entering an era of abundance, and expresses concern about China's rise in the robotics field.

Hassabis: Google Aims to Create a Second Transformer and Integrate AlphaGo with Gemini

This article, published by Machine Heart, presents Demis Hassabis's account of the latest developments in Google's AI work. It highlights Google DeepMind's efforts to push the boundaries of AI technology after the merger of Google Brain and DeepMind, including the invention of new architectures and improvements in multi-modal understanding, long-term memory, and reasoning capabilities. Hassabis then explores the ability of AI systems to learn from vast amounts of data and generalize patterns, addressing both the hype and the underestimation surrounding AI. He emphasizes the multi-modal processing capabilities and innovative long-context memory of the Gemini project, outlining plans to integrate AlphaGo and Gemini, marking a new direction for AI applications. Finally, Hassabis stresses the need for AI ethics and regulation, particularly regarding open-source model security and intelligent system safety. He proposes penetration testing as a crucial method to ensure AI technology stability and security.

From Dial-up to a $5.5 Billion Start-up: An In-Depth Interview with the Transformer Author on AI Trends

Aidan Gomez shares his in-depth insights on the challenges and future development trends of AI companies. He discusses the ineffective but low-risk approach of expanding model scale, emphasizing the importance of exploring other development paths, such as data collection and application layer innovation. He also points out that relying solely on model APIs for profit will be challenging and that the application layer is more attractive. Furthermore, he predicts that AI technology will lead to breakthroughs in humanoid robots and stresses the importance of data quality in building AI technology.

Zhang Hongjiang: My Eight Observations and Opinions on AI and Large Models | AI Light-year

Dr. Zhang Hongjiang detailed his eight main observations on AI and Large Models during a sharing session in Silicon Valley. He emphasized the scaling law of Large Models and their central role in AI development, discussing the shift of computational focus from CPUs to GPUs and its impact on data center architecture. Dr. Zhang also pointed out that Large Models, acting as operating systems, will establish new ecosystems and drive the restructuring of the software industry. He analyzed the application levels of Large Models and their future development stages, particularly the rise of personalized and To B applications. The session also explored how entrepreneurs can choose between Large Models and Small Models, as well as market opportunities for Large Model investments. He predicted that multi-modal Large Models are key to achieving AGI and will greatly empower robotics technology and drive the development of general-purpose robots.

Zoom Founder Eric Yuan: How Zoom Is Using AI to Grow After a $150 Billion Market Value Drop?

In an exclusive interview, Zoom founder Eric Yuan reflects on the company's journey from its pandemic-era peak to a substantial market value decline. He outlines how AI technology is driving new growth. Yuan emphasizes Zoom's transformation into an AI-centric collaboration platform, featuring AI virtual avatar functionality and productivity tools to compete with Microsoft and Google in the SaaS market. He highlights Zoom's success factors, including video quality, user-friendliness, and mobile support, while acknowledging the positive influence of Silicon Valley culture on his entrepreneurial journey. The article explores the potential of AI to automate daily tasks, boost work efficiency, and reshape future work patterns, while emphasizing the importance of AI security and privacy protection.

Tencent's Hun Yuan Large-Scale MoE Model Lead Wang Di: Unveiling the Billion-Scale MoE System Engineering Path | Expert Interview

In the Expert Interview, Wang Di, General Manager of Tencent's Machine Learning Platform Department, delved into how Tencent built a billion-scale MoE large model from scratch and shared valuable practical experience. Wang Di emphasized that large model development requires the integration of engineering, algorithms, data, and business applications, and that optimization strategies such as Scaling Law for Mixture-of-Experts Models can improve efficiency and effectiveness under limited resources. Tencent leverages its rich application scenarios to continuously enhance model universality and performance through fine-tuning and data feedback loops, supporting hundreds of internal businesses through its Tai Chi Hun Yuan Platform. Wang Di believes that future AI infrastructure will develop towards greater generalization and platformization, providing users with more convenient and low-cost AI computing capabilities.

Coding Alone Is Outdated: How Microsoft Engineers Are Winning the AI Breakthrough?

This article delves into Microsoft's latest advancements in the AI-Generated Content (AIGC) field, including the integration of GPT series models into GitHub Copilot and Office, and the optimization of large model efficiency through enhanced computational methods. The article also underscores the importance of responsible AI, highlighting the need for process restructuring and effective data utilization as AI applications become more widespread. Additionally, Microsoft engineer Wei Qing discusses the evolving role of programmers, emphasizing the need for deeper technical understanding, industry knowledge, and a pragmatic approach to future trends.

Depth | Cohere Founder's Latest Thoughts: Model Development Is Getting Harder! AI's Next Big Breakthrough Will Be in Robotics, Models Need to Be More Powerful and Affordable

This article delves into Cohere founder Aidan Gomez's latest insights on AI model development. He points out that despite the increasing difficulty and cost of developing AI models, data quality remains paramount. Gomez predicts that AI's next major breakthrough will occur in robotics, demanding more powerful and affordable models. He further emphasizes the significance of AI technology in boosting productivity, discussing market demand, changes in the semiconductor supply chain, voice as the next-generation user interface, and the future relationship between AI technology and humans. The article also explores the challenges of AI model development, cost issues, market acceptance of new technologies, and the non-linear cost and difficulty in distinguishing between model generations.

Deep Interview with Andrew Ng: According to the Standard Definition of AGI, We Still Need Decades

Andrew Ng delves into various aspects of AI in an interview, including the rapid development of Generative AI, its impact on jobs, ethical issues, and the future of AGI. He mentions that Generative AI has significantly reduced development costs and complexity, enabling more new applications to be realized. Ng believes that AI will replace some repetitive jobs but will not completely replace humans; the key lies in enhancing workers' AI skills. He also emphasizes the importance of AI ethics, noting that these issues are typically application-related. When discussing AGI, Ng believes that according to the standard AGI definition, achieving AGI still requires decades. Additionally, Ng has promoted the global accessibility of AI education through Coursera and DeepLearning.AI, helping people acquire the skills needed to use AI.

Rewind Founder Shares Honest Experience: How to Secure Over 1,000 Investment Intentions with a 7-Minute Video?

This article, shared by Limitless AI founder Dan Siroker, delves into his experiences as a serial entrepreneur, covering aspects like rapid idea validation, strategic pivoting, team management, and effective financing strategies. Siroker highlights the advantages of serial entrepreneurs in their ability to focus on key tasks and leverage accumulated experience, particularly in minimizing time wasted on non-essential activities. The article further explores how to select suitable investors, utilize social media to attract investment opportunities, and manage financing timelines, providing comprehensive practical guidance for entrepreneurs.

After 7 Years of AI Companionship, How Does Replika's Founder See the Future of This Field?

Replika is an AI companion app founded in 2017, aiming to provide emotional support and social interaction to help users overcome emotional difficulties. Founder Eugenia Kuyda emphasizes Replika's goal of creating a new type of AI companion relationship, similar to a pet's role, rather than replacing human relationships. Replika's users are primarily highly active and over 35 years old, and the app offers multiple interaction methods such as text, voice, augmented reality, and virtual reality. Its business model does not rely on user data but focuses on improving dialogue quality to enhance the service. The Replika team collaborates across disciplines to improve user experience and product functionality, shifting from simple emotional support to building a companion that helps users move towards a happier life.

10 Lessons from Zuckerberg: Building a $100 Million AI Business in 9 Months

Noah Kagan, a former early employee of Facebook, learned 10 valuable lessons from Mark Zuckerberg during his 9 months at the company. These lessons helped him establish AppSumo, a software product promotion and marketing platform generating $100 million in annual revenue. Key takeaways include: 1) Focusing on a single goal; 2) Accelerating; 3) Hiring only the best employees; 4) Treating employees well; 5) Following one's own path; 6) Paying attention to details; 7) Empowering the team; 8) Treating them as 'people' not 'users'; 9) Retaining only the right people; 10) Taking a long-term view. These lessons cover various aspects from strategic goals to team management and personal growth, providing valuable insights for readers.

AIGC Weekly #86: Ideogram 2.0 with Powerful Typography and Non-Transformer Models Catching Up

This article, from 'The AI Knowledge Vault,' explores several recent developments in the AIGC field. It introduces Ideogram 2.0, an image generation tool that supports intricate typography and color control, making it suitable for designs like marketing posters. The article then discusses AI21's Jamba 1.5 series models, which are the first non-Transformer models to match the performance of leading models. These models feature a 256K effective context window and support multiple languages. Additionally, the article covers updates and releases of various AI tools and models, including ComfyUI's version update, Zed AI code editor's release, and Vercel V0's upgrade, showcasing AI's progress in image generation, code editing, and interface design. Finally, the article mentions a16z's AI application Top 100 ranking and explores how to evaluate the effectiveness of large language models.

Why SaaS Flourished in the US While China Dominated Super Apps in the Past Decade?

This article compares the tech development strategies of Silicon Valley and China in the 2010s, highlighting the rise of US SaaS companies and China's dominance of integrated platform apps. While both countries achieved success, they also faced failures. Software, through the SaaS model, has deeply penetrated enterprise business processes, reshaping traditional industries. Meanwhile, China's integrated platform apps, like WeChat and Alipay, have formed a near-monopoly, influencing the direction of China's tech industry. The article further explores the differences in consumer internet and enterprise internet development paths between China and the US, as well as the ecological and investment logic behind these differences. Finally, it emphasizes the value of China's consumer internet experience for SaaS companies and the importance of adjusting mindset during the AI cycle, calling for diversifying early-stage investment ecosystems to support AI entrepreneurs targeting the global market.