BestBlogs.dev Highlights Issue #18

👋 Dear friends, welcome to this week's curated selection of AI articles from BestBlogs.dev!

🚀 This week, we've seen continued momentum in the AI field, with advancements ranging from model performance improvements to innovative real-world applications. Let's dive into these exciting developments!

💫 Week's Highlights

- MiniMax shows strong multimodal capabilities, and Reflection 70B scores 99.2% on the GSM8K math benchmark

- AI coding tools gain traction, substantially boosting developer productivity

- Alipay unveils "Zhi Xiaobao," showcasing the potential of AI-native applications

- YC founder Paul Graham introduces the "Founder Mode" concept, sparking industry-wide discussions

🧠 AI Model Breakthroughs: Enhanced Performance and Multimodal Integration

- Google introduces controlled generation for Gemini 1.5, making AI output more controllable and predictable

- Researchers develop a method to convert Llama to Mamba, significantly enhancing inference speed and efficiency

- MiniMax demonstrates powerful multimodal capabilities, while Reflection 70B achieves an impressive 99.2% accuracy on the GSM8K math test

⚡ AI Development Innovations: Productivity Boost and Tool Evolution

- AI coding tools see widespread adoption, with a survey of over 200 developers exploring real-world use cases and efficiency gains

- Novel AI Agent design patterns like REWOO and Plan-and-Execute optimize system planning and execution

- GraphRAG technology excels in complex queries, offering deeper and more nuanced information retrieval compared to traditional RAG

💡 AI Applications in Action: Enhancing User Experience and Exploring Business Models

- Alipay's "Zhi Xiaobao" demonstrates AI's seamless integration into daily life, streamlining user interactions

- ByteDance's Gauth educational app surpasses 200 million users, ranking second in the education category behind Duolingo

- NightCafe AI art platform reports $2 million in annual net profit, highlighting the commercial viability of AI creative tools

🌐 AI Industry Trends: Startup Boom and Future Outlook

- YC founder Paul Graham proposes the "Founder Mode" concept, challenging traditional management models for AI-era startups

- AI startup SSI reaches a $5 billion valuation in just three months, underscoring investor enthusiasm for AI safety

- 1X unveils NEO Beta, a consumer-grade humanoid robot, signaling AI's growing role in the home

Intrigued by these AI developments? Click through to read the full articles and explore more in-depth content!

Table of Contents

- Mastering Controlled Generation with Gemini 1.5: Schema Adherence for Developers

- Training Llama into Mamba in 3 Days: Faster Inference Without Performance Loss

- Gemma explained: PaliGemma architecture

- Mini Transformer Evolution: MiniCPM 3.0 Open Source! 4B Parameters Surpass GPT-3.5 Performance, Infinite Length Text, Powerful RAG Trio! Model Inference and Fine-tuning in Action!

- Open-Source Large Model Reflection 70B Outperforms GPT-4o, New Technology Enables Self-Correction, Achieves 99.2% Accuracy in Mathematics

- Fine-tune FLUX.1 to create images of yourself

- Tencent Hunyuan Tops Chinese Large Model Evaluation, Leading the Pack!

- 3Blue1Brown's Viral Video Explains How LLMs Store Facts with Intuitive Animations

- AI Coding Landscape: Exploring LLM Programming Capabilities, Popular Tools, and Developer Feedback

- Guide for AI Engineers: Who Am I, Where Do I Come From, Where Am I Going? | A Conversation with SiliconFlow Founder Yuan Jinhui and Independent Developer idoubi

- Practical Guide to Large Language Models: AI Agent Design Pattern – REWOO

- AI Large Model Practical Series: AI Agent Design Pattern – Plan and Execute

- Databricks announces significant improvements to the built-in LLM judges in Agent Evaluation

- Evaluating prompts at scale with Prompt Management and Prompt Flows for Amazon Bedrock

- LlamaIndex Newsletter 2024-09-03

- How to Build a RAG Pipeline with LlamaIndex

- Build reliable agents in JavaScript with LangGraph.js v0.2: Now supporting Cloud and Studio

- The Future of AI Programmers | Interview with Cognition Founder Scott Wu and Q&A (with Video)

- Master Multimodal Data Analysis with LLMs and Python

- Li Ge Team from Peking University Proposes a Novel Method for Large Model-Based Unit Test Generation, Significantly Enhancing Code Test Coverage

- Pieter Levels: 13 Years, 70 Startups, A Legend of Independent Development

- 20,000 Words to Clarify: How Difficult is it to Develop AI Products Now?

- How to Become a Product Manager Who 'Understands' AI: A Comprehensive Guide

- Alipay Launches New AI-Powered App 'ZhiXiaobao' to Supercharge Daily Life

- A 9-Person Company, Creating Text-to-Image Art, Boasts 25 Million Users and $2 Million in Annual Net Profit

- Unstructured.io: Making Enterprise Unstructured Data LLM-Ready

- Another ByteDance AI Product is Booming Overseas, Ranking Second in the Education Category, Just Behind Duolingo

- Z Product | Coursera Vice President's AI Online Learning Startup: 20x Completion Rate, $10M Funding, and Addressing the AI-driven Education Revolution

- Z Potentials | Qu Xiaoyin: From Stanford Dropout to AI Education Startup, Heeyo, Backed by OpenAI, Creates Personalized Tutors and Playmates for Kids

- Interview with Digital Artist Shisan: The Journey from Designer to Founding an AI Studio

- Challenging the Managerial Mindset: What is the 'Founder Mode' Buzzing in Silicon Valley?

- Andrej Karpathy's Deep Dive: From Autonomous Driving to Educational Revolution, Exploring AI's Role in Shaping the Future of Humanity

- Mid-Year Review: The Big Six of Large Models - A Survival Status Check

- Z Product | Former DeepMind Scientist and AlphaGo Engineer Team Up, Secure Sequoia's First Round Investment, Build Personal AI Agent with Zero Programming Experience

- Domestic Trendy Community Company Incubates Global AI Image Generator with 3 Million+ Monthly Visits | A Conversation with the Frontline

- Ilya Birthed a $5 Billion AI Unicorn with a 10-Person Team in Just Three Months! SSI Breaks From OpenAI's Model with a Focus on AI Safety.

- What Human Abilities Cannot Be Replaced by AI in the Next Decade

- 1X Unveils Consumer-Grade Humanoid Robot NEO Beta, OpenAI Completes Training of New Reasoning Model, and Other Top AI News from Last Week #86

- Technology Enthusiast Weekly (Issue 316): The Story of Your Life

Mastering Controlled Generation with Gemini 1.5: Schema Adherence for Developers

Google has introduced Controlled Generation for Gemini 1.5, a feature that makes AI-generated output more controllable and predictable. Developers define a response schema that dictates the exact format and structure of the model's responses, which keeps output consistent and reduces the need for post-processing. Because the model can return machine-readable data such as JSON, its output can be integrated directly into existing systems. Controlled generation builds on Google's controlled decoding technology and accepts schema definitions based on OpenAPI 3.0, which supports compatibility and standardization. It is especially useful for applications that need structured output, such as meal-planning apps or product-condition classifiers. Google notes that the feature adds minimal latency to API calls and that schema enforcement is privacy-preserving, making it a practical addition to any developer's toolkit.
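Controlled generation enforces the schema during decoding on Google's side, so the sketch below does not call Gemini at all. Instead, under our own assumptions, it illustrates what "schema adherence" means in practice: a hypothetical product-condition schema written in the OpenAPI 3.0 style (only `type`, `properties`, `required`, `enum`, and `items`) and a small client-side checker, `validate`, that reports where a JSON reply deviates from it. Both names are illustrative and are not part of Google's SDK.

```python
from typing import Any

# Hypothetical schema for a product-condition classifier, written in the
# OpenAPI 3.0 style (type / properties / required / enum / items only).
PRODUCT_SCHEMA: dict[str, Any] = {
    "type": "object",
    "properties": {
        "product_name": {"type": "string"},
        "condition": {"type": "string", "enum": ["new", "like new", "used"]},
        "defects": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["product_name", "condition"],
}

# Map schema type names to Python types ("number" accepts int or float).
_TYPES: dict[str, Any] = {
    "string": str, "integer": int, "number": (int, float),
    "boolean": bool, "array": list, "object": dict,
}


def validate(value: Any, schema: dict[str, Any], path: str = "$") -> list[str]:
    """Return a list of violations; an empty list means the value conforms."""
    kind = schema["type"]
    # bool is a subclass of int in Python, so reject it for numeric types.
    if not isinstance(value, _TYPES[kind]) or (
        isinstance(value, bool) and kind in ("integer", "number")
    ):
        return [f"{path}: expected {kind}"]
    errors: list[str] = []
    if "enum" in schema and value not in schema["enum"]:
        errors.append(f"{path}: {value!r} not in {schema['enum']}")
    if kind == "object":
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}: missing required field {key!r}")
        for key, sub in schema.get("properties", {}).items():
            if key in value:
                errors.extend(validate(value[key], sub, f"{path}.{key}"))
    elif kind == "array" and "items" in schema:
        for i, item in enumerate(value):
            errors.extend(validate(item, schema["items"], f"{path}[{i}]"))
    return errors


# Example: check a JSON reply before handing it to downstream code.
reply = {"product_name": "desk lamp", "condition": "used", "defects": ["scratched base"]}
print(validate(reply, PRODUCT_SCHEMA))  # prints []
```

With controlled generation enabled, a check like this should rarely fail; it mainly serves as a guard when prompts, models, or schemas change.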

Training Llama into Mamba in 3 Days: Faster Inference Without Performance Loss

Researchers from Cornell University, Princeton University, and other institutions show how to convert the Transformer-based Llama model into a Mamba model, and introduce a new speculative decoding algorithm to speed up inference. They use a progressive distillation strategy that gradually replaces the attention layers, and design the speculative decoding algorithm around hardware characteristics. This lets the Mamba model maintain performance while substantially increasing inference speed. Experiments show that, using only 20 billion training tokens, the method achieves results comparable to training a Mamba model from scratch. The paper also details the changes to the model architecture, the training process, and hardware-specific optimizations.
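The paper's decoding algorithm is hardware-aware and tailored to its distilled models; the sketch below shows only the generic greedy form of speculative decoding that such algorithms build on. `target` and `draft` are hypothetical stand-ins for a large and a small model, each returning the greedy next token for a given prefix.

```python
from typing import Callable, Sequence

# A "model" here is any function mapping a token prefix to its greedy next token.
NextToken = Callable[[Sequence[int]], int]


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: list[int], max_new: int, k: int = 4) -> list[int]:
    """Greedy speculative decoding: the cheap draft model proposes up to k tokens,
    the target model checks them, and the longest agreeing prefix is kept.
    The result is identical to greedy decoding with the target alone."""
    tokens = list(prompt)
    goal = len(prompt) + max_new
    while len(tokens) < goal:
        # 1. Draft tokens autoregressively with the cheap model.
        proposal: list[int] = []
        for _ in range(min(k, goal - len(tokens))):
            proposal.append(draft(tokens + proposal))
        # 2. Verify against the target (a real system does this in one batched pass).
        accepted: list[int] = []
        for guess in proposal:
            expected = target(tokens + accepted)
            if guess != expected:
                accepted.append(expected)  # the target's token replaces the first miss
                break
            accepted.append(guess)
        tokens.extend(accepted)  # always at least one new token per round
    return tokens
```

The speedup comes from step 2: the target scores all drafted tokens in a single forward pass instead of one pass per token, so every accepted guess saves a sequential call to the large model.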

Gemma explained: PaliGemma architecture

The article examines the architecture of PaliGemma, a lightweight open vision-language model (VLM) inspired by PaLI-3 and built from open components: the SigLIP vision model and the Gemma language model. PaliGemma accepts both image and text inputs and produces text output, and the article points to a fine-tuning guide on Google's AI developer platform. Architecturally, PaliGemma adds a vision model to the base Gemma model: a SigLIP image encoder whose outputs, together with the text tokens, are processed by a dedicated Gemma 2B decoder. The components were first trained independently and then trained together. The article also demonstrates PaliGemma's object segmentation abilities with practical examples, illustrating its versatility across a range of tasks.
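To make "image tokens processed together with text tokens" concrete, here is a NumPy sketch under stated assumptions: a single linear projection maps image-encoder features to the language model's embedding width, and attention follows a prefix-LM scheme (full attention over image and prompt tokens, causal attention over generated tokens), which is how we understand the PaliGemma paper. The `build_inputs` helper and all dimensions are hypothetical toy values, not PaliGemma's real configuration.

```python
import numpy as np


def build_inputs(image_features: np.ndarray, proj: np.ndarray,
                 prefix_emb: np.ndarray, suffix_emb: np.ndarray):
    """Concatenate projected image tokens with text embeddings and build a
    prefix-LM attention mask (mask[i, j] is True if token i may attend to j)."""
    image_tokens = image_features @ proj  # (n_img, d_text)
    seq = np.concatenate([image_tokens, prefix_emb, suffix_emb], axis=0)
    n_prefix = image_tokens.shape[0] + prefix_emb.shape[0]
    n = seq.shape[0]
    mask = np.tril(np.ones((n, n), dtype=bool))  # start from a causal mask
    # Image + prompt tokens attend to each other bidirectionally; generated
    # suffix tokens remain causal but see the whole prefix.
    mask[:n_prefix, :n_prefix] = True
    return seq, mask


rng = np.random.default_rng(0)
seq, mask = build_inputs(
    image_features=rng.normal(size=(4, 6)),  # 4 image patches, toy vision width 6
    proj=rng.normal(size=(6, 8)),            # toy projection to text width 8
    prefix_emb=rng.normal(size=(3, 8)),      # 3 prompt tokens
    suffix_emb=rng.normal(size=(2, 8)),      # 2 output tokens
)
print(seq.shape)  # (9, 8)
```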

Mini Transformer Evolution: MiniCPM 3.0 Open Source! 4B Parameters Surpass GPT-3.5 Performance, Infinite Length Text, Powerful RAG Trio! Model Inference and Fine-tuning in Action!

MiniCPM 3.0 is the latest generation of the 'Mini Cannon' series of base models from ModelBest. With 4B parameters, it outperforms GPT-3.5, and after quantization it occupies only 2GB of memory, making it well suited to edge applications. Its key features include:

- Infinite Length Text Processing: MiniCPM 3.0 works around the context-length limits of large models by using LLMxMapReduce, a framework for processing long texts in chunks.

- Powerful Function Calling: MiniCPM 3.0 performs close to GPT-4o on the Berkeley Function-Calling Leaderboard, making it suitable for on-device agent applications.

- RAG Trio: MiniCPM 3.0 includes MiniCPM-Embedding (retrieval model), MiniCPM-Reranker (reranking model), and a LoRA plugin specifically designed for RAG scenarios. This combination outperforms other industry-leading models like Llama3-8B and Baichuan2-13B.
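The source describes LLMxMapReduce only at a high level, so the sketch below shows the generic divide-and-conquer idea behind the name rather than its actual algorithm. `llm` is a hypothetical callable (prompt in, completion out), and chunking by characters is a simplification; a real system would split by tokens.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out


def chunk(text: str, size: int) -> list[str]:
    """Split text into fixed-size character chunks."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def map_reduce_answer(llm: LLM, document: str, question: str,
                      chunk_size: int = 2000) -> str:
    # Map: each chunk fits in the context window and is processed independently.
    partials = [
        llm(f"Context:\n{c}\n\nExtract what is relevant to: {question}")
        for c in chunk(document, chunk_size)
    ]
    # Reduce: merge the per-chunk findings into one final answer.
    joined = "\n".join(f"- {p}" for p in partials if p)
    return llm(f"Findings:\n{joined}\n\nAnswer the question: {question}")
```

Because each map call sees only one chunk, document length is bounded by the number of calls rather than by the context window; the trade-off is that evidence spread across chunks must survive the reduce step.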

The article links to MiniCPM 3.0's open-source repository, describes its capabilities, and provides practical code for inference and fine-tuning, demonstrating best practices for using the model in the ModelScope (魔搭) community, a leading AI community in China.

Open-Source Large Model Reflection 70B Outperforms GPT-4o, New Technology Enables Self-Correction, Achieves 99.2% Accuracy in Mathematics

This article highlights the open-source large model Reflection 70B, developed by a small startup team. The model uses a new training technique called Reflection-Tuning, which lets it reflect on and correct its own errors during reasoning, improving accuracy and reliability. On the GSM8K math benchmark, Reflection 70B scored 99.2%, surpassing several leading models, including GPT-4o. The result drew wide attention in the industry and high praise from OpenAI scientists. The article also covers the team's background, related products, and plans to release a larger version, Reflection 405B. The success of Reflection 70B shows what small teams can achieve in AI and brings new momentum to the open-source community.

Fine-tune FLUX.1 to create images of yourself

The article introduces the FLUX.1 family of image generation models, highlighting their superior quality compared to existing open-source models like Stable Diffusion. It emphasizes the ease of fine-tuning these models on the Replicate platform, even for users without extensive technical expertise. The guide walks through the steps required to fine-tune FLUX.1 using personal images, including gathering training images, choosing a unique trigger word, and training the model. Additionally, it discusses how integrating language models can enhance prompt generation, ultimately allowing users to create more imaginative images. The article concludes with suggestions for iterating and having fun with the fine-tuned model.

Tencent Hunyuan Tops Chinese Large Model Evaluation, Leading the Pack!

In August 2024, the SuperCLUE benchmark for Chinese large models released its latest evaluation report. Tencent Hunyuan ranked first among domestic models, excelling in eight of eleven core tasks. The article attributes this to its Mixture of Experts (MoE) architecture, which improves performance while lowering inference costs. Hunyuan performs strongly in science, liberal arts, and hard tasks; on hard tasks it scored 74.33, the only domestic model to exceed 70 points. As the industry evolves rapidly, Hunyuan and other domestic models are narrowing the gap with leading international models and pushing for real-world deployment. Hunyuan has already been integrated into nearly 700 business scenarios, demonstrating its broad applicability.
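The claim that MoE improves performance while lowering inference cost comes down to sparse routing: each token activates only a few experts. The sketch below is a generic top-k routed MoE layer with hypothetical sizes and ReLU feed-forward experts; it is not Hunyuan's design, whose expert count and routing details the source does not describe.

```python
import numpy as np


def moe_layer(x: np.ndarray, gate_w: np.ndarray,
              experts: list[tuple[np.ndarray, np.ndarray]], top_k: int = 2) -> np.ndarray:
    """Sparse MoE forward pass for tokens x of shape (n_tokens, d).
    Each token is routed to its top_k experts; the others are never evaluated,
    so per-token compute stays flat as the total number of experts grows."""
    logits = x @ gate_w                       # (n_tokens, n_experts) router scores
    out = np.zeros_like(x)
    for t in range(x.shape[0]):
        top = np.argsort(logits[t])[-top_k:]  # indices of the chosen experts
        weights = np.exp(logits[t, top] - logits[t, top].max())
        weights /= weights.sum()              # softmax over the chosen experts only
        for w, e in zip(weights, top):
            w_in, w_out = experts[e]
            out[t] += w * (np.maximum(x[t] @ w_in, 0.0) @ w_out)  # ReLU FFN expert
    return out


rng = np.random.default_rng(0)
d, n_experts = 4, 8
experts = [(rng.normal(size=(d, 16)), rng.normal(size=(16, d))) for _ in range(n_experts)]
y = moe_layer(rng.normal(size=(3, d)), rng.normal(size=(d, n_experts)), experts, top_k=2)
print(y.shape)  # (3, 4)
```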

3Blue1Brown's Viral Video Explains How LLMs Store Facts with Intuitive Animations

This article explains how large language models (LLMs) store factual information, drawing on Lesson 7 of 3Blue1Brown's 'Deep Learning' course. Using the example 'Michael Jordan's sport is basketball', it shows how such specific knowledge can be stored among a model's billions of parameters. The article first reviews how Transformers work and then explains the role of multilayer perceptrons (MLPs), showing how they process and store information through a sequence of operations. It also tallies the parameters in GPT-3 and briefly introduces superposition, a hypothesis about how a model can represent more features than it has dimensions, which bears on interpretability and scalability. Overall, the animations give readers an intuitive picture of LLM internals and highlight the educational value of 3Blue1Brown's resources.
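Here is a toy version of the mechanism, under the simplifying assumption that each concept is its own orthogonal direction (superposition is precisely about relaxing that assumption). A single ReLU neuron acts as a "Michael Jordan" detector: its bias keeps it silent unless both name directions are present, and its down-projection adds a "basketball" direction to the residual stream. The second function repeats the parameter arithmetic for GPT-3's MLP blocks using its published sizes (d_model = 12288, 96 layers, hidden width 4 × d_model), ignoring biases. All variable names are illustrative.

```python
import numpy as np

# Toy residual-stream basis: each direction stands for one concept (hypothetical).
D = 4
MICHAEL, JORDAN, BASKETBALL, OTHER = np.eye(D)

# One MLP neuron: the up-projection row gives 2 when both names are present,
# and the bias of -1 keeps the ReLU at 0 when only one of them is.
W_up = (MICHAEL + JORDAN)[None, :]  # (1 neuron, D)
b_up = np.array([-1.0])
W_down = BASKETBALL[:, None]        # (D, 1 neuron): the neuron writes "basketball"


def mlp(x: np.ndarray) -> np.ndarray:
    hidden = np.maximum(W_up @ x + b_up, 0.0)  # ReLU
    return x + W_down @ hidden                 # added back to the residual stream


def gpt3_mlp_params(d_model: int = 12288, n_layers: int = 96) -> int:
    """Weights in GPT-3's MLP blocks: up (d x 4d) plus down (4d x d) per layer."""
    d_ff = 4 * d_model
    return n_layers * 2 * d_model * d_ff


print(f"{gpt3_mlp_params():,}")  # 115,964,116,992
```

That is about 116 billion weights, roughly two-thirds of GPT-3's 175 billion parameters, which is why the MLP blocks are a natural place to look for stored facts.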

AI Coding Landscape: Exploring LLM Programming Capabilities, Popular Tools, and Developer Feedback

The article, from the ShowMeAI Research Center, surveys the evolving landscape of AI coding tools. The rise of tools like Cursor marks a pragmatic shift toward practical applications of AI in coding. The article lists the most popular AI coding tools by category (LLMs, chatbots, web tools, and so on) and points to several leaderboards and evaluation sites for comparing the programming abilities of different models. Drawing on a survey of more than 200 programmers, it examines real-world use cases and challenges, such as high learning costs and the need for better accuracy. Finally, it presents a complete AI coding workflow with practical prompts and shows how senior algorithm engineers use AI for programming and research, illustrating AI's potential to change coding practice.

Guide for AI Engineers: Who Am I, Where Do I Come From, Where Am I Going? | A Conversation with SiliconFlow Founder Yuan Jinhui and Independent Developer idoubi

This article is an episode of the 'Crossroads' podcast in which SiliconFlow founder Yuan Jinhui and independent developer idoubi discuss the current state and future direction of AI engineering careers, along with the challenges and opportunities of applying AI in practice. It stresses how quickly the industry is changing, the variety of career paths open to AI engineers, and the importance of AI-native applications. idoubi describes how AI makes application development more efficient and discusses trends in prompt engineering and low-code development. The conversation also covers demand for full-stack engineers and how the engineer's role is changing in the AI era, and closes by emphasizing self-innovation and personal growth in entrepreneurship.

Practical Guide to Large Language Models: AI Agent Design Pattern – REWOO

This article, written by Feng and published on the 'Everyone's a Product Manager' platform, explains the REWOO method among AI agent design patterns. REWOO (Reasoning WithOut Observation) optimizes the ReAct pattern by decoupling reasoning from observation and using a modular design to cut redundant computation and token consumption. The article reviews ReAct's shortcomings, introduces the REWOO architecture with its Planner, Worker, and Solver components, and walks through actual source code. Because the Planner generates the complete tool chain in a single pass, REWOO improves efficiency and accuracy and simplifies fine-tuning, but it depends heavily on the Planner's planning ability. The article therefore suggests that improving the agent's accuracy requires a mechanism for adjusting the plan.
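The Planner, Worker, and Solver split can be sketched in a few functions. This is not the article's source code: the plan syntax (`#E1 = Tool[input]`) follows the convention of the ReWOO paper's prompts as we understand it, and the plan text and tool names are hypothetical. The key property is that the Worker runs every tool call without going back to the LLM, and only the Solver makes a final model call.

```python
import re
from typing import Callable

# A Planner reply in the REWOO style: each step names a tool and may cite earlier
# evidence (#E1, #E2, ...). The plan text and tool names are hypothetical.
PLAN = """\
#E1 = Search[2023 revenue of company A]
#E2 = Search[2023 revenue of company B]
#E3 = Calculator[#E1 - #E2]"""

STEP = re.compile(r"(#E\d+)\s*=\s*(\w+)\[(.*)\]")
EVIDENCE_REF = re.compile(r"#E\d+")


def run_worker(plan: str, tools: dict[str, Callable[[str], str]]) -> dict[str, str]:
    """Worker: execute every planned step in order, substituting earlier evidence.
    No LLM is consulted between steps, which is where REWOO saves tokens."""
    evidence: dict[str, str] = {}
    for line in plan.splitlines():
        match = STEP.match(line.strip())
        if match is None:
            continue  # ignore free-text reasoning lines
        var, tool, arg = match.groups()
        # Replace whole references like #E1 with the evidence gathered so far.
        arg = EVIDENCE_REF.sub(lambda m: evidence.get(m.group(0), m.group(0)), arg)
        evidence[var] = tools[tool](arg)
    return evidence


def solver_prompt(task: str, plan: str, evidence: dict[str, str]) -> str:
    """Solver: one final LLM call sees the task, the plan, and all evidence."""
    facts = "\n".join(f"{k} = {v}" for k, v in evidence.items())
    return f"Task: {task}\nPlan:\n{plan}\nEvidence:\n{facts}\nAnswer:"
```

Because the plan is fixed up front, a wrong step cannot be corrected mid-run, which is exactly the Planner dependency the article points out.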

AI Large Model Practical Series: AI Agent Design Pattern – Plan and Execute

This article, part of the 'AI Large Model Practical Series', covers the Plan-and-Execute method among AI agent design patterns. It first revisits the ReWOO design pattern from the previous article, noting its reliance on the planner's accuracy, and introduces a Replan mechanism to make agents more adaptable and flexible. Plan-and-Execute plans before executing and adjusts the plan dynamically based on execution results. Its architecture has three components with distinct responsibilities: a planner, an executor, and a replanner. The article gives detailed source code for building the executor, defining the system state, designing the planner and replanner, and assembling the full workflow graph. Finally, it discusses the pattern's advantages and disadvantages and suggests further optimizations, such as using a directed acyclic graph (DAG) to execute tasks in parallel.
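The planner, executor, and replanner loop can be sketched in plain Python. The article's own source code differs; the `State` fields and the three callables here are hypothetical stand-ins (in practice each would wrap an LLM or tool call), chosen only to show where replanning happens.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class State:
    task: str
    plan: list[str]
    past_steps: list[tuple[str, str]] = field(default_factory=list)
    response: Optional[str] = None


# Hypothetical signatures: planner(task) -> steps; executor(step, state) -> result;
# replanner(state) -> (final answer or None, revised remaining plan).
Planner = Callable[[str], list[str]]
Executor = Callable[[str, State], str]
Replanner = Callable[[State], tuple[Optional[str], list[str]]]


def plan_and_execute(task: str, planner: Planner, executor: Executor,
                     replanner: Replanner, max_steps: int = 10) -> State:
    state = State(task=task, plan=planner(task))
    for _ in range(max_steps):  # hard cap guards against endless replanning
        if not state.plan:
            break
        step = state.plan[0]
        state.past_steps.append((step, executor(step, state)))
        # The replanner sees what happened and either finishes or revises the plan.
        answer, new_plan = replanner(state)
        if answer is not None:
            state.response = answer
            break
        state.plan = new_plan
    return state
```

Executing one step at a time before replanning is what separates this pattern from REWOO's single fixed plan; the DAG optimization the article mentions would relax this by running independent steps concurrently.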

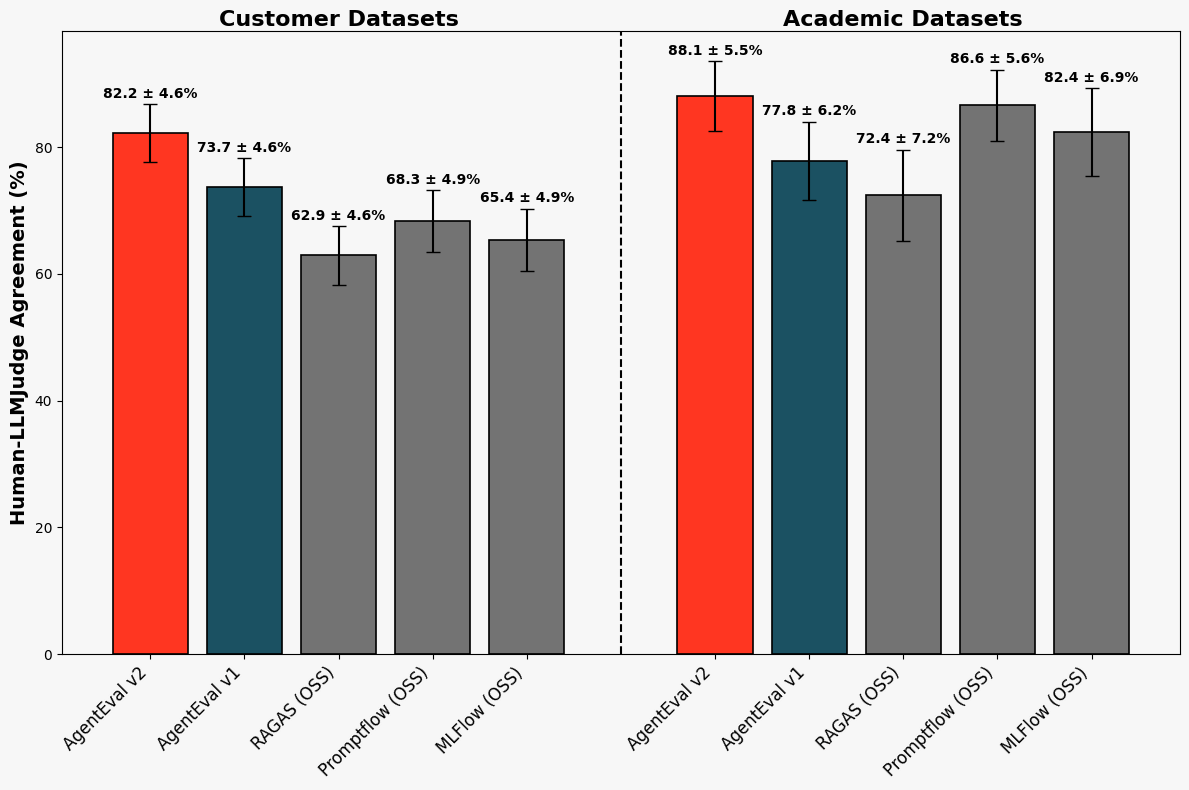

Databricks announces significant improvements to the built-in LLM judges in Agent Evaluation

Databricks has announced substantial enhancements to the built-in LLM judges within its Agent Evaluation framework. Agent Evaluation is designed to help Databricks customers define, measure, and improve the quality of generative AI applications, particularly in industry-specific contexts dealing with complex, open-ended questions and long-form answers. The framework addresses the challenge of evaluating the quality of ML outputs by employing both human subject-matter experts and automated LLM judges. The new answer-correctness judge, which is now available to all customers, provides significant improvements over previous versions and baseline systems, especially in handling customer-representative use cases. This judge evaluates the semantic correctness of generated answers by focusing on the inclusion of specific facts and claims, rather than relying on ambiguous similarity metrics. The evaluation methodology includes comparisons against academic and industry datasets, demonstrating strong agreement with human labelers and outperforming existing baselines.
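The shift from similarity scores to fact inclusion can be illustrated with a small sketch. It is not Databricks' judge or API: `answer_correctness` and `naive_judge` are hypothetical, and the substring check is a deliberately crude placeholder for the LLM that would decide whether each expected fact is semantically supported by the answer.

```python
from typing import Callable

# Hypothetical judge: returns True if `answer` supports `fact`. In a real system
# an LLM plays this role; a naive substring check stands in here.
FactJudge = Callable[[str, str], bool]


def naive_judge(answer: str, fact: str) -> bool:
    return fact.lower() in answer.lower()


def answer_correctness(answer: str, expected_facts: list[str],
                       judge: FactJudge = naive_judge) -> dict:
    """Correct only if every expected fact is supported; report what is missing."""
    missing = [f for f in expected_facts if not judge(answer, f)]
    return {"correct": not missing, "missing_facts": missing}
```

Listing the missing facts, rather than returning a single similarity number, tells a developer exactly what the answer got wrong.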

Evaluating prompts at scale with Prompt Management and Prompt Flows for Amazon Bedrock

The article discusses the importance of prompt engineering in generative AI applications and its impact on their quality, performance, cost efficiency, and user experience. It presents prompt evaluation as a critical part of building high-quality AI-powered solutions, then shows how to implement an automated prompt evaluation system using Amazon Bedrock's Prompt Management and Prompt Flows. The system uses the LLM-as-a-judge method to evaluate prompts against predefined criteria and returns a numerical score, which makes evaluation standardized and automatable. A step-by-step guide covers setting up the evaluation prompt and flow, including creating a prompt template, selecting models, and configuring inference parameters. The article also discusses best practices for prompt refinement, such as iterative improvement, providing context, being specific, and testing edge cases. It concludes that this systematic approach to prompt evaluation and optimization can improve the quality and consistency of AI-generated content, streamline development, and potentially reduce costs.
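The core of the LLM-as-a-judge loop (fill an evaluation template, ask a judge model for a numeric score, aggregate across test cases) can be sketched independently of Bedrock. This is not the Bedrock Prompt Management or Prompt Flows API: `generate` and `judge` are hypothetical callables that would wrap model invocations, and the template wording and 0-100 scale are our own assumptions.

```python
import re
from statistics import mean
from typing import Callable, Optional

# Hypothetical evaluation template; {input} and {output} are filled per test case.
EVAL_TEMPLATE = """You are an expert evaluator. Rate the response to the input
on relevance, accuracy, and clarity. Reply with a line "Score: N" where N is 0-100.

Input: {input}
Response: {output}"""

SCORE = re.compile(r"Score:\s*(\d+)")


def parse_score(judge_reply: str) -> Optional[int]:
    match = SCORE.search(judge_reply)
    if match is None:
        return None  # unparseable replies are skipped rather than guessed
    return max(0, min(100, int(match.group(1))))  # clamp to the 0-100 scale


def evaluate_prompt(cases: list[dict[str, str]], generate: Callable[[str], str],
                    judge: Callable[[str], str]) -> float:
    """Average judge score for one candidate prompt over a test set."""
    scores = []
    for case in cases:
        output = generate(case["input"])
        reply = judge(EVAL_TEMPLATE.format(input=case["input"], output=output))
        score = parse_score(reply)
        if score is not None:
            scores.append(score)
    return mean(scores) if scores else 0.0
```

Running `evaluate_prompt` once per candidate prompt over the same test cases gives comparable numbers, which is what makes iterative refinement measurable.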

LlamaIndex Newsletter 2024-09-03

This week's LlamaIndex newsletter showcases significant developments in AI frameworks, such as the Dynamic RAG Retrieval Guide, which enhances context retrieval efficiency. The newsletter also features an Auto-Document Retrieval Guide, a tutorial on building Agentic Report Generation Systems, and a comprehensive overview of Workflows. A notable case study highlights GymNation's deployment of AI agents, leading to enhanced sales and customer service outcomes. Additionally, community contributions and upcoming events, including hackathons and podcasts, are presented to engage the audience further.

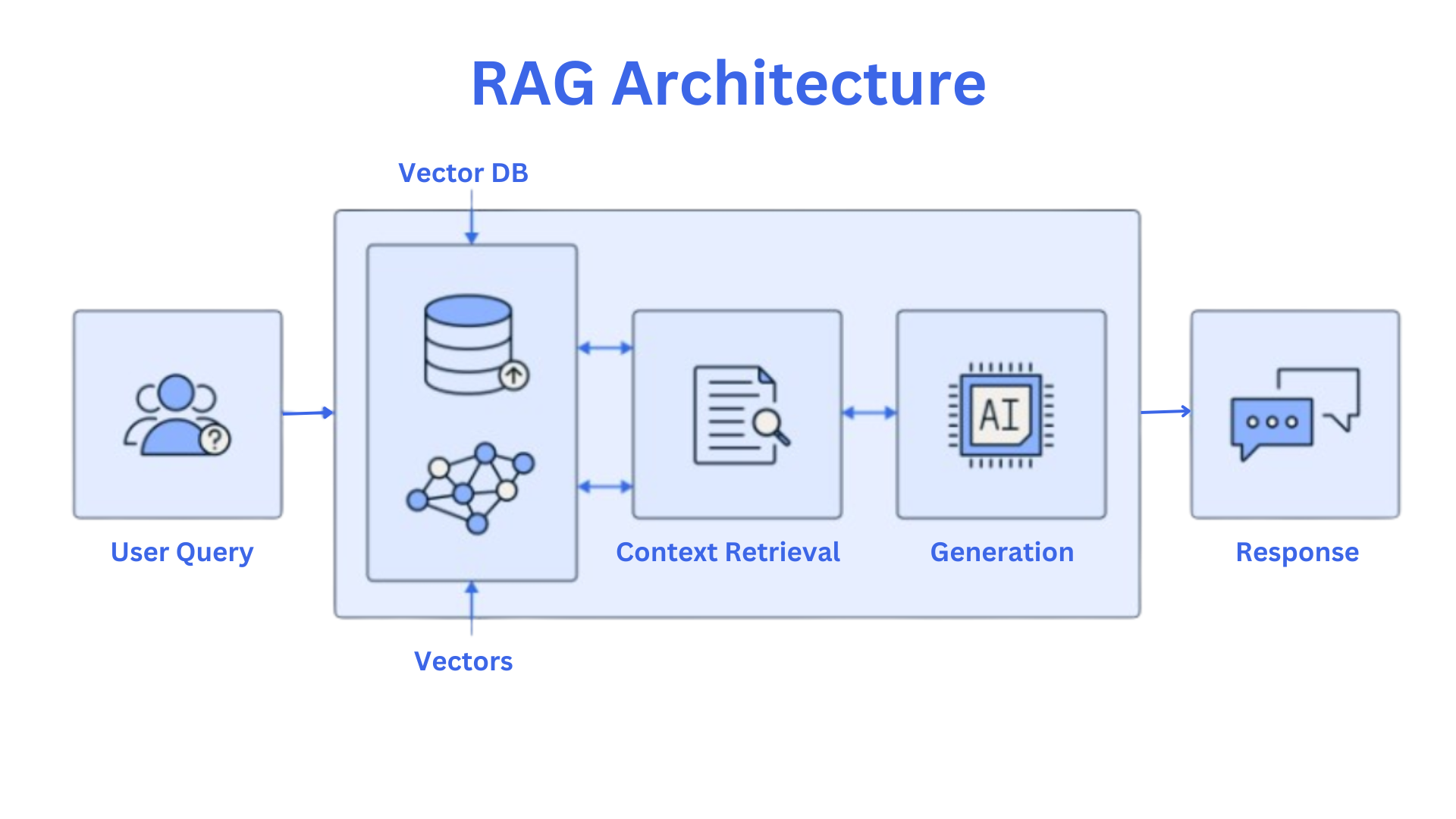

How to Build a RAG Pipeline with LlamaIndex

The article explains how to build a Retrieval-Augmented Generation (RAG) pipeline using LlamaIndex, a framework designed to improve the accuracy and reliability of large language models (LLMs) like those powering ChatGPT. It addresses hallucination, where models generate factually incorrect or misleading text. RAG mitigates this problem by retrieving information from a large knowledge base, helping ground responses in real-world facts. The article outlines the components of a RAG pipeline (vector databases, embedding models, and language models) and explains how LlamaIndex connects them. It then provides a step-by-step guide to setting up the pipeline with Python and IBM watsonx, covering embedding creation, retrieval, contextualization, and response generation. It also discusses tuning the pipeline for better performance and evaluating it with metrics such as accuracy, relevance, coherence, and factuality. Finally, it highlights real-world applications of RAG pipelines, such as customer support chatbots and knowledge base search.
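Stripped of LlamaIndex and watsonx, the retrieve-then-generate flow reduces to a few steps: embed the documents and the query, rank by similarity, and place the top matches in the prompt. The sketch below is framework-free and illustrative only: the bag-of-words `embed` is a toy stand-in for an embedding model, a sorted list stands in for the vector database, the documents are hypothetical, and the final LLM call is left out.

```python
import math
import re
from collections import Counter

DOCS = [  # hypothetical knowledge base
    "Refunds are issued within 14 days of a return being received.",
    "Standard shipping takes 3 to 5 business days.",
    "Premium members get free express shipping.",
]


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real pipeline uses an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by similarity to the query and keep the top k."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    # Contextualization: ground the LLM in retrieved text before it answers.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

In a real pipeline, document embeddings are computed once and stored in the vector database, so only the query is embedded at request time.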

Build reliable agents in JavaScript with LangGraph.js v0.2: Now supporting Cloud and Studio

The article announces the release of LangGraph.js v0.2.0, a JavaScript/TypeScript framework for building LLM-powered agents. New features include flexible streaming of intermediate steps and chat-model messages, a built-in checkpointing system for debugging errors and rewinding state, first-class human-in-the-loop support for updating state and interrupting runs, and parallel node execution. LangGraph.js also now supports beta versions of LangGraph Studio, an agent IDE, and LangGraph Cloud, a scalable deployment infrastructure. These tools aim to improve the responsiveness, resilience, and access control of applications, and the framework runs across JavaScript runtimes, including browsers. Together with LangSmith integration for detailed tracing and checkpointing in LangGraph Cloud, and input from the community, the release targets specific development challenges.

The Future of AI Programmers | Interview with Cognition Founder Scott Wu and Q&A (with Video)

Cognition founder Scott Wu delves into the comprehensive programming capabilities of AI programmer Devin, including its ability to write code, browse the web, execute commands, and make complex decisions. This signifies a new direction for AI-assisted programming. He envisions future software engineers as a blend of product managers and technical architects, focusing on problem decomposition and high-level design while delegating coding tasks to AI. Wu also explores the challenges and opportunities of AI entrepreneurship, emphasizing the need for entrepreneurs to possess foresight and adaptability, making strategic bets on technology before it matures. He believes that the adoption and practical application of AI will be a gradual process rather than a sudden shift. He anticipates the emergence of a few large platform companies driven by AI technology, with numerous applications built upon them.

Master Multimodal Data Analysis with LLMs and Python

The article presents a newly published course on the freeCodeCamp.org YouTube channel, focusing on multimodal data analysis using Large Language Models (LLMs) and Python. Developed by Dr. Immanuel Trummer, an associate professor at Cornell University, the course offers in-depth insights into advanced techniques for analyzing various types of data. Key topics include text classification, image analysis, audio data processing, and natural language querying of databases. The course stands out for its hands-on learning approach, multimodal coverage, expert instruction, and comprehensive resources. Designed for data scientists, machine learning engineers, and anyone interested in AI-powered data analysis, it provides practical skills and a solid foundation for real-world applications, emphasizing its potential industry impact and practical application scenarios.

Li Ge Team from Peking University Proposes a Novel Method for Large Model-Based Unit Test Generation, Significantly Enhancing Code Test Coverage

In their latest research, Li Ge's team at Peking University introduces HITS, a method that uses program slicing to raise the coverage large models achieve when generating unit tests for complex functions. HITS decomposes a complex function into several simpler slices based on semantics, then has the large model generate targeted test cases for each slice, which makes analysis easier and increases coverage. Through in-context learning, HITS leverages the model's language understanding to help it reason about intermediate execution states and generate more effective test cases. Experiments show that HITS significantly outperforms both other LLM-based unit test generation methods and traditional methods in code coverage on complex functions. Beyond making unit testing more efficient and effective, the technique may help development teams find and fix bugs earlier and improve the quality of delivered software.

Pieter Levels: 13 Years, 70 Startups, A Legend of Independent Development

Pieter Levels, a self-taught independent developer, has launched 70 startup projects over 13 years, successfully operating 40 of them, with 4 achieving significant success. Levels emphasizes quick action and transparent development, achieving a 5% success rate through extensive trial and rapid iteration. He advocates for keeping products simple to quickly validate market demand, believing that the technology stack is less important than meeting user needs in the early stages. His entrepreneurial approach involves identifying problems from daily life and solving them through coding, traveling to gain new perspectives and solutions, and exploring and innovating with new technologies like AI. Levels' experience highlights the importance of streamlining processes and rapid implementation, showcasing the commercial potential of AI technology.

20,000 Words to Clarify: How Difficult is it to Develop AI Products Now?

This article examines the difficulties of AI product development from several angles: using large model APIs, designing around large and small models, context window limits, data acquisition and security mechanisms, cross-modal processing, and applications of RAG (Retrieval-Augmented Generation) and AutoGPT. It argues that the success of an AI product depends not only on advanced technology but also on close collaboration between product managers and engineers and a deep understanding of how media and content are transformed. It also discusses the commercial value and future direction of AI products, cautioning against over-reliance on large models and recommending instead that teams optimize the overall system by integrating practical tools and data.

How to Become a Product Manager Who 'Understands' AI: A Comprehensive Guide

The article highlights the crucial role of AI in product development, focusing on the engineering of large language models (LLMs) and their limitations. Product managers need a solid understanding of the cost, performance, and response speed of LLMs, as well as the constraints imposed by context windows. The article examines the challenges LLMs face in practice, such as hallucinations and their impact on user experience. The author also discusses the practical uses and limitations of technologies like RAG (Retrieval-Augmented Generation) and AutoGPT, and urges product managers choosing AI solutions to weigh user value as a whole: how the new experience compares with the old one, and what it costs users to switch.

Alipay Launches New AI-Powered App 'ZhiXiaobao' to Supercharge Daily Life

Alipay, a national-scale app that has served over a billion users for 20 years, has expanded its services to cover payments, travel, wealth management, healthcare, and government services. Building on advances in AI, it has launched 'ZhiXiaobao', a new AI-native app designed to improve users' everyday-services experience. 'ZhiXiaobao' does more than understand natural-language requests: it acts on them directly, booking tickets, ordering meals, and making top-ups, which greatly simplifies what users have to do. The article examines the app's core functions and design philosophy, centered on the shift from understanding (Chat) to taking action (Act). Using ACT technology, 'ZhiXiaobao' mimics human interaction through screen perception and simulated execution, so users can complete complex operations simply by describing what they want. Alipay has further improved the large model's action capability and user experience through multimodal data collection and Function Call optimization, enabling 'ZhiXiaobao' to understand requests and call the appropriate tools more efficiently. Alipay's AI strategy goes beyond investing in AI: it applies AI across all its operations to strengthen the service capabilities of its existing platform. The article also highlights Alipay's open agent-ecosystem plan, which offers a one-stop agent development platform called 'Treasure Box' that lets businesses and institutions quickly build their own service agents, promoting AI adoption across industries.

A 9-Person Company, Creating Text-to-Image Art, Boasts 25 Million Users and $2 Million in Annual Net Profit

NightCafe, founded by Angus and Elle Russell, is a unique AI art generation platform with 25 million users and $2 million in annual net profit. The platform has achieved a self-sustaining business model through its point system and community building. By aggregating various image models and providing fine-tuning capabilities, it empowers users to easily create art. NightCafe takes a cautious approach to AI art copyright issues, using user agreements and manual review to avoid legal risks. The company has chosen to avoid external investment, focusing on becoming a leading model aggregator and building a strong community and social center. NightCafe actively addresses the challenges of technology commodification to maintain its competitive edge in the AI art field.

Unstructured.io: Making Enterprise Unstructured Data LLM-Ready

Unstructured.io specializes in processing unstructured data, helping enterprises deploy large language models (LLMs) effectively through its refined data ingestion technology. It has partnered with several government bodies, including the U.S. Air Force, demonstrating its strengths in data security and precise processing. The company offers several products to meet different customer needs: an open-source Python library, a commercial API, and an enterprise platform. Despite intense market competition, its strong team and deep understanding of the needs of large enterprises and government agencies support its commercialization. Future developments in multi-step agents and multimodal technology may open up further opportunities for the company.

Another ByteDance AI Product Is Booming Overseas, Ranking Second in the Education Category, Just Behind Duolingo

Gauth is an AI education app from ByteDance that initially focused on helping students solve basic high school math problems and has since expanded to subjects such as chemistry and physics. Powered by OpenAI's large models, Gauth has attracted over 200 million student users and has been downloaded millions of times. It has maintained a 4.8-star rating on both the Apple App Store and Google Play. Beyond AI problem solving, Gauth offers human tutors through a paid 'Plus' tier, enhancing the user experience. Its success rests not only on technology but also on increasing the time users spend in the app, which indirectly boosts TikTok's user engagement and advertising revenue. Gauth's rapid rise also faces challenges from tense US-China relations, and its prospects warrant close attention.

Z Product | Former Coursera VP's AI Online Learning Startup Uplimit: 20x Completion Rates, $11M in Funding, and the AI-Driven Education Revolution

Uplimit, co-founded by former Coursera Vice President Julia Stiglitz, is an AI-powered online learning platform dedicated to improving both the quality and the scalability of online education. Its mission is to close the gap between course quality and scale by using AI for personalized content, streamlined feedback, and learner engagement, freeing instructors to focus on providing inspiration, connection, and insight. Uplimit offers a suite of AI tools that help instructors create and update courses quickly while giving students a better learning experience, including AI role-play and learning-assistant features. The platform has increased course completion rates 15-20x, attracting prominent enterprise customers such as Kraft Heinz, GE Healthcare, Procore, and Databricks. The core team has extensive experience in online learning and cutting-edge technology development, and all members previously worked at Coursera. On July 19th, Uplimit closed an $11 million Series A round led by Salesforce Ventures, which it will use to further apply AI in education, raise course completion rates, and improve the learning experience.

Z Potentials | Qu Xiaoyin: From Stanford Dropout to AI Education Startup, Heeyo, Backed by OpenAI, Creates Personalized Tutors and Playmates for Kids

Qu Xiaoyin, founder of Heeyo AI, shares her entrepreneurial journey and the development of her AI education products, highlighting the significant role AI can play in personalized education and child development. Heeyo offers personalized AI learning companions that support and guide children aged 3 to 11, aiming to stimulate their curiosity and creativity. Qu emphasizes the importance of balancing parents' expectations with children's interests in product development, and discusses challenges in personalized education, including compliance and parental trust. The article also explores AI's potential in psychological counseling, especially in meeting individual needs and providing emotional support.

Interview with Digital Artist Shisan: The Journey from Designer to Founding an AI Studio

This interview traces digital artist Shisan's transformation from traditional designer to AI designer and explores how his studio, 'AI Haven', integrates AI into design. Shisan discusses how he uses AI to improve design efficiency, spark creative ideas, and explore fields such as brand design, film concepts, and interactive experiences. He also describes his experience in AI design competitions, shares his views on the future of AI-generated content (AIGC), and offers advice for designers. He stresses AI's growing importance in the design industry and suggests how designers can embrace it to build new models of human-computer collaboration.

Challenging the Managerial Mindset: What is the 'Founder Mode' Buzzing in Silicon Valley?

This article, inspired by YC co-founder Paul Graham's essay 'Founder Mode', discusses why founders should not simply copy the management practices of large companies. Graham points out that the conventional advice, that founders should run their companies like professional managers, often leads to inefficiency. He proposes 'Founder Mode', in which founders stay directly involved in running the company and attend to details, as exemplified by Airbnb CEO Brian Chesky. Chesky's deep involvement in every aspect of the company has helped Airbnb achieve rapid growth and stable cash flow. The article further contrasts Founder Mode with Managerial Mode, arguing that Founder Mode is more complex but more effective and helps companies stay competitive. It also cites comments from a number of founders and managers on how Founder Mode works in practice, its potential pitfalls, and how well it applies across industries.

Andrej Karpathy's Deep Dive: From Autonomous Driving to Educational Revolution, Exploring AI's Role in Shaping the Future of Humanity

Andrej Karpathy, in a recent interview, explored the cutting-edge applications of AI, including autonomous driving, educational revolution, humanoid robots, and Transformer models. He stressed that AI should serve as a tool to empower humans, not replace them, and predicted that future AI models will be smaller and more efficient, potentially composed of multiple specialized models. Karpathy also discussed the distinct challenges faced by Tesla and Waymo in the autonomous driving field, as well as the technological transition from autonomous driving to humanoid robots. He highlighted the transformative impact of the Transformer architecture in AI, making neural network training and application more versatile and efficient. Furthermore, Karpathy explored the potential of AI in education, particularly its ability to amplify the influence of excellent teachers, leading to globalized and personalized learning experiences. He also predicted that in a post-AGI society, education will become more entertainment-focused, with subjects like mathematics, physics, and computer science forming the core of future education.

Mid-Year Review: The Big Six of Large Models - A Survival Status Check

This article reviews where China's 'Big Six' large-model startups (Zhipu AI, Baichuan AI, 01.AI, Moonshot AI, MiniMax, and StepFun) stood in mid-2024. Facing slowing gains in model capability, these companies are competing for users through differentiated product strategies and aggressive marketing. Despite uncertainty over commercialization and pressure to raise funding, they are sustaining their competitive positions through heavy investment and proactive market strategies. The article also examines the companies' differing B2B and B2C strategies, the challenges they face in recruiting talent and managing internally, and the specific technical innovations each has pursued.

Z Product | Former DeepMind Scientist and AlphaGo Engineer Team Up, Secure First-Round Funding from Sequoia to Build Personal AI Agents That Require Zero Programming Experience

Reflection AI, a startup founded by former DeepMind scientist Misha Laskin and AlphaGo engineer Ioannis Antonoglou, develops AI agents that users can build without any programming experience. The company has secured a $100 million investment from Sequoia Capital and aims to create a 'powerful general agent' capable of automating complex knowledge work. Unlike plain language models, AI agents can invoke tools, keep memory, and reason, which lets them store past interactions, plan future actions, and improve the accuracy of their responses through feedback. Reflection AI offers an easy-to-use platform where users can create and customize their own AI agents with plain-language prompts, tailoring the agents to their own needs. The platform also provides monitoring and analytics tools to help users refine their agents' behavior.

Chinese Trend Community Company Incubates Global AI Image Generator with 3 Million+ Monthly Visits | A Conversation with the Frontline

This article examines Tensor.Art's background, development, and distinctive strategies in the AI image generation market. Incubated by Echo Technology, Tensor.Art was initially positioned as a model marketplace built on the open-source Stable Diffusion ecosystem. While traffic across the sector declined overall, it grew against the trend by investing in its community and technical support. The article also analyzes Tensor.Art's commercialization strategy, particularly its separate service models for individual and enterprise users, along with its work on technical optimization and cost control, which together underpin its competitiveness and market potential in AI image generation.

Ilya Sutskever Builds a $5 Billion AI Unicorn with a 10-Person Team in Just Three Months: SSI Breaks from OpenAI's Model to Focus on AI Safety

This article reports on Safe Superintelligence (SSI), Ilya Sutskever's new company. Just three months after its founding, SSI closed a $1 billion funding round at a $5 billion valuation. SSI aims to develop safe superintelligence and uses a conventional for-profit structure, a departure from OpenAI's nonprofit-controlled model. The article details SSI's funding, team, technical direction, and emphasis on AI safety. Sutskever stresses that SSI will focus on the AI alignment problem: ensuring that AI systems act in line with human values and do not pose existential risks. SSI also plans to work with cloud providers and chip makers to meet its computing needs. Overall, the article presents SSI's founding as a new chapter in AI safety: despite the technical challenges, its commitment to safety and its novel approach could drive new developments in AI.

What Human Abilities Cannot Be Replaced by AI in the Next Decade

The article examines how humans can preserve their uniqueness and competitiveness amid the rapid development of artificial intelligence (AI). First, it argues that the rise of AI is not merely a revolution in physical or mental labor but a profound socioeconomic transformation, and it stresses the importance of human 'cognitive strength' in dealing with complexity, uncertainty, and the unknown. Next, it lists the core human abilities that AI will struggle to replace over the next decade: a well-rounded personality, a positive attitude toward life, curiosity, creativity, and skill in managing emotions and relationships. It also predicts a shift in work patterns in which humans move from 'operators' to 'thinkers', collaborating with AI while concentrating on higher-level thinking and innovation. Finally, it argues that these 'unchanging' abilities will shape humanity's future in the AI era and encourages readers to hold on to these enduring values.

1X Unveils Consumer Humanoid Robot NEO Beta, OpenAI Finishes Training New Reasoning Model, and Other Top AI News from Last Week #86

Last week brought a series of significant AI advances, from a consumer humanoid robot and a new AI reasoning model to new multimodal LLMs and video generation models. 1X introduced its consumer robot NEO Beta, which uses the company's proprietary 'tendon-actuated' design, with deliveries expected in 2025. OpenAI has reportedly trained a new reasoning model called Strawberry, intended to generate high-quality synthetic data for training its next-generation model, Orion. Magic released LTM-2-mini, a model with a 100M-token context window, along with HashHop, a new evaluation method for testing how well models handle extremely long contexts. Alibaba also open-sourced the multimodal LLM Qwen2-VL, which performs strongly on multiple visual understanding benchmarks, handles images of varying resolutions and aspect ratios, and can understand videos over 20 minutes long. Together, these developments show AI's potential in everyday life and push forward multimodal learning and video understanding.

Technology Enthusiast Weekly (Issue 316): Story of Your Life

This is Issue 316 of Ruan Yifeng's Technology Enthusiast Weekly. It opens with a discussion of Ted Chiang's science fiction novella 'Story of Your Life' and its film adaptation, 'Arrival', then introduces the idea of using AI to create a personalized autobiography: wearing a camera that captures photos throughout the day and sending them to OpenAI's models to generate a narrative. The issue also covers technology news, including a design for astronaut tails to help with balance in space, fake watermelons used to conceal illegal substances, and how ultrasonic coffee is made. Finally, it recommends a range of resources, from GitLab static content hosting to the AI code editor Cursor.