BestBlogs.dev Highlights Issue #22

Subscribe Now👋 Dear readers, welcome to this week's curated article selection from BestBlogs.dev!

🚀 This week, the AI field has once again witnessed an exhilarating wave of innovations. From model breakthroughs to practical applications, AI technology is driving advancements across various domains at an unprecedented pace. Yi-Lightning by Zero One Infinity and Meta's Agent-as-a-Judge showcase significant progress in large-scale pre-training and intelligent agent evaluation. Mistral AI's compact models and Alibaba International AI's Ovis1.6 exemplify innovations in balancing efficiency and performance. On the development front, HKU's LightRAG and Alibaba Cloud's Spring AI Alibaba offer developers more efficient solutions for building AI applications. In terms of products, from Google's NotebookLM to Rabbit's R1 hardware, AI is reshaping our work methods and daily experiences. Join us as we explore these exciting AI developments!

💫 Weekly Highlights

- Zero One Infinity unveils its flagship pre-trained model Yi-Lightning, boasting significant improvements in performance and inference speed

- Meta introduces the Agent-as-a-Judge framework, revolutionizing intelligent agent evaluation methods

- Mistral AI releases Ministral 3B and 8B compact models, excelling in performance and cost-effectiveness

- Alibaba International AI open-sources Ovis1.6 multimodal large model, outperforming GPT-4o-mini in multiple benchmarks

- HKU's Huang Chao team open-sources the LightRAG system, substantially reducing costs for retrieval-augmented generation

- Alibaba Cloud open-sources Spring AI Alibaba framework, empowering Java developers to rapidly build AI applications

- Google's NotebookLM project demonstrates the future potential of AI collaboration tools

- Rabbit founder Lu Chen shares insights on R1 hardware product's market performance and future strategies

- Sequoia Capital releases its annual AI industry report, analyzing the profound impact of the o1 model on the industry

- NVIDIA CEO Jensen Huang discusses the company's development strategy and his vision for AI's future

Eager to delve deeper into these fascinating AI developments? Click to read the full articles and explore more groundbreaking AI innovations!

Table of Contents

- 01.AI Releases Flagship Pre-trained Model, Kai-Fu Lee Addresses Rumors of China's AI Six Little Tigers' Challenges: Funding and Chips Are Not the Issue

- Let's Get This Done! Meta Releases Agent-as-a-Judge

- The Strongest Small Models: Ministral 3B/8B Released

- Alibaba International Open-Sources Ovis1.6, Outperforming GPT-4o-mini in Multiple Areas!

- NeurIPS 2024 | OCR-Omni: A Unified Multimodal Text Understanding and Generation Large Model from ByteDance and East China Normal University

- Dualformer: Integrating Fast and Slow Thinking for Complex Tasks

- Real-world Testing of 13 SORA-inspired Video Generation Models: A Comprehensive Analysis of Over 8000 Cases

- 92-Page Llama 3.1 Technical Report: A Summary

- LightRAG: A Simple and Efficient RAG System Developed by Huang Chao's Team at the University of Hong Kong, Significantly Reducing the Cost of Retrieval Augmentation for Large Language Models

- Alibaba Cloud Open-Sources AI Application Development Framework: Spring AI Alibaba

- How Shopify improved consumer search intent with real-time ML

- From Concept to Code: How to Use AI Tools to Design and Build UI Components

- AI Agent Practice Sharing: Building an Intelligent Q&A Bot from Scratch Using FAQ Documents and LLMs

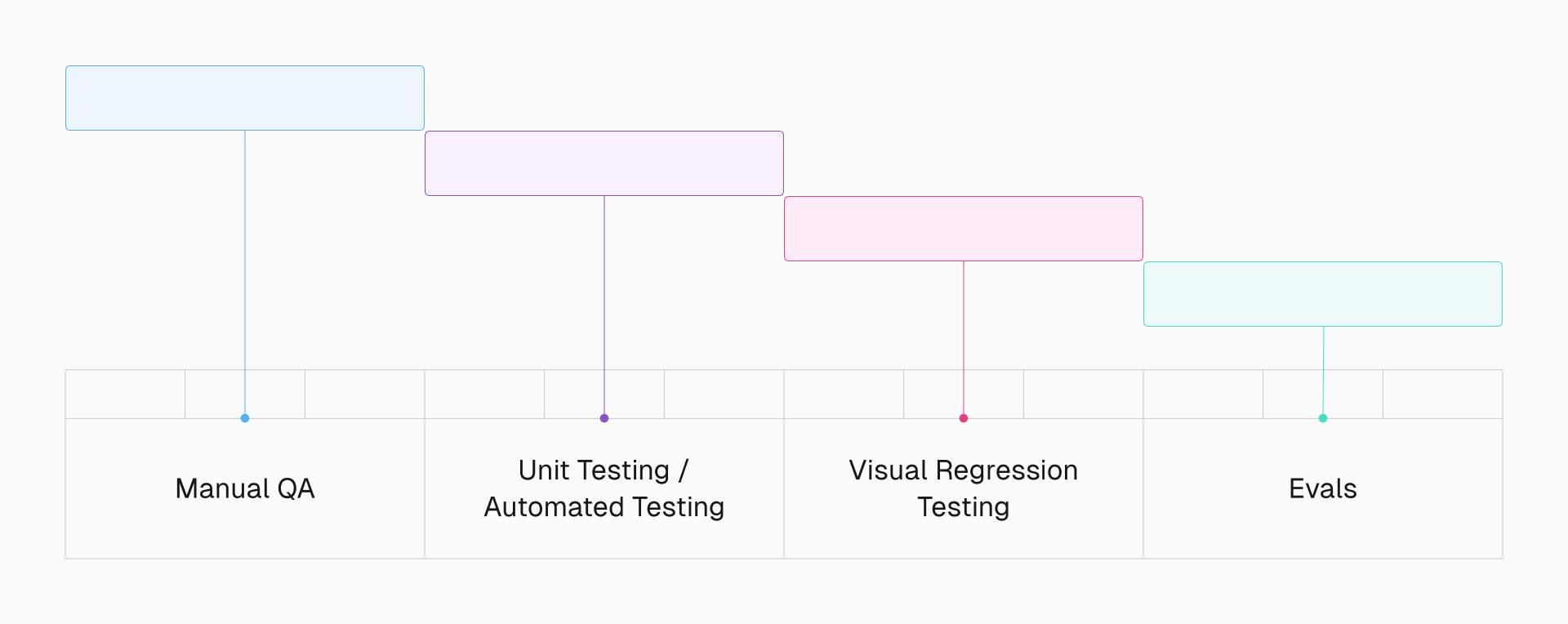

- Eval-driven development: Build better AI faster - Vercel

- 175 Practical Manuals from OpenAI

- Interview with NotebookLM Core Lead: A Project with Less Than 10 People, Experience Stunning Globally, But It's Still Just a Technical Demo

- Duolingo: A Billion-Dollar Company with Over 100 Million Monthly Active Users, a Master of User Engagement: AI Can Be Used, But Make Users Happy

- Breakout AI Hardware Founder Lü Cheng: Raised $50 Million, Shipped 100,000 Units, Opportunity Window Remains for 8 Months

- Voice-first: Reflections on Developing a Voice Product

- AI Designer Guide: Who Am I, Where Do I Come From, Where Am I Going? | Interview with Pozzo and Zhao Chen

- This AI Lets Me Chat with My 60-Year-Old Self, Healing My Mental Exhaustion

- Sequoia Capital: Wrapper Applications Lead the Application Layer, o1 Revolutionized Large Language Models, Annual Industry Report Update

- Jensen Huang's Latest Interview: Reveals Daily Use of ChatGPT, Admits to Nerves Before Every Speech

- After the Fall of 'Little Overlord', Educational Training Companies Turn to Hardware Business

- Meta’s open AI hardware vision

- The Rise of Inference Scaling in Large Models: How SambaNova Challenges NVIDIA's Dominance

01.AI Releases Flagship Pre-trained Model, Kai-Fu Lee Addresses Rumors of China's AI Six Little Tigers' Challenges: Funding and Chips Are Not the Issue

01.AI has released its flagship pre-trained model Yi-Lightning, marking a significant advancement in performance and inference speed. Addressing rumors about difficulties faced by China's AI Six Little Tigers, Kai-Fu Lee emphasized the availability of sufficient funding and chip resources. He also discussed the importance of cost control and performance optimization for large models, highlighting that the core competitiveness of such companies lies in the integration of models, AI infrastructure, and applications. Lee acknowledged that China has the potential to develop world-class pre-trained general models, but the high cost involved might limit the number of companies capable of pursuing this endeavor. 01.AI, through its model infrastructure co-construction and model-application integration strategy, aims to reduce training and inference costs, offering high-cost-performance AI models and expanding into both domestic and international markets. Lee emphasized that the six leading AI startups possess sufficient talent and resources, and funding and chip supply are not major concerns.

Let's Get This Done! Meta Releases Agent-as-a-Judge

Meta's latest research introduces the Agent-as-a-Judge framework, an innovative method for agent evaluation aimed at assessing and optimizing other agents' performance through the agents themselves. Traditional methods of agent evaluation often rely on final outcomes or extensive human effort, while Agent-as-a-Judge introduces an intermediate feedback mechanism to ensure precise evaluation and optimization at every stage of the task. The framework has performed exceptionally well in experiments. It achieved a 90.44% alignment rate with human expert evaluations, far surpassing the traditional LLM-as-a-Judge method. Additionally, Agent-as-a-Judge is significantly more efficient than human evaluators, completing 55 tasks in just 118.43 minutes at a total cost of only $30.58. The article also introduces the DevAI dataset, a new benchmark containing 55 real-world AI development tasks, designed to serve as a proof-of-concept testing platform for Agent-as-a-Judge. The DevAI dataset not only focuses on the final results of tasks but also tracks and evaluates each stage of task execution, providing more comprehensive feedback. Experimental results show that even well-performing agent systems like GPT-Pilot and OpenHands can only meet about 29% of the tasks' requirements in the DevAI dataset, highlighting the dataset's challenges.

The Strongest Small Models: Ministral 3B/8B Released

Mistral AI recently released two small AI models named Ministral 3B and 8B, designed for edge applications. These models perform exceptionally well, with the official claim that they are 'the world's best edge models'. Ministral 3B and 8B are not only more efficient in terms of computational resource requirements but also significantly more cost-effective, at $0.04 per million calls and $0.1 per million calls, respectively. Additionally, these models support private deployment and lossless compression, ensuring flexibility and performance optimization in different application scenarios. The article also provides detailed performance comparison data, showing the comparison of Ministral 3B and 8B with other similar models through tables and charts. These data indicate that despite being small models, Ministral 3B and 8B can match or even surpass larger models in several key metrics. Furthermore, Mistral AI offers multiple invocation methods, including through platforms like Azure AI, AWS Bedrock, Google Cloud Vertex AI Model Garden, Snowflake Cortex, and IBM watsonx, further enhancing the application flexibility of these models.

Alibaba International Open-Sources Ovis1.6, Outperforming GPT-4o-mini in Multiple Areas!

Alibaba International's AI team recently open-sourced the multimodal large model Ovis1.6. This model demonstrates exceptional performance on the OpenCompass benchmark, a comprehensive evaluation standard for multimodal models. Notably, Ovis1.6 outperforms the closed-source GPT-4o-mini in tasks such as mathematical reasoning and visual understanding. The core principle behind Ovis1.6 lies in structurally aligning visual and text embeddings. It comprises three key components: a visual tokenizer, a visual embedding table, and an LLM. Drawing inspiration from text embedding approaches used in large language models, Ovis introduces a learnable visual embedding table. This table converts continuous visual features into probabilistic visual tokens, which are then indexed and weighted multiple times by the visual embedding table to produce structured visual embeddings. For text processing, Ovis adopts the standard approach employed by current large language models, transforming input text into one-hot tokens. Subsequently, it retrieves the corresponding embedding vectors for each text token based on the text embedding table. Finally, Ovis concatenates all visual embedding vectors with text embedding vectors and processes them through a Transformer to accomplish multimodal tasks. Building upon Ovis1.5, Ovis1.6 further enhances its high-resolution image processing capabilities. It leverages a larger, more diverse, and higher-quality dataset for training, and optimizes the training process through a DPO fine-tuning approach that follows instructions. Architecturally, Ovis1.6 employs a dynamic subnetwork solution, enabling flexible handling of image features across different resolutions. This enhances the model's ability to tackle complex visual tasks. In terms of data, Ovis1.6 incorporates diverse datasets during training, including Caption, OCR, Table, Chart, and Math, ensuring its proficiency across a wide range of application scenarios. Regarding training strategies, Ovis1.6 continuously optimizes model performance using DPO and other schemes. This enhances the model's ability to generate text and understand complex instructions, leading to further improvements in its performance on complex tasks.

NeurIPS 2024 | OCR-Omni: A Unified Multimodal Text Understanding and Generation Large Model from ByteDance and East China Normal University

This article introduces TextHarmony, a model developed by a joint research team from ByteDance and East China Normal University. TextHarmony aims to address the challenge of unifying multimodal generation tasks within the OCR domain. By integrating visual text understanding and generation capabilities, TextHarmony achieves multimodal generation within a single model architecture. The research team identified an inherent inconsistency between visual and language modalities in multimodal generation models, leading to performance degradation. To overcome this issue, TextHarmony employs a combined architecture of ViT, MLLM, and Diffusion Model. It dynamically integrates modality-specific and modality-agnostic LoRA experts using the Slide-LoRA technique, partially decoupling the image and text generation spaces. Furthermore, the team developed the DetailedTextCaps-100K dataset, providing richer training resources focused on visual and text elements, enhancing visual text generation performance. TextHarmony demonstrates superior performance in visual text understanding, perception, generation, and editing, surpassing existing models and offering a novel solution for complex tasks in the OCR field.

Dualformer: Integrating Fast and Slow Thinking for Complex Tasks

Meta's Yuandong Tian's team has recently introduced a new model called Dualformer, which significantly improves the efficiency and accuracy of solving complex tasks by integrating fast and slow thinking modes. Dualformer builds upon the capabilities of Searchformer, a model designed for complex reasoning tasks. Searchformer excels at path planning tasks, such as solving mazes and Sokoban puzzles. Dualformer mimics slow thinking while taking shortcuts like fast thinking by training on inference trajectories and final answers and applying specific discarding strategies. This design enables the model to form a more concise chain of thought (CoT), achieving an optimal solution rate of 97.6% with a 45.5% reduction in reasoning steps in slow thinking mode. When automatically switching between fast and slow thinking modes, the optimal rate reaches 96.6% with a 59.9% reduction in reasoning steps. Additionally, Dualformer enhances performance on large models like Mistral-7B and Llama 3-8B, particularly on the Aug-MATH dataset, where the model's accuracy significantly improves. This research not only demonstrates the model's efficient problem-solving capabilities in complex tasks but also provides new insights for optimizing reasoning in large models.

Real-world Testing of 13 SORA-inspired Video Generation Models: A Comprehensive Analysis of Over 8000 Cases

This article, jointly released by Tencent AI Lab and USTC, aims to comprehensively evaluate the most cutting-edge SORA-inspired video generation models. The report focuses on assessing 13 mainstream models, including 10 closed-source and 3 latest open-source models. These models have generated over 8000 video cases, demonstrating their capabilities in text-to-video (T2V), image-to-video (I2V), and video-to-video (V2V) generation. The evaluation spans multiple dimensions, from basic capabilities to application and deployment in various fields. The article emphasizes the importance of human visual perception in evaluating these models and provides access to publicly available evaluation videos for direct comparison. Additionally, the report explores the application of these models in specific domains such as human-centered video generation, robotics, and animation interpolation (frame interpolation). It also delves into the performance gaps between open-source and closed-source models. Finally, the article outlines the challenges and future research directions in the video generation field, including complex motion understanding and generation, concept understanding, and interactive video generation.

92-Page Llama 3.1 Technical Report: A Summary

This article provides a comprehensive analysis of Meta's open-source Llama 3.1 technical report, covering key technical aspects from data processing to model training. The report highlights the significance of open-source in fostering innovation, emphasizing the impact of Llama 3.1's open-source release on the NLP field. The article explores challenges related to data scarcity, the use of synthetic data, model scaling, and complexity management. In terms of model training, Meta has enhanced large-scale model performance through scaling and hyperparameter optimization, addressing technical challenges related to training stability, data volume, and model parameters. Hardware-wise, Meta has deployed a large-scale H100 GPU cluster and implemented targeted network communication and parallel computing optimizations. The report also details Llama 3.1's innovations in multi-modal data processing, model structure optimization, and data security. Overall, the Llama 3.1 technical report showcases Meta's comprehensive approach and innovative thinking in large-scale model training.

LightRAG: A Simple and Efficient RAG System Developed by Huang Chao's Team at the University of Hong Kong, Significantly Reducing the Cost of Retrieval Augmentation for Large Language Models

LightRAG, an efficient retrieval-augmented generation (RAG) system developed by Huang Chao's team at the University of Hong Kong, aims to reduce the cost of information retrieval for large language models while improving retrieval accuracy and efficiency. LightRAG introduces a graph structure and a two-tier retrieval framework to better capture complex dependencies between entities, enabling comprehensive information understanding. Its layered retrieval strategy allows the system to handle both specific and abstract queries, ensuring users receive both relevant and rich responses. Additionally, LightRAG can quickly adapt to new data using incremental update algorithms. Instead of reprocessing the entire external database, it utilizes a graph-based indexing step to process new data and then merges the new graph data with the original data, maintaining efficiency and accuracy in dynamic environments. Experimental results show that LightRAG significantly outperforms baseline models in handling large-scale corpora and complex queries, particularly in terms of diversity metrics.

Alibaba Cloud Open-Sources AI Application Development Framework: Spring AI Alibaba

Alibaba Cloud recently open-sourced the Spring AI Alibaba framework, designed for Java developers to accelerate AI application development. This framework, built upon Spring AI, deeply integrates with Alibaba Cloud's Tongyi series models and services, offering high-level AI API abstractions and cloud-native infrastructure integration solutions. Key features of Spring AI Alibaba include:

- Agent Development Framework: Specifically designed for Spring and Java developers, simplifying the process of building AI agents.

- Abstraction of Common AI Agent Development Paradigm: Provides a comprehensive abstraction, encompassing atomic capabilities like dialog model access, prompt templates, and function calls, as well as high-level abstractions such as agent orchestration and dialog memory.

- Deep Integration with Tongyi Series Models: Offers seamless integration with the Tongyi series models, providing best practices for application deployment and operation, including gateway, configuration management, deployment, and observability.

- Complete AI Application Development Abstraction: Supports various interaction modes, including text, image, and voice, and communication modes like synchronous, asynchronous, and streaming.

- Prompt Template Management Abstraction: Enables developers to pre-define templates and replace keywords at runtime.

The article showcases the ease of developing AI applications with Spring AI Alibaba using the 'Intelligent Flight Assistant' example. It delves into the framework's core concepts and API definitions, including chat models, prompts, formatted outputs, function calls, and Retrieval-Augmented Generation (RAG). Additionally, it provides Hello World examples and practical implementations of the Intelligent Flight Assistant, demonstrating how to quickly develop generative AI applications using Spring AI Alibaba.

How Shopify improved consumer search intent with real-time ML

Shopify integrated AI-powered semantic search to improve the relevance of search results by understanding consumer intent beyond keyword matching. This was achieved through real-time embeddings, which convert text and images into numerical vectors for more accurate search matching. The implementation involved building ML assets and designing real-time streaming pipelines using Google Cloud's Dataflow, handling approximately 2,500 embeddings per second. Challenges included memory management and model optimization, addressed through strategic adjustments in worker configurations and batch processing. The result is a seamless shopping experience for consumers and increased sales for merchants.

From Concept to Code: How to Use AI Tools to Design and Build UI Components

The article from freeCodeCamp.org titled 'From Concept to Code: How to Use AI Tools to Design and Build UI Components' explores the use of AI tools to accelerate UI component design and development. It emphasizes the importance of UI/UX design in website development and cites a study showing a 400% increase in website conversions from improved UX design. The core of the article is a tutorial on using Sourcegraph's Cody AI tool and Tailwind CSS to quickly build UI components. Cody assists in understanding, writing, and fixing code by accessing the entire codebase and referencing documentation, while Tailwind CSS is a utility-first CSS framework that facilitates rapid UI development. The tutorial includes setting up the development environment, creating various UI components like Header, Footer, TodoContainer, and TodoItem, and managing existing codebases with Cody.

AI Agent Practice Sharing: Building an Intelligent Q&A Bot from Scratch Using FAQ Documents and LLMs

This article details how to build an intelligent question-answering system from scratch using FAQ documents and Large Language Models (LLMs) through two practical cases. It highlights the core role of LLMs in building intelligent systems while acknowledging challenges such as hallucinations, difficulty following instructions, and high computational costs. The article then introduces technical frameworks like FunctionCall, RAG, few-shot learning, Supervised Fine-Tuning (SFT), and AI Agent platforms, which simplify the use of LLMs. However, practical applications still face issues like large model hallucinations. Optimizing prompts and plugin configurations can partially address these issues, but further fine-tuning and optimization are necessary. The article also discusses the commercialization of intelligent question-answering systems, suggesting the use of LLMs with larger parameter scales for optimization. Finally, it invites readers to discuss further applications of LLMs in customer service systems, such as knowledge base construction, similar question generation, and automated evaluation.

Eval-driven development: Build better AI faster - Vercel

The article introduces eval-driven development as a new paradigm in AI-native software development, particularly at Vercel. Traditional testing methods are insufficient for AI's unpredictable nature, leading to the need for a more flexible and continuous evaluation process. Evals, or evaluations, are described as end-to-end tests for AI systems that assess output quality against defined criteria using automated checks, human judgment, and AI-assisted grading. This approach recognizes the inherent variability in AI outputs and focuses on overall performance rather than individual code paths. Vercel's flagship AI product, v0, exemplifies this approach, demonstrating how evals catch errors early, speed up iteration, and drive continuous improvement based on real-world feedback.

175 Practical Manuals from OpenAI

OpenAI has released a series of practical manuals aimed at helping developers optimize and expand AI applications. These manuals cover multiple key areas, including agent coordination, prompt caching optimization, structured output techniques, autonomous browsing tool construction, ChatGPT integration with various platforms (such as Canvas LMS, Retool, Snowflake, Google Cloud Function, Google Drive, Redshift, AWS, Zapier, Box, GCP, Google Cloud, Confluence, SQL database, Notion, Gmail, Jira, Salesforce, Outlook, weather API, BigQuery, SharePoint, etc.), and inference-driven data validation methods. Additionally, the manuals provide tutorials and application cases for fine-tuning the GPT-4o mini model, showcasing its powerful capabilities in clothing matching, image tagging, and multimodal AI model parsing. Through these practical guides, developers can build and deploy AI applications more efficiently, enhancing system robustness and performance to meet different business needs.

Interview with NotebookLM Core Lead: A Project with Less Than 10 People, Experience Stunning Globally, But It's Still Just a Technical Demo

Google's NotebookLM project initially started as a test project developed by a small team during their spare time, combining audio models and content studios to achieve impressive audio summarization features. Project lead Raiza Martin shared the journey of NotebookLM from an internal project to a popular product, emphasizing new trends in voice interaction and AI-generated content. The team continuously iterated and optimized the product by observing user behaviors and needs, highlighting the importance of an entrepreneurial mindset and agile development. NotebookLM operates within Google as a startup, with streamlined processes and high efficiency, engaging with users through the Discord community, resulting in significant growth in user retention and diversity. Future plans include exploring commercialization paths, considering distribution, monetization, and commercialization. Raiza Martin also discussed future product directions, particularly the vision for an AI editing interface and improvements in mobile experiences. Additionally, the article delves into users' curiosity and experimentation with AI technology, and how the team ensures the safety of AI applications through red team testing.

Duolingo: A Billion-Dollar Company with Over 100 Million Monthly Active Users, a Master of User Engagement: AI Can Be Used, But Make Users Happy

Duolingo utilizes AI and gamification strategies to enhance language learning experiences, prioritizing user engagement and retention over pure learning efficiency. The article delves into Duolingo's business model, user data, technology applications, and market strategies. Duolingo enhances user engagement through AI video calls and adventure game features, discovering that users prefer interacting with AI over real people. The company primarily generates revenue through a subscription model, with subscription users contributing over 80% of the revenue. Duolingo's revenue on the iOS platform is significantly higher than on Android, primarily due to iOS users' higher average spending. Additionally, Duolingo successfully attracts global users through thorough localization and social media marketing, without significant investment in traditional marketing, relying mainly on word-of-mouth and social media dissemination. The article also discusses Duolingo's educational effectiveness evaluation, decision-making for adding new languages, and AI applications in language and math teaching.

Breakout AI Hardware Founder Lü Cheng: Raised $50 Million, Shipped 100,000 Units, Opportunity Window Remains for 8 Months

In an interview, Rabbit founder Lü Cheng delved into the market performance, fundraising, and collaboration strategies of his AI hardware product R1, particularly with companies like OpenAI. R1 boasts a profit margin exceeding 40% and has shipped over 100,000 units. However, it faces legal challenges related to active user counts and application authorization. Lü Cheng emphasizes that competing directly with giants like OpenAI is unrealistic, making collaboration a more effective strategy. R1 integrates with services like Spotify through virtual machine and VNC technologies, bypassing API rules and simplifying early-stage partnerships. Lü Cheng also highlighted R1's positive user engagement, low return rates, and a significant proportion of active users. The launch of LAM Playground marks a crucial step towards a universal cross-platform agent system. Despite challenges in technical implementation and partner relationships, the Rabbit team remains focused on user needs and product functionality. Lü Cheng further discussed the form, design philosophy, and production challenges of AI hardware, emphasizing the gap between AI model intelligence and infrastructure. He shared experiences of collaborating with Teenage Engineering to design R1, highlighting the importance of a rapid and efficient hardware design process.

Voice-first: Reflections on Developing a Voice Product

This article examines the importance and potential of voice interaction in product design from multiple perspectives. Firstly, the author emphasizes that voice, as the earliest form of human communication, possesses natural and emotional expression advantages, making it suitable for applications such as search, emotional healing, social interaction, and music creation. Secondly, the article analyzes the core transformative potential of voice products in the C-end market, highlighting that the naturalness of voice interaction, its adaptability to multitasking, and its efficient information transmission make it an ideal means of human-computer interaction. Additionally, the author compares the strengths and weaknesses of voice-first interfaces (LUI) and graphical user interfaces (GUI), providing five self-assessment questions to determine which interface form is appropriate for specific product scenarios. Finally, the article looks ahead to the vast potential of voice technology in AI applications, particularly in the B-end and C-end markets, such as customer service, sales, healing, coaching, and companionship, making it a hot topic of interest for capital markets and users.

AI Designer Guide: Who Am I, Where Do I Come From, Where Am I Going? | Interview with Pozzo and Zhao Chen

This article is a deep interview about the application of AI in the design field, inviting ByteDance's Pozzo and ZAX Studio's Zhao Chen as guests to share their experiences and insights on using AI in the design industry. The article first introduces the professional backgrounds of the two designers and their views on the application of AI in design, including their experiences at Alibaba and Hema, and stories of using AI to generate Mid-Autumn Festival posters and participating in AI painting competitions. Subsequently, the article explores the application of AI in UI/UX product design, particularly the impact of Generative AI on designers' work, and how AI has changed the traditional design workflow, enabling designers to present their creativity more efficiently and become 'super individuals'. The article also discusses the application of AI in the design field, particularly how AI enhances design efficiency and creativity, reduces intermediate losses, and stimulates designers' inspiration, emphasizing the importance of human-AI collaboration. Finally, the article explores the impact of AI on designers' hiring standards, emphasizing the importance of soft skills such as aesthetics, curiosity, and creativity, and pointing out that AI lowers the technical threshold but raises the bar for content creation.

This AI Lets Me Chat with My 60-Year-Old Self, Healing My Mental Exhaustion

The article details MIT's AI project 'Future You', which simulates a 60-year-old version of the user to chat with, helping them understand their future self and thereby reduce anxiety and enhance well-being. Users first need to fill in their basic information, mood, life experiences, and then imagine their life at 60. This information is fed into a large language model to generate a virtual 60-year-old 'memory'. Users can also upload selfies, and the system will generate an aged photo to enhance the realism of the conversation. The article, through the author's experience of chatting with the AI, demonstrates how the AI uses role-playing and encouraging words to help users accept and love themselves. Additionally, the article cites the survey results and papers from the Future You team, proving that chatting with the AI indeed improves users' well-being and connection to their future self. Finally, the article explores the potential applications of AI in mental health counseling, self-fulfilling prophecies, and virtual selves, and reminds users not to over-rely on AI and to focus on current actions.

Sequoia Capital: Wrapper Applications Lead the Application Layer, o1 Revolutionized Large Language Models, Annual Industry Report Update

Sequoia Capital's annual report examines the evolution of the Generative AI market, particularly the transition from foundational models to reasoning-focused applications. The report emphasizes the critical role of inferential ability in AI advancement and highlights the importance of wrapper applications in building lasting value. It also predicts that AI will bring about significant changes to the software industry, similar to the impact of Software as a Service (SaaS), and shows the attractiveness of the application layer to venture capital.

Jensen Huang's Latest Interview: Reveals Daily Use of ChatGPT, Admits to Nerves Before Every Speech

In the interview, Jensen Huang discussed NVIDIA's multifaceted development strategies and future outlook. He shared NVIDIA's hiring strategies, emphasizing background checks and in-depth interviews to evaluate candidates, as well as the importance of transparency and employee involvement in decision-making. He also reviewed NVIDIA's system transformation journey from SHIELD to DGX-1, highlighting the company's comprehensive capabilities from hardware to software, and looking forward to the new industrial revolution brought by Artificial Intelligence. Jensen Huang discussed the rapid development of the computer industry and technological innovation, emphasizing the advancement of technology through system design and architectural thinking, and mentioning the future potential of AI services like ChatGPT. Finally, he shared his daily experience of using ChatGPT and emphasized NVIDIA's work in AI education and research, particularly the development of Physical AI.

After the Fall of 'Little Overlord', Educational Training Companies Turn to Hardware Business

The article traces the history of 'Little Overlord' learning machines, highlighting their enduring popularity despite the widespread adoption of smartphones. It analyzes the growth trend of the learning device market, particularly the surge in sales following the 'Double Reduction' policy. This policy, aimed at reducing academic pressure on students, has led to a significant increase in demand for supplementary learning resources, driving the growth of the learning device market. The article further explores the competitive landscape of the learning device market, noting the emergence of educational training companies like Xueersi (Learn and Think), Yuanfudao (Ape Tutor), and New Oriental as key players. These companies leverage their existing resources and expertise in education to develop and market innovative learning devices. The article discusses the impact of AI technology on the development of educational hardware, highlighting the use of large language models (LLMs) in providing personalized learning experiences. These LLMs offer features like problem-solving, dialogue, correction, lecturing, and recommendations, enhancing the effectiveness of learning devices. The article emphasizes the importance of personalized tutoring and teaching according to individual aptitude, enabled by AI technology. It also explores the potential for educational training companies to reshape the educational hardware market by leveraging AI technology. Finally, the article discusses the challenges facing the learning device market, including balancing parental needs with the educational needs of children and maintaining a competitive edge in the rapidly evolving technological landscape.

Meta’s open AI hardware vision

At the Open Compute Project (OCP) Global Summit 2024, Meta showcased its latest open AI hardware designs, emphasizing collaboration and innovation in advancing AI infrastructure. Key innovations include the new Catalina rack, designed for AI workloads, and the expanded Grand Teton platform supporting AMD accelerators. Meta's commitment to open hardware is driven by the need to support large-scale AI models like Llama 3.1 405B, which required substantial optimizations across their training stack. The article also highlights Meta's collaboration with Microsoft on disaggregated power racks and its ongoing commitment to open source AI, emphasizing the importance of open hardware systems for delivering high-performance, cost-effective, and adaptable infrastructure necessary for AI advancement.

The Rise of Inference Scaling in Large Models: How SambaNova Challenges NVIDIA's Dominance

OpenAI's release of the o1 model marked a significant advancement in the AI field, demonstrating a breakthrough in general inference capability. This success signifies a paradigm shift in large model evolution, moving from 'Training Scaling' to 'Inference Scaling'. This transition necessitates increased computational resources for inference and optimized hardware configurations to enhance efficiency. The article analyzes the limitations of GPUs in inference and introduces SambaNova's RDU chip, featuring a dynamically reconfigurable dataflow architecture. This architecture optimizes performance and efficiency through parallel processing and efficient data movement. SambaNova's latest RDU product, SN40L, showcased at the Hot Chips Conference, achieved an inference speed exceeding 100 tokens per second on the Llama 3.1 405B model and demonstrated throughput scaling on the Llama 3.1 70B model. The article further explores the advantages of dataflow architecture over traditional GPUs, emphasizing its ability to drive computation through data flow, enabling parallel processing and significantly improving computational performance. Finally, the article discusses SambaNova's position as a strong competitor to NVIDIA in the AI chip market, highlighting the potential impact of future large model inference scaling on the AI landscape.